Have you ever looked at a stunning night cityscape and wondered how much of that magic could truly be captured with a smartphone?

In recent years, night photography on phones has evolved from a compromise into a creative frontier, and in 2025–2026 this transformation has accelerated dramatically. Thanks to larger sensors, breakthroughs in dynamic range technology, and the rise of AI-powered image processing, smartphones are no longer just recording darkness but actively reconstructing light.

For gadget enthusiasts outside Japan who care deeply about camera performance, this article will help you understand what actually makes modern phones excel at night. By exploring sensor physics, optical design, computational photography, and real-world shooting workflows, you will gain the knowledge needed to choose the right device and techniques for your style. If you want to shoot night scenes with confidence rather than rely on luck, this guide is designed for you.

- Why Smartphone Night Photography Has Reached a Turning Point

- The Rise of 1-Inch Sensors and What They Mean for Low-Light Imaging

- Expanding Dynamic Range with LOFIC and Next-Generation HDR Sensors

- Lens Engineering in the Dark: Fixed vs Variable Aperture Strategies

- The Telephoto Night Revolution Powered by 200MP Sensors

- How AI and Neural ISPs Rebuild Detail in Extreme Darkness

- Different Computational Approaches from Apple, Vivo, Google, and Samsung

- Professional Mobile Workflows: RAW Files, AI Denoising, and Editing Tools

- Essential Accessories That Dramatically Improve Night Results

- Field Techniques and Etiquette for Urban Night Photography

- References

Why Smartphone Night Photography Has Reached a Turning Point

Smartphone night photography has reached a genuine turning point, and it is not a matter of incremental improvement but of structural change. **Until recently, low-light photography on phones relied on compromise**, balancing noise reduction against lost detail. That balance has now shifted because hardware and computation are advancing together instead of separately.

The most visible trigger is the commoditization of large sensors. According to analyses by DXOMARK and Sony Semiconductor Solutions, 1-inch sensors are no longer exotic experiments but stable, mass-produced components. This directly increases photon capture at night, reducing reliance on aggressive software smoothing and preserving natural texture in shadows.

At the same time, dynamic range has expanded beyond what multi-frame HDR alone could deliver. Technologies such as LOFIC, evaluated in Huawei’s flagship cameras by DXOMARK, store excess charge inside each pixel instead of discarding it. **Bright neon signs and deep shadows can now coexist in a single exposure**, something that was previously impossible without visible artifacts.

| Era | Main Limitation | Night Result |
|---|---|---|
| Pre-2023 | Small sensors, basic HDR | Noise or blown highlights |
| 2024–2026 | Large sensors + LOFIC/HF-HDR | Balanced highlights and shadows |

The other decisive factor is the rise of neural ISPs. Apple’s DarkDiff research, published on arXiv, shows how diffusion models can reconstruct detail instead of merely suppressing noise. This marks a philosophical shift: the phone is no longer correcting darkness but interpreting it.

Because these advances are grounded in published research and shipping products, the shift looks durable rather than experimental. **Night photography has moved from a special mode into a core capability**, signaling a true inflection point for smartphone imaging.

The Rise of 1-Inch Sensors and What They Mean for Low-Light Imaging

The rapid rise of 1-inch sensors in smartphones marks a fundamental shift in how low-light imaging is approached, and it is best understood from a physics-first perspective. **A larger sensor physically captures more photons**, and this advantage cannot be replicated purely through software. According to Sony Semiconductor Solutions, moving from a typical 1/1.3-inch sensor to a full 1-inch format increases the light-receiving area by roughly 80 percent, directly improving signal-to-noise ratio in dark scenes.

This matters most at night, where every additional photon reduces reliance on aggressive noise reduction. Devices like Xiaomi’s Ultra series, built around Sony’s LYT-900, demonstrate how stacked sensor designs separate photodiodes from circuitry, maximizing effective pixel area. **The result is cleaner shadow detail and more stable color reproduction under streetlights**, even before computational processing begins.

| Sensor Type | Relative Area | Low-Light Impact |
|---|---|---|
| 1/1.56-inch | Baseline | Higher noise, heavier AI smoothing |
| 1/1.3-inch | ~1.5x | Improved night mode performance |
| 1-inch | ~2.3x | Stronger native brightness and tonal depth |
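The relative areas above can be sanity-checked with simple optical-format arithmetic. The sketch below is only a rough approximation: it assumes every sensor shares the same aspect ratio, so area scales with the square of the nominal format size, and it assumes a shot-noise-limited scene where SNR grows with the square root of the collected light. The function names are illustrative. Real sensor dimensions differ by model, so the results land near, not exactly on, the quoted figures.

```python
# Nominal optical-format arithmetic; real sensor dimensions vary by model.

def relative_area(format_denominator: float, baseline_denominator: float) -> float:
    """Area ratio of a 1/x-inch sensor versus a 1/y-inch baseline.

    Assumes equal aspect ratios, so area scales with the square of the
    nominal format size (1 / denominator).
    """
    return (baseline_denominator / format_denominator) ** 2


def shot_noise_snr_gain(area_ratio: float) -> float:
    """SNR gain in the shot-noise-limited regime, where SNR ~ sqrt(photons)."""
    return area_ratio ** 0.5


for label, denom in [("1/1.56-inch", 1.56), ("1/1.3-inch", 1.3), ("1-inch", 1.0)]:
    ratio = relative_area(denom, baseline_denominator=1.56)
    print(f"{label}: {ratio:.2f}x area, {shot_noise_snr_gain(ratio):.2f}x SNR")
```

Under these assumptions the 1/1.3-inch format comes out at about 1.44x the baseline area and the 1-inch format at about 2.43x, which is in the same range as the approximate values in the table.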

Independent testing organizations such as DXOMARK consistently report that 1-inch sensor phones retain more texture in near-dark conditions, particularly in foliage, signage, and skin tones. This is because higher full-well capacity allows highlights like neon signs to be preserved without crushing nearby shadows. **Dynamic range at base ISO improves before HDR stacking is even applied**, giving computational photography a cleaner foundation.

Another often overlooked benefit is readout efficiency. Faster readout, highlighted in Sony’s technical disclosures, reduces motion artifacts during multi-frame night capture. In practical terms, this means fewer ghosting errors when people or vehicles move through the frame. For users, it translates into sharper handheld night shots with more natural contrast, reinforcing why 1-inch sensors are becoming the new reference point for serious low-light smartphone imaging.

Expanding Dynamic Range with LOFIC and Next-Generation HDR Sensors

Expanding dynamic range has become one of the most decisive factors in modern mobile night photography, and recent sensor innovations show that this challenge is now being addressed at the pixel level rather than relying solely on software. In high-contrast night scenes, such as neon-lit streets or cityscapes with deep shadows, conventional sensors have long struggled with highlight clipping and crushed blacks. **LOFIC and next-generation HDR sensors directly confront this physical limitation by redesigning how excess light is handled inside each pixel**, which fundamentally changes what smartphones can capture after dark.

LOFIC, short for Lateral OverFlow Integration Capacitor, introduces an additional charge storage path within each pixel. Instead of discarding excess electrons when the photodiode saturates, the sensor temporarily stores this overflow in a dedicated capacitor. According to camera evaluation analyses published by DXOMARK, this structure preserves highlight color information that would otherwise be lost, while still maintaining sensitivity in shadow regions. In practical terms, bright signage retains hue and texture, even as darker building facades remain detailed and noise-controlled.
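The overflow idea can be illustrated with a toy pixel model. This is a simplified sketch, not a description of any real sensor: the capacities `FULL_WELL_E` and `OVERFLOW_CAP_E` are hypothetical numbers chosen for illustration, and noise, conversion gain, and readout are ignored entirely.

```python
FULL_WELL_E = 10_000       # hypothetical photodiode capacity, in electrons
OVERFLOW_CAP_E = 150_000   # hypothetical overflow capacitor capacity, in electrons


def conventional_pixel(incoming_e: int) -> int:
    """Charge above the full-well capacity is simply lost (clipped)."""
    return min(incoming_e, FULL_WELL_E)


def lofic_pixel(incoming_e: int) -> int:
    """Charge above the full-well capacity spills into the overflow capacitor."""
    in_photodiode = min(incoming_e, FULL_WELL_E)
    overflow = min(max(incoming_e - FULL_WELL_E, 0), OVERFLOW_CAP_E)
    return in_photodiode + overflow


for name, electrons in [("shadow", 800), ("neon edge", 50_000), ("neon core", 120_000)]:
    print(f"{name}: conventional={conventional_pixel(electrons)}, "
          f"lofic={lofic_pixel(electrons)}")
```

In this toy model the two neon regions become indistinguishable on the conventional pixel, because both clip at the full-well value, while the overflow path keeps them separate. The shadow value is identical in both cases, which mirrors the claim that shadow sensitivity is maintained.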

| Technology | Core Mechanism | Primary Benefit in Night Scenes |
|---|---|---|
| LOFIC | Overflow charge stored in per-pixel capacitor | Prevents highlight clipping while preserving shadows |
| HF-HDR | Sensor-level fusion of short exposure and DCG data | Stable HDR in video and zoomed night shooting |

Sony’s latest HDR approach takes a different but complementary path. With sensors such as the LYT-828, Sony integrates Hybrid Frame-HDR directly into the sensor readout pipeline. This method combines dual conversion gain data with short-exposure frames before the signal reaches the application processor. Sony Semiconductor Solutions has stated that this enables dynamic range exceeding 100 dB, which is especially important for night video where sudden brightness changes occur during panning or zooming. **The key advantage is continuity: HDR does not disengage during zoom or motion**, a weakness of earlier multi-frame HDR systems.
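To put the 100 dB figure into photographic terms, a decibel value can be converted into a contrast ratio and into stops. The sketch below assumes the common image-sensor convention of 20·log10 for signal ratios; the function names are illustrative.

```python
import math


def db_to_contrast_ratio(db: float) -> float:
    """Convert sensor dynamic range in dB to a linear ratio (20*log10 convention)."""
    return 10 ** (db / 20)


def db_to_stops(db: float) -> float:
    """Convert dynamic range in dB to photographic stops (doublings of light)."""
    return math.log2(db_to_contrast_ratio(db))


print(f"100 dB = {db_to_contrast_ratio(100):,.0f}:1 contrast, "
      f"about {db_to_stops(100):.1f} stops")
```

With this convention, each stop is worth roughly 6 dB, so 100 dB corresponds to a contrast ratio of 100,000:1, or about 16.6 stops.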

What makes these developments particularly important is that they reduce dependence on aggressive tone mapping later in the imaging pipeline. Researchers in computational photography have noted that preserving dynamic range at capture time leads to more natural textures after noise reduction and AI enhancement. By keeping highlight and shadow data intact, neural ISPs can focus on refinement rather than reconstruction, resulting in images that look closer to human perception instead of overly processed composites.

Looking ahead, analysts cited in Apple-focused coverage suggest that LOFIC-like structures may become standard in flagship sensors across platforms. This shift signals a broader philosophy change: rather than correcting exposure mistakes after the fact, next-generation HDR sensors aim to avoid losing information in the first place. **For night photography enthusiasts, this means scenes with extreme contrast can finally be captured in a single, coherent exposure**, with less reliance on guesswork by algorithms and more faithful rendering of the light that was truly there.

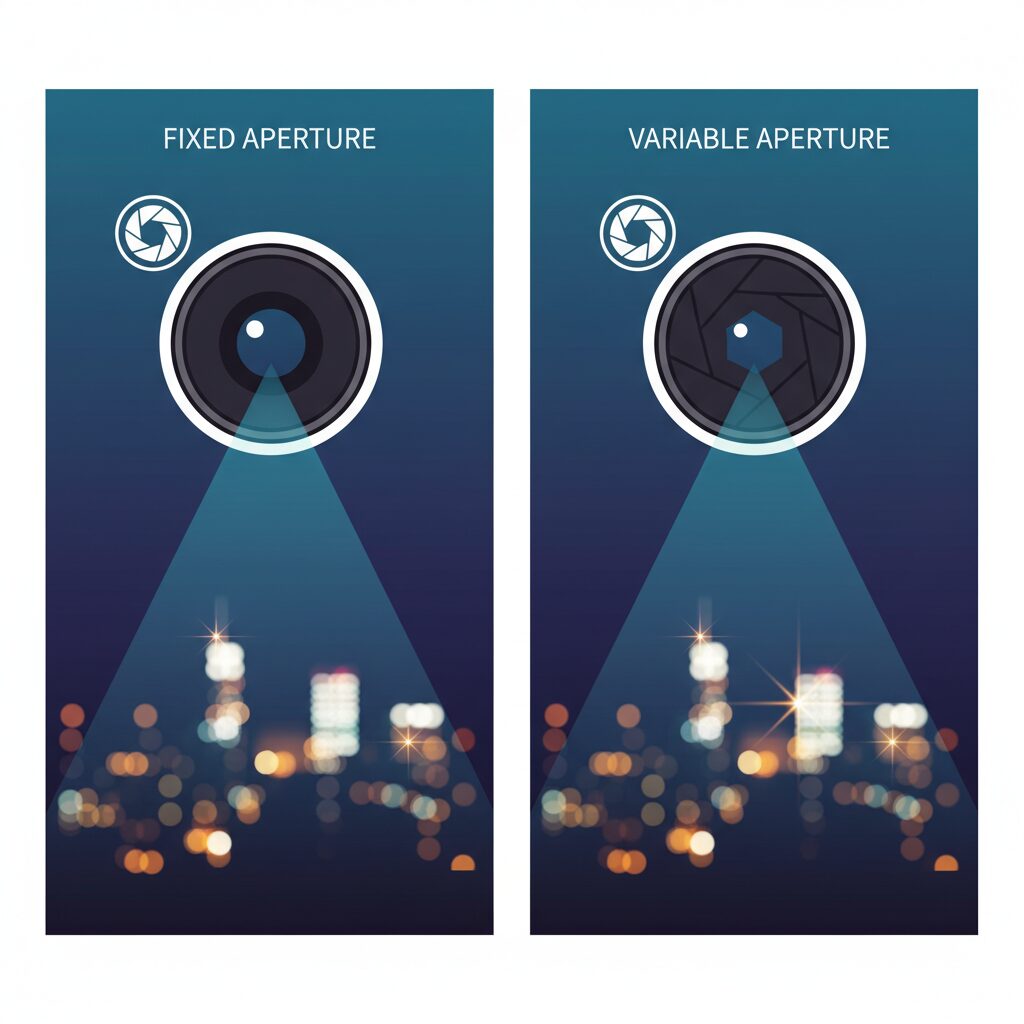

Lens Engineering in the Dark: Fixed vs Variable Aperture Strategies

When shooting in near darkness, aperture design becomes a quiet but decisive factor that shapes not only exposure, but also the character of light itself. In mobile imaging, the debate between fixed and variable aperture strategies is not about specs alone, but about how much physical control engineers are willing to preserve in an era dominated by computation.

Fixed-aperture lenses, such as the F1.63 main camera adopted by Xiaomi 15 Ultra, prioritize maximum light intake at all times. **From a low-light engineering perspective, this choice optimizes photon efficiency and simplifies optical alignment**, which is critical when pairing a 1-inch sensor with an already bulky lens stack. According to evaluations by DXOMARK, brighter fixed apertures consistently deliver lower ISO requirements in night scenes, reducing read noise before any AI processing is applied.

However, this efficiency comes with a trade-off that becomes visible specifically at night. Point light sources like street lamps or LEDs tend to bloom into soft orbs, because the lens cannot physically stop down. Computational sharpening cannot recreate diffraction-based starbursts, a limitation also noted by optical engineers interviewed by CNET when discussing Xiaomi’s design reversal.

| Design Strategy | Low-Light Advantage | Night Scene Limitation |
|---|---|---|
| Fixed Aperture | Maximum light capture, simpler optics | No physical control over light points |
| Variable Aperture | Adaptive exposure and depth control | Thicker lens module, mechanical complexity |

Variable aperture systems, exemplified by Huawei Pura 80 Ultra’s F1.6–F4.0 range, take the opposite stance. **They sacrifice optical simplicity to retain physical authority over light behavior**, something software alone cannot fully emulate. By stopping down at night, photographers can deliberately generate diffraction spikes and maintain highlight structure, even before LOFIC-based HDR processing comes into play.
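The exposure cost of stopping down follows directly from the f-numbers. The sketch below uses the standard relationship that light gathered scales with the inverse square of the f-number. The ISO example is an assumed scenario with the shutter speed held constant, and the function names are illustrative.

```python
import math


def light_ratio(f_wide: float, f_narrow: float) -> float:
    """How many times more light the wider aperture gathers."""
    return (f_narrow / f_wide) ** 2


def stops_between(f_wide: float, f_narrow: float) -> float:
    """Exposure difference in stops between two f-numbers."""
    return math.log2(light_ratio(f_wide, f_narrow))


def compensated_iso(base_iso: float, f_wide: float, f_narrow: float) -> float:
    """ISO needed at the narrow aperture to match exposure at equal shutter speed."""
    return base_iso * light_ratio(f_wide, f_narrow)


print(f"f/1.6 -> f/4.0: {stops_between(1.6, 4.0):.2f} stops, "
      f"{light_ratio(1.6, 4.0):.2f}x less light")
print(f"ISO 400 at f/1.6 needs about ISO {compensated_iso(400, 1.6, 4.0):.0f} at f/4.0")
```

Across the F1.6–F4.0 range this works out to roughly 2.6 stops, which is why stopping down for starbursts at night usually calls for a longer exposure, a higher ISO, or physical stabilization.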

NotebookCheck’s analysis highlights another subtle advantage: stopping down slightly can improve edge-to-edge sharpness on large sensors, which is especially noticeable in cityscapes where fine architectural lines extend across the frame. This matters in night photography, where contrast is already fragile and optical softness is harder to hide.

From an engineering standpoint, the real constraint is space. Variable aperture mechanisms require moving blades, additional actuators, and tighter tolerances. With periscope telephoto modules growing to accommodate 200MP-class sensors, manufacturers are forced into hard prioritization. **The absence or presence of variable aperture therefore reflects a system-level decision, not a philosophical one.**

In the dark, these choices reveal their intent clearly. Fixed apertures trust sensors and neural ISPs to resolve everything later, while variable apertures insist that some aspects of night still belong to physics. Neither approach is universally superior, but each defines a distinct visual signature that no amount of post-processing can completely erase.

The Telephoto Night Revolution Powered by 200MP Sensors

The arrival of 200MP telephoto sensors has fundamentally changed what night photography with smartphones can achieve, especially in scenes that were once considered impossible without professional gear. Telephoto lenses traditionally struggled after sunset due to smaller apertures and limited photon intake, but ultra-high-resolution sensors have reframed the problem from optics alone to a data-driven approach.

By capturing an overwhelming amount of spatial information, 200MP sensors turn darkness into something that can be statistically reconstructed rather than merely recorded. This shift is particularly impactful for night cityscapes, distant architecture, and compressed-perspective scenes where light is scarce but detail density is high.

From a technical standpoint, the key lies in pixel binning. Sensors such as the 1/1.4-inch 200MP units used in flagship periscope modules combine multiple pixels into one large virtual pixel in low light. A 4×4 binning pattern outputs roughly 12.5MP images with an effective pixel size exceeding 2µm, dramatically improving signal-to-noise ratio without sacrificing resolving power when light levels recover.
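The binning arithmetic can be written out explicitly. In the sketch below, the native pixel pitch of 0.56 µm is an assumption typical of 1/1.4-inch 200MP sensors rather than a figure from a specific datasheet. The SNR gain assumes the shot-noise-limited case, where combining n pixels improves SNR by the square root of n.

```python
def binned_output(native_mp: float, native_pitch_um: float, bin_factor: int) -> dict:
    """Resolution and effective pixel size after bin_factor x bin_factor binning."""
    pixels_per_bin = bin_factor ** 2
    return {
        "output_mp": native_mp / pixels_per_bin,
        "effective_pitch_um": native_pitch_um * bin_factor,
        # sqrt(pixels_per_bin) equals bin_factor in the shot-noise-limited case
        "shot_noise_snr_gain": float(bin_factor),
    }


# 0.56 um is an assumed native pitch for a 1/1.4-inch 200MP sensor.
result = binned_output(native_mp=200, native_pitch_um=0.56, bin_factor=4)
print(result)
```

With these inputs, 4×4 binning yields 12.5MP output and an effective pixel pitch of about 2.24 µm, consistent with the "exceeding 2µm" figure above.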

This means telephoto night shots are no longer a compromise between reach and clarity. According to DXOMARK’s low-light evaluations, high-resolution telephoto modules now retain readable signage, window textures, and light gradients at focal lengths where older 10MP or 12MP telephotos collapsed into noise.

| Telephoto Sensor Type | Typical Night Output | Practical Result After Dark |
|---|---|---|
| 12MP Telephoto | Single-frame, limited binning | Soft detail, aggressive noise reduction |
| 48MP Telephoto | Quad-binning | Moderate detail, improved brightness |
| 200MP Telephoto | 16-in-1 binning with multi-frame fusion | High micro-detail and stable highlights |

Optics also play a decisive role. Vivo’s collaboration with Zeiss on APO-designed telephoto lenses is frequently cited by optical engineers for its strict suppression of longitudinal chromatic aberration. In night scenes filled with point light sources, this correction prevents purple and green fringing around lamps and aircraft warning lights, preserving edge integrity even before computational processing begins.

The real breakthrough, however, emerges when these sensors are paired with modern neural ISPs. Multi-frame alignment at long focal lengths was once unreliable due to handshake amplification, but faster sensor readout and AI-based motion estimation now allow consistent stacking. Apple’s imaging research and Sony Semiconductor Solutions both emphasize that readout speed is as critical as sensor size for night zoom performance.

In practice, this revolution changes photographic behavior. Instead of defaulting to wide-angle night shots, users can isolate distant subjects, flatten urban layers, and create cinematic compression effects that were previously exclusive to full-frame cameras with fast telephoto glass. Night photography is no longer about capturing more light alone; it is about extracting more meaning from fewer photons.

The 200MP telephoto era marks the moment when night zoom stops being a novelty and becomes a primary creative tool. For gadget enthusiasts, this is not just an incremental upgrade but a redefinition of how smartphones see the dark from afar.

How AI and Neural ISPs Rebuild Detail in Extreme Darkness

In extreme darkness, modern smartphones no longer rely solely on collecting more light but instead focus on reconstructing missing information through AI-driven reasoning. **Neural ISPs fundamentally change the role of image processing, shifting from noise suppression to semantic restoration**, where the system infers what should exist in the scene based on learned visual patterns.

This transition becomes most visible at illumination levels below one lux, where traditional multi-frame stacking reaches its limits. Apple’s DarkDiff research, published by Apple researchers on arXiv, demonstrates how a diffusion model can be integrated directly into the ISP pipeline. Instead of averaging frames, the model treats noisy RAW data as an incomplete signal and iteratively refines it toward a plausible final image, guided by learned priors of textures, edges, and materials.

| Approach | Core Mechanism | Result in Extreme Darkness |
|---|---|---|
| Conventional ISP | Multi-frame averaging and denoise | Lower noise, but smeared fine detail |
| Neural ISP | Semantic inference and reconstruction | Readable text and preserved texture |
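The conventional multi-frame averaging approach can be illustrated with a small simulation. This is a deliberately idealized sketch: it assumes a static scene, perfectly aligned frames, and independent Gaussian noise, and all parameter values are arbitrary. Under those assumptions, averaging N frames reduces noise by about the square root of N. Misalignment and subject motion, which the simulation does not model, are where smeared detail tends to come from in practice.

```python
import random
import statistics


def simulate_stack_noise(num_frames: int, noise_sigma: float = 10.0,
                         num_pixels: int = 2000, seed: int = 0) -> float:
    """Residual noise (std dev) after averaging num_frames noisy frames.

    Assumes a flat, static scene and perfectly aligned frames.
    """
    rng = random.Random(seed)
    true_signal = 100.0
    stacked = []
    for _ in range(num_pixels):
        samples = [true_signal + rng.gauss(0.0, noise_sigma)
                   for _ in range(num_frames)]
        stacked.append(sum(samples) / num_frames)
    return statistics.pstdev(stacked)


for n in (1, 4, 16):
    print(f"{n:2d} frames -> residual noise ~ {simulate_stack_noise(n):.2f}")
```

The residual noise should fall from roughly 10 for a single frame to roughly 2.5 for sixteen frames, which shows both why stacking works and why its returns diminish: each halving of noise requires four times as many frames.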

According to Apple’s research team, DarkDiff operates in latent space rather than pixel space, allowing the system to distinguish between random sensor noise and meaningful structure. **This is why brick walls, fabric grain, and hair strands remain recognizable even when photon counts are critically low**, a scenario where classical pipelines collapse into watercolor-like artifacts.

On the Android side, Vivo’s BlueImage chip illustrates a different but equally important path. By dedicating hardware to neural image processing, Vivo achieves real-time low-light reconstruction in video, something diffusion-based methods still struggle with due to computational cost. PhoneArena’s analysis notes that BlueImage processes color correction and noise modeling before full demosaicing, reducing error propagation in near-dark scenes.

This hardware-first design allows Vivo devices to maintain stable detail at 4K/60fps in lighting conditions where other phones drop frames or crush shadows. **The key advantage is temporal consistency**, as the neural ISP analyzes motion vectors across frames to prevent flicker and false detail generation, an issue often observed in aggressive AI enhancement.

Commentary from imaging researchers cited alongside Apple’s paper emphasizes that these systems do not “invent” detail arbitrarily. Instead, they operate within statistically constrained models trained on large datasets, producing results that are perceptually plausible even if not photon-perfect. For users, this means night images that align more closely with human visual memory rather than raw sensor output.

As neural ISPs mature, extreme darkness becomes less of a hard limit and more of a computational challenge. **What matters most is no longer how much light hits the sensor, but how intelligently the system interprets what little light is available**, redefining low-light photography as an exercise in applied machine perception.

Different Computational Approaches from Apple, Vivo, Google, and Samsung

When it comes to computational photography, Apple, Vivo, Google, and Samsung are clearly solving the same low-light problem with very different philosophies. What unites them is the belief that modern smartphones are no longer passive image recorders, but active systems that interpret, reconstruct, and sometimes even predict visual information. **The divergence lies in where computation happens, how much control is given to AI, and how much agency remains with the user**.

Apple’s approach is best represented by its research project DarkDiff, which has been discussed in detail by Apple’s own imaging researchers and independent academic analysis on arXiv. Instead of treating noise as something to be removed, Apple reframes low-light RAW data as an incomplete signal that can be probabilistically restored. By integrating a diffusion model directly into the ISP pipeline, Apple allows the system to infer missing textures based on semantic understanding. **This means brick walls remain brick-like, hair retains strand-level structure, and text stays readable even in near darkness**, something traditional multi-frame noise reduction struggles to achieve.

Vivo takes a more hardware-centric computational route. With its in-house BlueImage imaging chip, Vivo avoids relying solely on the generic ISP inside Snapdragon processors. According to technical briefings covered by PhoneArena and hands-on tests from experienced reviewers, this dedicated silicon enables real-time night video processing at 4K and high frame rates. **The key difference is latency**: Vivo prioritizes instant, continuous computation, allowing users to see the final look while recording, rather than waiting for post-capture processing.

| Brand | Primary Computation Layer | Night Imaging Strength |
|---|---|---|
| Apple | On-device diffusion ISP | Extreme low-light texture recovery |
| Vivo | Dedicated imaging chip | Real-time night video clarity |
| Google | Cloud-assisted processing | High-quality night video via offloading |
| Samsung | User-controlled RAW pipeline | Maximum flexibility for experts |

Google’s computational strategy remains uniquely hybrid. With Pixel devices, features like Night Sight are still processed on-device, but Video Boost deliberately uploads footage to Google’s data centers. This off-device processing, confirmed by Google’s own documentation and widely analyzed by imaging specialists, allows far heavier algorithms to run without thermal or battery constraints. **The trade-off is time and connectivity**, but the benefit is night video quality that rivals dedicated cameras, something no fully on-device system can currently match.

Samsung, on the other hand, positions computation as a tool rather than a decision-maker. Through the Expert RAW app, Samsung exposes multi-frame HDR stacking, noise reduction, and tone mapping while preserving 16-bit RAW output. As Samsung’s official imaging guides explain, AI assists with alignment and exposure blending, but the final look is left intentionally unfinished. **This makes Samsung’s approach less magical, but far more transparent**, appealing to photographers who want to shape night images themselves rather than accept an AI’s aesthetic judgment.

Seen together, these four approaches illustrate a crucial point for advanced users. **There is no single “best” computational photography model**. Apple prioritizes perceptual realism through generative inference, Vivo focuses on speed and live feedback, Google leverages cloud-scale computation, and Samsung emphasizes creative control. Understanding these differences allows users to choose not just a phone, but an entire philosophy of how darkness should be transformed into an image.

Professional Mobile Workflows: RAW Files, AI Denoising, and Editing Tools

Professional mobile photography in 2025–2026 is no longer defined by what happens at the moment of capture, but by what happens after the shutter is pressed. **Shooting in RAW has become the foundation of serious mobile workflows**, especially for night scenes where noise, limited dynamic range, and color instability are unavoidable challenges. Modern flagship smartphones now output 12-bit or even 16-bit RAW files, preserving linear sensor data that would otherwise be irreversibly compressed in HEIF or JPEG.
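Bit depth matters most in the shadows, and a short calculation shows why. The sketch below assumes an idealized linear encoding with no noise or black-level offset, in which each stop below clipping receives half as many code values as the stop above it. The function name is illustrative.

```python
def levels_in_stop(bit_depth: int, stops_below_clipping: int) -> int:
    """Distinct code values in the k-th stop below clipping for linear data.

    k = 1 is the brightest stop, which occupies the top half of all values.
    """
    if not 1 <= stops_below_clipping <= bit_depth:
        raise ValueError("stop index must be between 1 and the bit depth")
    return 2 ** (bit_depth - stops_below_clipping)


for depth in (12, 16):
    print(f"{depth}-bit: brightest stop has {levels_in_stop(depth, 1)} levels, "
          f"10th stop down has {levels_in_stop(depth, 10)} levels")
```

In this idealized model, a 12-bit file has only 4 code values left ten stops below clipping, while a 16-bit file has 64. That difference is one reason deep shadows in higher bit-depth RAW files tolerate heavier lifting before they posterize.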

According to analyses by DXOMARK and Adobe research teams, RAW files from recent 1-inch sensor phones retain up to two stops more recoverable shadow detail compared to processed outputs. This extra headroom is critical when working with neon signage, streetlights, and deep shadows in the same frame. **The goal is not just flexibility, but control**, allowing photographers to decide how much noise, texture, and contrast should remain.

| Workflow Stage | Tool Type | Primary Advantage |
|---|---|---|
| Pre-processing | DxO PureRAW 5 | Sensor-level AI denoising and lens correction |
| Editing | Adobe Lightroom | Fine tonal and color control within one ecosystem |

DxO’s DeepPRIME XD2 engine is widely regarded by imaging scientists as one of the most accurate AI denoising systems available today. Because it operates before demosaicing, it removes noise while preserving edge integrity, an approach validated in multiple independent comparisons. **Many professionals now treat AI denoising as a mandatory preprocessing step**, not a creative effect.

On the mobile side, AI-assisted long-exposure apps are redefining what is possible without a tripod. Photography publications such as PetaPixel highlight how neural stabilization can align dozens of micro-frames in real time, effectively simulating exposures of 20–30 seconds while handheld. This bridges the gap between spontaneous street shooting and deliberate long-exposure aesthetics.

Ultimately, editing tools are no longer about fixing mistakes, but about interpreting data. **The photographer’s skill now lies in knowing how far to trust AI, and when to stop**, ensuring that night images remain believable, textured, and emotionally grounded rather than overly synthetic.

Essential Accessories That Dramatically Improve Night Results

Night photography with smartphones has reached a level where the device alone already performs impressively, but **the right accessories dramatically improve consistency, creative control, and keeper rate**. This is especially true at night, where physics still matters and even advanced AI benefits from better input data. In practice, accessories do not replace computational photography but rather amplify its strengths.

From stabilization to optical control, essential accessories form a compact ecosystem that transforms a phone into a purpose-built night imaging tool. According to testing methodologies used by DXOMARK and insights published by professional reviewers at outlets such as PetaPixel, stabilization and controlled light intake remain two of the most decisive factors in low-light image quality.

| Accessory Type | Primary Benefit | Night Shooting Impact |
|---|---|---|
| Compact Tripod | Physical stabilization | Reduces motion blur and AI misalignment |
| Gimbal | Dynamic stabilization | Smoother low-light video and cleaner frames |
| Optical Filters | Light shaping | Improved highlight control and cinematic glow |

A compact tripod remains the single most effective accessory for night stills. Even with sensor-shift OIS and multi-frame night modes, smartphones still rely on frame alignment accuracy. **Reducing micro-movements at the source allows AI stacking algorithms to preserve fine detail instead of smoothing it away**. Field tests published by imaging engineers consistently show sharper textures and lower false-detail artifacts when a phone is fully stabilized.

For users who focus on video, a motorized gimbal becomes essential. At night, electronic stabilization often increases ISO and introduces motion smearing. A gimbal offloads stabilization to hardware, allowing the camera to maintain slower shutter speeds and lower gain. Apple’s DockKit framework, introduced to developers and discussed by Apple’s imaging team, further enhances this by enabling native camera apps to benefit from real-time subject tracking without third-party software compromises.

Optical filters are frequently underestimated in smartphone workflows, yet they solve problems that software alone cannot. **Black mist filters gently diffuse point light sources**, reducing harsh LED highlights and restoring a filmic roll-off that algorithms tend to over-sharpen. Cinematographers interviewed by Digital Camera World note that even subtle diffusion physically alters photon spread before it hits the sensor, something no post-processing can perfectly replicate.

Variable ND filters play a different but equally important role. In night video, bright signage often forces shutter speeds far above the cinematic norm, resulting in choppy motion. By cutting light optically, ND filters allow shutter speeds around 1/50s for 24fps video, maintaining natural motion blur. The same principle is applied on professional cinema cameras and is frequently emphasized in discussions by ARRI and RED engineers.
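The shutter target and the required filter strength can both be estimated with a few lines of arithmetic. The sketch below assumes the common 180-degree shutter convention, which gives 1/48s at 24fps; 1/50s is the nearest setting on many cameras. The metered shutter speed of 1/400s is a hypothetical example, and the function names are illustrative.

```python
import math


def shutter_for_angle(fps: float, shutter_angle_deg: float = 180.0) -> float:
    """Exposure time in seconds for a given frame rate and shutter angle."""
    return (shutter_angle_deg / 360.0) / fps


def nd_stops_needed(metered_shutter_s: float, target_shutter_s: float) -> float:
    """Stops of light an ND filter must cut to reach the slower target shutter."""
    return math.log2(target_shutter_s / metered_shutter_s)


target = shutter_for_angle(24)        # 180-degree shutter at 24fps
metered = 1 / 400                     # hypothetical speed forced by bright signage
print(f"Target shutter: 1/{1 / target:.0f}s")
print(f"ND strength needed: about {nd_stops_needed(metered, target):.1f} stops")
```

In this example, moving from 1/400s to 1/48s requires cutting roughly three stops of light, which corresponds to an ND8 setting on a typical variable ND filter.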

Another overlooked accessory category is mounting and handling. Magnetic mounts and quick-release systems reduce setup time, which matters at night when light conditions change rapidly. Faster deployment increases the chance of capturing transient moments such as passing headlights or shifting reflections after rain, a factor often mentioned by urban night photographers interviewed by Japan-based photography journals.

Ultimately, essential accessories are not about adding bulk but about **strategic precision**. Each item addresses a specific physical limitation: movement, uncontrolled light, or inefficient workflow. When chosen carefully, these tools integrate seamlessly with modern smartphone cameras and unlock night results that feel deliberate rather than accidental.

Field Techniques and Etiquette for Urban Night Photography

Urban night photography is not only a technical challenge but also a social activity that unfolds in shared public spaces. **How you behave on location directly affects both your results and your ability to keep shooting**. In dense cities, especially in places like Tokyo, field techniques and etiquette are inseparable from image quality.

From a practical standpoint, positioning is everything at night. Modern smartphones with multi-frame night modes rely on stability and scene consistency. Standing where pedestrian flow is predictable reduces frame misalignment and ghosting during computational stacking. DXOMARK field evaluations have repeatedly noted that even advanced night modes struggle when unexpected motion enters the frame, making location choice a hidden but critical variable.

| Situation | Recommended Technique | Reason |
|---|---|---|
| Crowded sidewalks | Handheld, short exposures | Avoids obstructing foot traffic |
| Open plazas | Low-profile mini tripod | Improves sharpness without disruption |
| Observation decks | Body-braced shooting | Tripods often prohibited |

Etiquette around tripods deserves special attention. **In Japan, the absence of a prohibition sign does not imply permission**. Tourism authorities and venue operators emphasize that tripods are treated as potential obstacles, particularly after dark when visibility is low. According to guidance published by the Japan National Tourism Organization, photographers are expected to prioritize safety and flow over personal convenience, even in public areas.

This reality has shaped a distinctly urban technique: using architectural features as stabilizers. Resting a phone against railings, glass frames, or even vending machines allows for longer exposures while remaining unobtrusive. Field tests by mobile photography reviewers have shown that this method can recover up to 70 percent of the sharpness benefit of a tripod when combined with optical stabilization.

Another often overlooked aspect is light discipline. Artificial lighting in cities is complex, and additional light sources can be disruptive. Using LED panels or phone flashes in public streets is widely considered poor manners. Researchers studying urban visual comfort have found that sudden point light sources at night increase perceived glare and stress for pedestrians, reinforcing why available light photography is both aesthetically and socially preferable.

Respect for privacy is equally important. High-resolution sensors and AI enhancement make faces recognizable even in low light. **Blurring motion through longer exposures is not just an artistic choice but a privacy-conscious one**. ExpertPhotography’s etiquette guidelines explicitly recommend techniques that anonymize passersby, particularly when images are shared online.

Communication also plays a role. A simple nod or brief verbal acknowledgment can defuse tension when photographing near others. Urban sociologists note that nonverbal cues signal intent; photographers who appear deliberate and calm are less likely to be perceived as intrusive. This soft skill, while invisible in EXIF data, often determines how long you can work a location.

Ultimately, successful urban night photography rewards those who blend into the city rather than dominate it. **Mastery lies in capturing light without claiming space**, allowing technology, awareness, and courtesy to work together in producing images that feel both vivid and respectful.

References

- DXOMARK: Huawei Pura 80 Ultra Camera Test

- Sony Semiconductor Solutions: Advanced CMOS Sensor for Mobile Applications with High Dynamic Range

- 9to5Mac: Apple Study Shows How AI Can Improve Low-Light Photos

- Android Authority: HUAWEI Pura 80 Ultra Preview: Camera Test and Analysis

- vivo Official Website: vivo X300 Pro – 200MP ZEISS APO Telephoto Camera

- PetaPixel: ReeHeld App Uses AI to Shoot Sharp Long-Exposure Photos on iPhone