If you stream 4K on Netflix, upload 8K footage to YouTube, or edit 10-bit video on a flagship smartphone, you are already living inside a codec revolution. In 2026, video compression is no longer a background technology—it directly shapes battery life, cloud costs, network congestion, and the visual quality you experience every day.

For more than two decades, H.264 has powered everything from Blu-ray to early smartphones, thanks to its unmatched compatibility. However, as global video traffic continues to surge and immersive formats like HDR, 8K, and XR become mainstream, its limitations are increasingly visible.

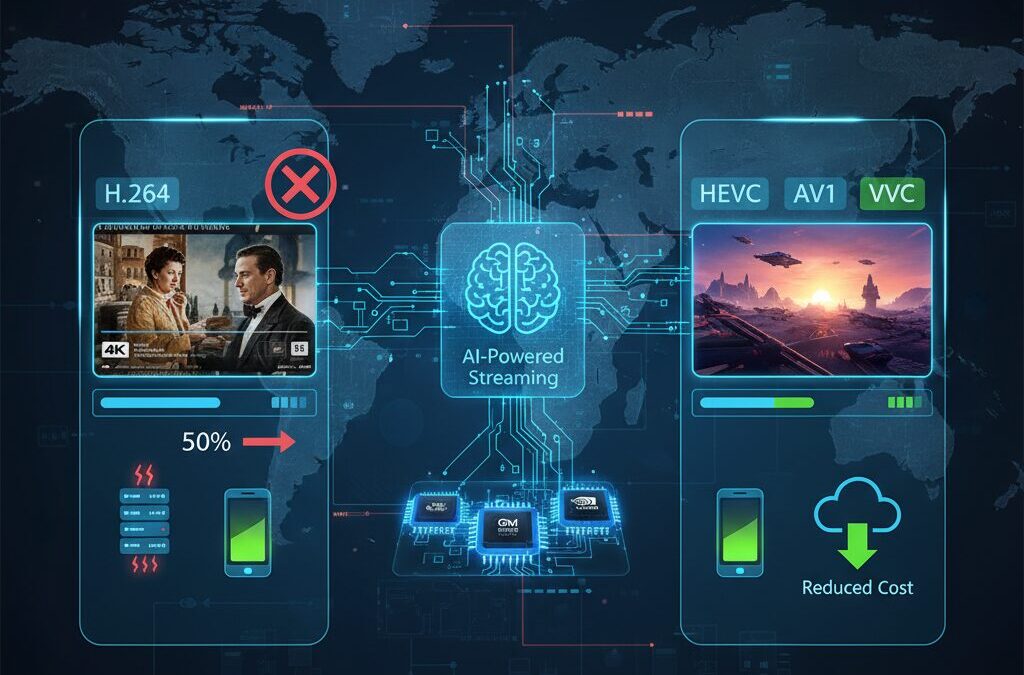

This article explores how HEVC redefined efficiency with up to 50% bitrate savings over H.264, how AV1 is reshaping internet streaming, why VVC and AV2 are fighting for the next standard, and how cutting-edge chips from Apple, Qualcomm, and NVIDIA are transforming real-world performance. By the end, you will clearly understand which codec matters for your next device, workflow, or streaming strategy in 2026.

- Why Video Codecs Matter More Than Ever in 2026

- The Enduring Dominance of H.264: Compatibility as Strategic Infrastructure

- HEVC’s Technical Breakthrough: CTUs, Advanced Prediction, and Smarter Filtering

- Bitrate and Quality Benchmarks: 1080p, 4K, and 8K in Measurable Numbers

- The Computational Trade-Off: Encoding Complexity vs Hardware Acceleration

- Market Growth and Multi-Codec Reality: From $2.6B to a $7.9B Ecosystem

- Licensing Shake-Up in 2026: Access Advance, Patent Pool Consolidation, and Royalty Strategy

- Apple A20 and 2nm Silicon: Power Efficiency Meets 8K HEVC and AV1 Encoding

- Snapdragon 8 Elite Gen 5 and Samsung’s APV Codec: A New Pro Video Contender

- NVIDIA RTX 50 Series: 4:2:2 10-bit Hardware Decoding and Creator Workflows

- Broadcast Innovation: NHK, VVC (H.266), and the Road to 8K Terrestrial Delivery

- VVC vs AV1 vs AV2: The Battle Between Licensed and Royalty-Free Futures

- AI-Driven Compression: NNVC, Semantic Coding, and the Path Toward H.267

- Smart Buying Guide for 2026: Choosing Devices for Streaming, Shooting, and Editing

- References

Why Video Codecs Matter More Than Ever in 2026

In 2026, video codecs are no longer just technical specifications hidden in developer documentation. They directly shape how we watch, create, and distribute content every single day. As global video traffic continues to surge and 4K, 8K, HDR, and XR become mainstream, the efficiency of a codec now determines bandwidth costs, battery life, cloud infrastructure spending, and ultimately user experience.

According to multiple market analyses, the next-generation video codec market was valued at around $2.6 billion in 2024 and is projected to approach $7.9 billion by 2030, reflecting a CAGR of over 20%. This is not incremental growth. It signals a structural shift in how the media ecosystem operates, driven by higher resolutions and immersive formats that legacy compression methods struggle to handle efficiently.

The pressure comes primarily from resolution inflation. Full HD once defined premium quality. Today, 4K streaming is standard on major platforms, 8K capture is available on consumer devices, and immersive 360-degree formats are being tested for next-generation broadcasting. Research comparing H.264 and HEVC shows that HEVC can reduce bitrate by roughly 50% while maintaining similar perceptual quality, a difference that becomes dramatic at 4K and above.

| Resolution | H.264 Bitrate | HEVC Bitrate |
|---|---|---|
| 1080p | ~6 Mbps | ~3 Mbps |
| 4K | ~32 Mbps | ~15–16 Mbps |
| 8K | Not recommended | 80 Mbps+ |

For streaming platforms, this translates into millions of dollars in annual CDN savings. For mobile users, it means fewer buffering interruptions and lower data consumption. For cloud gaming and XR services, where low latency and high fidelity must coexist, codec efficiency directly affects service viability.

The BBC’s subjective quality studies have indicated that HEVC can achieve nearly 59% bitrate savings compared to H.264 at equivalent perceived quality. This matters because networks are finite. Even with 5G maturity, spectrum and infrastructure costs remain real constraints. Efficient compression effectively expands network capacity without laying a single additional cable.

Hardware evolution further amplifies the importance of codecs. Modern SoCs and GPUs now include dedicated encode/decode engines for HEVC and AV1. Without hardware acceleration, advanced codecs demand far more computation, with encoding sometimes up to 10 times as complex as it is for older standards. With hardware support, however, these advanced formats become practical at scale.

We are also entering a multi-codec era. AV1 has gained strong momentum in internet streaming due to its royalty-free model, while VVC promises even greater compression gains for broadcasting and high-end applications. As Nokia and other industry analysts note, the future of video compression is not about a single winner but about optimized deployment across use cases.

In 2026, codecs sit at the intersection of media, semiconductors, telecom, and cloud economics. They influence device purchasing decisions, platform compatibility, and long-term infrastructure planning. Understanding them is no longer optional for gadget enthusiasts or content creators. It is essential to navigating the modern digital landscape.

The Enduring Dominance of H.264: Compatibility as Strategic Infrastructure

More than two decades after its standardization in 2003, H.264 remains deeply embedded in the foundations of the digital video ecosystem. While newer codecs promise dramatic bitrate savings, H.264’s true power lies not in efficiency, but in universality. It functions as strategic infrastructure—quiet, reliable, and everywhere.

From Blu-ray Disc to early YouTube, from DSLR cameras to the first wave of smartphones, H.264 became the lingua franca of video. Its adoption coincided with the rise of broadband and mobile computing, effectively standardizing how visual information travels across networks. This historical timing created a network effect that no successor has fully displaced.

As industry analyses such as LiveAPI’s 2026 codec comparison note, H.264 is still the only codec that guarantees hardware-level decoding across legacy browsers, low-cost smart TVs, and devices manufactured more than five years ago. That guarantee is not a technical footnote—it is a business safeguard.

| Category | H.264 Support Level (2026) | Strategic Impact |
|---|---|---|
| Legacy browsers | Near-universal | Ensures baseline playback |
| Low-cost smart TVs | Hardware decode standard | Reduces support costs |
| Older smartphones | Guaranteed acceleration | Stable battery performance |

For streaming platforms, this compatibility translates directly into risk mitigation. Delivering video at scale requires a dependable “base layer” that works regardless of device age or regional hardware constraints. H.264 fulfills this role with unmatched predictability. Even platforms aggressively deploying AV1 or HEVC maintain H.264 fallback streams to prevent playback failures.
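The fallback behaviour described above can be sketched in a few lines of Python. This is a minimal illustration, not any platform's actual player logic: the preference order, the `pick_stream` function, and the idea that a device reports a set of hardware decoders are all assumptions made for the example.

```python
# Hypothetical preference order: most efficient codec first, H.264 last.
CODEC_PREFERENCE = ["av1", "hevc", "h264"]


def pick_stream(hw_decoders, available_renditions):
    """Return the first preferred codec that is offered and hardware-decodable.

    hw_decoders: set of codec names the device reports hardware decode for.
    available_renditions: set of codec names the platform has encoded.
    """
    for codec in CODEC_PREFERENCE:
        if codec in available_renditions and codec in hw_decoders:
            return codec
    # Base layer: assume the H.264 rendition always exists and always plays.
    return "h264"


# A recent flagship gets AV1; an older TV falls back to the H.264 base layer.
print(pick_stream({"av1", "hevc", "h264"}, {"av1", "hevc", "h264"}))
print(pick_stream({"h264"}, {"av1", "hevc", "h264"}))
```

The design choice worth noting is that H.264 is never conditional: newer codecs are opportunistic upgrades layered on a rendition that is assumed to work everywhere.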

There is also an economic dimension. Software-only decoding of newer codecs can increase CPU load and battery drain on older hardware. In contrast, dedicated H.264 silicon is ubiquitous. This reduces customer support incidents, minimizes return rates for connected devices, and stabilizes user experience in emerging markets where hardware refresh cycles are longer.

Market data reinforces this structural role. Even as the next-generation codec market grows at a CAGR above 20%, according to Research and Markets, multi-codec deployment has become the norm rather than the exception. H.264 persists not because it is the most efficient, but because it is the most interoperable.

For gadget enthusiasts and media professionals alike, this means one crucial thing: when absolute playback certainty matters—client presentations, public kiosks, cross-border distribution—H.264 remains the safest export format. Its dominance endures because compatibility, in the modern media economy, is infrastructure.

HEVC’s Technical Breakthrough: CTUs, Advanced Prediction, and Smarter Filtering

HEVC’s leap over H.264 is not incremental but architectural. Instead of refining the old macroblock model, it rethinks how each frame is partitioned, predicted, and filtered. The result is a codec that achieves roughly 50% bitrate savings at equivalent subjective quality, as widely reported in comparative analyses and BBC-led evaluations.

The core of this breakthrough lies in three pillars: larger and flexible coding units, dramatically expanded prediction modes, and smarter in-loop filtering. Together, these elements allow HEVC to adapt to content complexity with surgical precision.

Coding Tree Units: From Fixed Blocks to Content-Aware Partitioning

H.264 relies on fixed 16×16 macroblocks. HEVC replaces them with Coding Tree Units (CTUs) of up to 64×64 pixels, which can be recursively split using a quad-tree structure into coding units as small as 8×8. This flexibility lets the encoder match block size to local image content.

| Feature | H.264 | HEVC |
|---|---|---|
| Basic Unit | 16×16 Macroblock | Up to 64×64 CTU |
| Partitioning | Limited sub-blocks | Recursive quad-tree |
| Adaptation | Moderate | Highly content-aware |

In flat regions such as skies or walls, large CTUs reduce header overhead and preserve bitrate. In textured areas like foliage or human faces, finer splits maintain detail. According to industry comparisons, this spatial adaptability is a primary reason HEVC scales so efficiently to 4K and beyond.
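To make the quad-tree idea concrete, here is a toy Python sketch of content-aware partitioning. It is deliberately simplified: real HEVC encoders decide splits through rate-distortion optimization, whereas this example swaps in a plain variance threshold. The function names, the threshold value, and the synthetic frame are all assumptions for illustration.

```python
def variance(block):
    """Population variance of a 2-D list of pixel values."""
    values = [p for row in block for p in row]
    mean = sum(values) / len(values)
    return sum((p - mean) ** 2 for p in values) / len(values)


def split_ctu(frame, x, y, size, min_size=8, threshold=25.0):
    """Recursively split a square block; return a list of (x, y, size) leaves.

    A block is split into four quadrants when its variance exceeds
    `threshold` (a stand-in for a real encoder's cost decision) and it is
    still larger than `min_size`.
    """
    block = [row[x:x + size] for row in frame[y:y + size]]
    if size <= min_size or variance(block) <= threshold:
        return [(x, y, size)]
    half = size // 2
    leaves = []
    for dy in (0, half):
        for dx in (0, half):
            leaves += split_ctu(frame, x + dx, y + dy, half, min_size, threshold)
    return leaves


# Synthetic 64x64 frame: flat grey except a textured 16x16 top-left corner.
frame = [[128] * 64 for _ in range(64)]
for yy in range(16):
    for xx in range(16):
        frame[yy][xx] = (xx * 37 + yy * 91) % 256

leaves = split_ctu(frame, 0, 0, 64)
for leaf in leaves:
    print(leaf)
```

On this frame the flat regions stay as large 32×32 and 16×16 blocks, while only the textured corner is subdivided down to 8×8, which mirrors the sky-versus-foliage behaviour described above.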

Advanced Prediction: 35 Modes of Intelligence

Prediction is where HEVC sharpens its edge. For intra prediction, H.264 offers at most 9 modes per block (8 directional plus DC), while HEVC expands this to 35: 33 angular directions plus planar and DC. This allows the encoder to follow subtle gradients and diagonal structures with far greater fidelity.

For example, slanted architectural lines or complex shadows can be modeled more accurately before residual data is encoded. Fewer residual bits mean lower bitrate without visible degradation. Subjective tests referenced by industry publications indicate that viewers often perceive smoother textures at the same PSNR levels, underscoring that prediction accuracy directly affects human visual experience.
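The link between prediction accuracy and residual size can be shown with a small Python sketch. It implements only three simplified modes (DC, horizontal, vertical) rather than HEVC's angular set, and it scores candidates with a plain sum of absolute differences instead of a real encoder's rate-distortion cost. All names and sample values here are hypothetical.

```python
def predict(mode, top, left, n):
    """Build an n x n prediction from already-reconstructed neighbours.

    top:  n pixels from the row above the block.
    left: n pixels from the column to the left of the block.
    """
    if mode == "vertical":      # copy the row above downwards
        return [list(top) for _ in range(n)]
    if mode == "horizontal":    # copy the left column rightwards
        return [[left[r]] * n for r in range(n)]
    if mode == "dc":            # flat block at the mean of the neighbours
        dc = round((sum(top) + sum(left)) / (2 * n))
        return [[dc] * n for _ in range(n)]
    raise ValueError(f"unknown mode: {mode}")


def sad(block, pred):
    """Sum of absolute differences: a rough proxy for residual size."""
    return sum(abs(b - p) for br, pr in zip(block, pred) for b, p in zip(br, pr))


def best_mode(block, top, left):
    """Return (mode, costs) for the candidate with the smallest residual."""
    n = len(block)
    costs = {m: sad(block, predict(m, top, left, n))
             for m in ("dc", "horizontal", "vertical")}
    return min(costs, key=costs.get), costs


# Vertical stripes: every row repeats the pixels directly above the block.
top = [10, 200, 10, 200]
left = [10, 10, 10, 10]
block = [[10, 200, 10, 200] for _ in range(4)]

mode, costs = best_mode(block, top, left)
print(mode, costs)
```

The vertical mode reproduces the stripes exactly and leaves a zero residual, while the other two leave large errors that would have to be coded. A richer set of directions gives the encoder more chances to find a near-zero residual on slanted content.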

Smarter Filtering: Deblocking Plus SAO

Compression inevitably introduces artifacts. HEVC addresses this with a refined deblocking filter and the addition of Sample Adaptive Offset (SAO), an in-loop filter absent in H.264.

The deblocking filter reduces boundary discontinuities between blocks, while SAO statistically classifies pixels and applies offset corrections to luminance and chrominance values. This dual-layer filtering significantly suppresses ringing and banding, especially at aggressive compression levels.
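As a rough illustration of the "classify, then offset" idea, the following Python sketch mimics SAO's band-offset mode: the sample range is divided into 32 intensity bands and small corrections are applied to four consecutive bands. It omits edge-offset mode and the encoder-side search for good offsets, and the function name, parameters, and sample values are assumptions for the example.

```python
def sao_band_offset(samples, start_band, offsets, bit_depth=8):
    """Apply band-offset style corrections to a list of reconstructed samples.

    The sample range is divided into 32 equal bands. `offsets` holds one
    correction per consecutive band, beginning at `start_band`. Samples
    that fall outside those bands pass through unchanged.
    """
    shift = bit_depth - 5            # band index = top 5 bits of the sample
    max_val = (1 << bit_depth) - 1
    out = []
    for s in samples:
        k = (s >> shift) - start_band
        if 0 <= k < len(offsets):
            s = min(max_val, max(0, s + offsets[k]))  # clip to valid range
        out.append(s)
    return out


recon = [16, 18, 25, 33, 200]
filtered = sao_band_offset(recon, start_band=2, offsets=[2, -1, 0, 1])
print(filtered)  # [18, 20, 24, 33, 200]
```

Only samples in the targeted bands move, and only by a few code values, which is why this kind of filter can reduce banding cheaply without blurring the rest of the picture.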

The synergy between CTUs, advanced prediction, and intelligent filtering forms a tightly integrated pipeline. Rather than relying on brute-force bitrate, HEVC extracts structural and perceptual redundancy more effectively. That is why, in practical deployments, it consistently delivers around half the bitrate of H.264 for comparable visual quality, particularly in high-resolution workflows.

Bitrate and Quality Benchmarks: 1080p, 4K, and 8K in Measurable Numbers

When discussing video quality in 2026, resolution alone is no longer enough. What truly defines viewing experience is the relationship between resolution, bitrate, and codec efficiency.

For gadget enthusiasts and creators, understanding measurable bitrate benchmarks helps you decide whether your network, storage, and hardware are truly future-ready.

The higher the resolution, the more dramatically codec efficiency impacts real-world performance.

| Resolution | H.264 Target Bitrate | HEVC Target Bitrate | Average Bandwidth Reduction |
|---|---|---|---|
| 1080p (Full HD) | ~6 Mbps | ~3 Mbps | ≈50% |
| 4K (UHD) | ~32 Mbps | 15–16 Mbps | ≈51% |
| 8K (FUHD) | Not recommended | 80 Mbps+ | N/A |

At 1080p, the difference between H.264 and HEVC is large in relative terms but small in absolute ones. A drop from 6 Mbps to 3 Mbps halves bandwidth usage, yet on modern broadband a 3 Mbps saving rarely feels dramatic.

However, once you move to 4K, the gap becomes operationally critical. Streaming 4K in H.264 at around 32 Mbps stresses home networks and mobile data caps, while HEVC maintains comparable perceptual quality at roughly half that rate.

This efficiency gain directly translates into smoother playback under network congestion and significantly lower CDN distribution costs.

The BBC’s subjective quality evaluations (MOS testing) have shown that HEVC achieves similar perceived quality at nearly 59% lower bitrate compared to H.264 in controlled conditions.

This matters because objective metrics like PSNR do not always reflect human perception. What viewers care about is not mathematical similarity, but visual smoothness, texture retention, and absence of blocking artifacts.

Especially in high-motion 4K sports or dense foliage scenes, bitrate headroom determines whether compression artifacts appear.

At 8K, the conversation shifts entirely. H.264 is effectively impractical at this resolution due to extreme bandwidth requirements.

HEVC deployments for 8K commonly exceed 80 Mbps even with efficient encoding. That means sustained fiber connectivity or professional broadcast infrastructure is required.

In this range, every ten percentage points of compression efficiency saves roughly 8 Mbps or more per stream, making advanced codecs indispensable rather than optional.

1080p is forgiving. 4K is demanding. 8K is unforgiving.

Another measurable dimension is storage impact. Recording one hour of 4K video at 32 Mbps (H.264) consumes roughly 14.4 GB, while at 16 Mbps (HEVC) it drops to about 7.2 GB.

For creators shooting daily content, this difference compounds quickly across SSD arrays or cloud backups.

Over a 100-hour production cycle, that gap becomes hundreds of gigabytes saved.
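The storage figures above follow directly from the bitrates, and a short Python sketch makes the arithmetic reusable for other bitrates. It assumes a constant bitrate, decimal gigabytes (1 GB = 1000 MB), and ignores audio and container overhead. The function name is hypothetical.

```python
def gigabytes_per_hour(bitrate_mbps, hours=1.0):
    """Convert a video bitrate in megabits per second to decimal gigabytes."""
    megabits = bitrate_mbps * 3600 * hours
    return megabits / 8 / 1000   # 8 bits per byte, 1000 MB per GB


h264 = gigabytes_per_hour(32)
hevc = gigabytes_per_hour(16)
saved_100h = gigabytes_per_hour(32, 100) - gigabytes_per_hour(16, 100)

print(f"{h264:.1f} GB vs {hevc:.1f} GB per hour; "
      f"{saved_100h:.0f} GB saved over 100 hours")
# 14.4 GB vs 7.2 GB per hour; 720 GB saved over 100 hours
```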

Ultimately, bitrate benchmarks are not abstract statistics. They define whether your Wi-Fi can sustain stable playback, whether your NAS fills up prematurely, and whether your mobile data survives a weekend of streaming.

As resolutions climb from 1080p to 8K, measurable bitrate efficiency becomes the central metric that separates legacy workflows from next-generation media environments.

In 2026, understanding these numbers is as important as understanding resolution itself.

The Computational Trade-Off: Encoding Complexity vs Hardware Acceleration

HEVC’s impressive compression gains do not come for free. The very mechanisms that enable roughly 50% bitrate reduction over H.264—such as flexible CTU partitioning and expanded intra prediction modes—dramatically increase computational complexity.

According to technical comparisons cited by LiveAPI and other industry analyses, HEVC encoding can require up to 10 times the processing power of H.264. This gap is especially visible in software-based workflows, where CPUs must evaluate countless block partition combinations and prediction paths before selecting the optimal one.
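One way to get a feel for "countless block partition combinations" is to count how many distinct quad-tree layouts a single CTU admits. The Python sketch below counts coding-unit shapes only. It ignores prediction and transform choices, which multiply the search space further, and real encoders prune aggressively rather than testing every layout. The function name is an assumption for the example.

```python
def quadtree_partitions(depth):
    """Number of distinct quad-tree partitionings with at most `depth` split levels.

    A block is either left whole (1 way) or split into four quadrants,
    each of which is then partitioned independently.
    """
    if depth == 0:
        return 1
    return 1 + quadtree_partitions(depth - 1) ** 4


# A 64x64 CTU with an 8x8 minimum unit allows three split levels (64 -> 32 -> 16 -> 8).
print(quadtree_partitions(3))  # 83522
```

A fixed 16×16 macroblock has nothing comparable at this scale, which helps explain why exhaustive software search becomes expensive for HEVC.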

In practical terms, the trade-off can be summarized as follows.

| Codec | Encoding Complexity | Compression Efficiency | Software Burden |
|---|---|---|---|
| H.264 | Moderate | Baseline | Manageable on legacy CPUs |
| HEVC | Very High | ~50% bitrate saving | Heavy without hardware assist |

This imbalance explains why early HEVC adoption felt painful. On older PCs or entry-level devices, software decoding often led to frame drops and abnormal battery drain, a phenomenon noted in multiple codec ecosystem reports. The algorithm was efficient in bandwidth, but inefficient in raw computation.

The equation changed once silicon vendors embedded fixed-function video engines directly into their chips. Apple’s A-series and M-series processors, Qualcomm’s Snapdragon platforms, and NVIDIA’s NVENC blocks integrate dedicated HEVC encode/decode circuitry that bypasses general-purpose CPU cores.

Hardware acceleration converts computational complexity into silicon specialization. Instead of brute-forcing predictions in software, dedicated logic pipelines process CTU decisions and motion vectors in parallel, dramatically lowering latency and energy consumption.

The impact is measurable. As Puget Systems’ verification of RTX 50 series GPUs shows, modern hardware encoders can improve export performance by up to 60% over previous generations while handling demanding formats such as 4:2:2 10-bit in real time. What once required workstation-class CPUs can now run efficiently on consumer GPUs.

Energy efficiency is equally critical. TSMC’s 2nm-based mobile SoCs reportedly achieve 25–30% lower power consumption at equivalent performance levels compared to prior nodes. When paired with dedicated video engines, this enables 8K HEVC recording on mobile devices without thermal throttling—a scenario that would be unrealistic in pure software mode.

However, the trade-off never fully disappears. Hardware encoders are optimized for specific profiles and standards. When a new codec such as AV1 or VVC emerges, early adopters often fall back to software encoding until silicon catches up. This creates a temporary performance penalty that influences real-world deployment timelines.

Ultimately, the computational trade-off is not about choosing between complexity and speed. It is about architectural alignment. Advanced codecs push mathematical boundaries, while semiconductor innovation absorbs that complexity into specialized circuits.

For gadget enthusiasts and creators alike, understanding this balance clarifies why a device’s codec support on paper is only half the story. The real differentiator is whether that support is backed by dedicated hardware—because in modern video ecosystems, silicon is what turns theory into seamless experience.

Market Growth and Multi-Codec Reality: From $2.6B to a $7.9B Ecosystem

The next-generation video codec market is no longer a niche battleground for engineers. It is rapidly expanding from a $2.6 billion valuation in 2024 to a projected $7.9 billion ecosystem by 2030, according to Research and Markets, representing a remarkable 20.4% CAGR. This growth is not incremental. It reflects a structural transformation in how video is produced, delivered, and monetized.
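The growth rate can be sanity-checked from the two endpoint figures. The Python sketch below applies the standard compound annual growth rate formula to the rounded values quoted above, so the result lands close to, but not exactly on, the cited 20.4%. The function name is hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(2.6, 7.9, 2030 - 2024)
print(f"Implied CAGR: {rate:.2%}")
```

With rounded endpoints of $2.6B and $7.9B the implied rate comes out slightly above 20%, consistent with the reported figure once rounding of the market sizes is taken into account.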

Behind this surge lies a simple reality: bandwidth demand is compounding faster than infrastructure budgets. As 4K becomes mainstream and 8K, HDR, and XR experiences scale, compression efficiency directly translates into operational savings. Intel Market Research notes that cloud video streaming platforms are under continuous pressure to reduce delivery costs while maintaining quality, making advanced codecs not just technical upgrades but financial levers.

| Year | Market Size | CAGR |
|---|---|---|
| 2024 | $2.6B | — |
| 2030 (Projected) | $7.9B | 20.4% |

However, this expansion does not point to a single winner. We are firmly in a multi-codec era. Streaming giants deploy AV1 for royalty-free internet distribution. Broadcasters continue optimizing HEVC. Research institutions push VVC for future terrestrial upgrades. Device manufacturers selectively support combinations depending on silicon capabilities and licensing exposure.

Multi-codec deployment has become a risk management strategy. Platforms hedge against patent uncertainty, hardware fragmentation, and regional regulatory differences. This diversification reduces dependency on any one standard while maximizing reach across legacy and cutting-edge devices.

Regional dynamics reinforce this complexity. North America currently accounts for roughly 40% of the market, while Asia-Pacific holds about 25% and is expanding at the fastest rate. In bandwidth-sensitive markets such as Japan, where mobile data constraints remain a consumer concern, higher-efficiency codecs are not optional—they are competitive differentiators.

The economic impact extends beyond streaming. Cloud gaming, immersive XR, remote collaboration, and AI-enhanced media workflows all depend on compression efficiency. Each percentage point of bitrate reduction compounds across millions of concurrent streams, directly influencing CDN expenditure and energy consumption. Industry analyses increasingly frame codec choice as an ESG issue, linking efficient compression to lower data center power usage.

In practical terms, the jump from $2.6B to $7.9B signals more than vendor revenue growth. It indicates a maturing ecosystem of silicon providers, patent pools, streaming platforms, broadcasters, and AI-driven codec innovators. The market is no longer about replacing H.264 with a successor. It is about orchestrating multiple codecs simultaneously to balance cost, compatibility, performance, and strategic control.

For stakeholders deeply invested in media technology, this multi-codec reality defines the competitive landscape of 2026 and beyond. Growth is accelerating—but so is complexity. Success now depends on mastering interoperability rather than betting on a single compression champion.

Licensing Shake-Up in 2026: Access Advance, Patent Pool Consolidation, and Royalty Strategy

In 2026, the biggest catalyst for HEVC’s renewed momentum is not a new algorithm but a licensing reset. For years, manufacturers hesitated because HEVC royalties were fragmented across multiple patent pools, creating uncertainty around total cost and litigation exposure. That structural friction has now been fundamentally reduced.

The consolidation led by Access Advance marks a turning point in how video codec IP is packaged and monetized. In late 2025, Access Advance acquired the HEVC and VVC programs previously managed by Via LA, integrating them under a broader unified framework that includes HEVC Advance and VCL Advance.

According to industry reports, the program recorded a 100% renewal rate among existing HEVC licensees, including major technology vendors such as Google, Samsung, Sony, Apple, Huawei, and LG. This level of continuity sends a powerful signal to device makers and SoC vendors that the licensing landscape is now predictable rather than fragmented.

| Program | Scope | 2026 Strategic Impact |
|---|---|---|
| HEVC Advance | Core HEVC patent coverage | Stable renewal, rate certainty |
| VCL Advance | Expanded portfolio incl. former Via LA assets | Broader one-stop licensing |
| MCBA | Multi-codec bridging (HEVC + VVC) | Reduced overlap, bundled economics |

One of the most important tactical levers is the extended deadline through June 30, 2026. Companies signing before that date can lock in current royalty rates and caps through 2030, avoiding a 25% rate increase applicable to later entrants. This deadline effectively accelerates adoption decisions in H1 2026.

For chipset vendors and OEMs, this creates a clear ROI window. If a smartphone maker plans a 3–4 year product cycle, securing favorable HEVC/VVC terms today directly improves long-term gross margin forecasting. Royalty predictability becomes a competitive advantage, especially in high-volume markets.

At the same time, the consolidation sharpens the contrast with AV1 and future AV2, which operate under a royalty-free model. As noted by analysts covering the codec landscape, the industry is no longer debating compression efficiency alone; it is optimizing for total cost of ownership, including legal overhead and cross-licensing complexity.

In practical terms, 2026 transforms HEVC from a “technically superior but legally complex” codec into a commercially streamlined option. For broadcasters, hardware makers, and premium device brands, that shift reduces risk exposure and enables clearer multi-year roadmap planning—precisely what was missing during HEVC’s early adoption phase.

The result is not merely administrative simplification. It is a structural rebalancing of power between patent holders, platform giants, and device manufacturers, reshaping how next-generation codecs are evaluated: not just by bitrate savings, but by licensing architecture and strategic timing.

Apple A20 and 2nm Silicon: Power Efficiency Meets 8K HEVC and AV1 Encoding

Apple’s A20 marks a pivotal moment where process innovation directly reshapes mobile video creation. Built on TSMC’s 2nm (N2) node, it delivers a reported 25–30% reduction in power consumption at the same performance level compared with prior 3nm generations, alongside over 15% higher transistor density. This shift is not just about benchmarks; it fundamentally changes how 8K video can be captured and encoded on a handheld device.

For the first time, sustained 8K HEVC recording and real-time AV1 hardware encoding coexist within a thermally constrained smartphone envelope. That matters because, as industry analyses of next-generation codecs show, HEVC already enables roughly 50% bitrate savings over H.264 at equivalent quality. Encoding that efficiency at 8K without thermal throttling has historically required active cooling or external hardware.

| Process Node | Power Efficiency | Video Capability Impact |
|---|---|---|
| 3nm (N3E) | Baseline | Limited sustained 8K workloads |
| 2nm (N2) | 25–30% lower power | Sustained 8K HEVC, real-time AV1 encode |

The practical implication is endurance. Recording 8K/60fps in HEVC demands enormous compute throughput due to CTU partitioning, advanced motion estimation, and in-loop filters such as SAO. Historically, HEVC encoding could require up to 10× the computational load of H.264 in software implementations. By integrating a dedicated hardware encoder tightly coupled with the CPU, GPU, and NPU through wafer-level multi-chip module packaging, A20 minimizes energy wasted on data movement.

This architectural proximity is critical. Memory bandwidth and latency often bottleneck high-resolution encoding more than raw compute. With denser transistor layouts and improved interconnect efficiency, the A20 can feed its media engine fast enough to maintain stable frame pacing during 8K capture, even with HDR and high bit depth enabled.

AV1 support is equally strategic. According to industry reports on AV1’s hardware ecosystem, the codec has become central to 4K streaming across YouTube and Netflix due to its royalty-free model and superior compression efficiency. Real-time hardware encoding on-device means creators can shoot, compress, and upload in a distribution-ready format without cloud-side transcoding, reducing latency and server-side costs.

Thermal stability also enhances computational photography layers stacked on top of video encoding. Noise reduction, AI-based upscaling, and scene-aware bitrate allocation can run concurrently without forcing aggressive clock throttling. In practical terms, this allows longer continuous takes, more consistent brightness, and fewer dropped frames during complex scenes such as fireworks or fast motion—content types that traditionally stress encoders.

From a storage perspective, pairing 8K resolution with HEVC’s roughly 50% bitrate advantage over H.264 dramatically reduces file size inflation. When combined with AV1 for distribution, creators gain flexibility: HEVC for high-efficiency capture and editing compatibility, AV1 for platform-optimized delivery.

The A20 therefore represents more than a node shrink. It signals a convergence of silicon scaling and codec maturity, where power efficiency, compression science, and creator economics align. For gadget enthusiasts focused on mobile cinematography, this is the moment when 8K encoding becomes not experimental, but practical.

Snapdragon 8 Elite Gen 5 and Samsung’s APV Codec: A New Pro Video Contender

The Snapdragon 8 Elite Gen 5 marks a pivotal moment for Android video creation. With native hardware support for Samsung’s APV (Advanced Professional Video) codec, Qualcomm is positioning flagship smartphones as serious tools for professional-grade production.

According to Qualcomm’s official disclosures and industry coverage, this is the first mobile platform to implement APV acceleration at the silicon level. That single detail changes everything for creators who have long relied on HEVC for delivery and ProRes for heavy editing workflows.

For the first time, Android devices gain a high-efficiency, professional-grade codec designed to rival ProRes—without the traditional storage penalty.

APV was introduced by Samsung in 2023 and reached commercial readiness in 2026. It is engineered to balance editing resilience and storage efficiency—two goals that typically conflict in mobile production.

| Codec | Relative Storage Efficiency | Target Use |
|---|---|---|
| HEVC (H.265) | Baseline | Distribution, streaming |
| ProRes | Larger files | Professional editing |
| APV | ~20% smaller than HEVC; ~10% smaller than ProRes | Mobile pro capture |

Industry reports indicate that APV achieves roughly 20% better storage efficiency than HEVC while reducing file sizes by around 10% compared to ProRes, all while supporting up to 8K resolution, 10-bit to 16-bit color depth, and 4:4:4 chroma sampling. That specification alone signals clear professional intent.

The significance of hardware support inside Snapdragon 8 Elite Gen 5 should not be underestimated. As seen historically with HEVC adoption, software-only encoding dramatically increases power draw and thermal output. By integrating APV directly into the video processing pipeline, Qualcomm enables sustained 8K capture without the rapid battery drain that typically limits mobile production sessions.

Equally important is ecosystem alignment. Google has committed to native APV support in Android 16, while companies such as Adobe and Blackmagic Design are aligning their tools with the format. This mirrors the early stages of HEVC acceleration years ago, but with a stronger creator-first positioning.

In practical terms, this means an Android flagship powered by Snapdragon 8 Elite Gen 5 can record high-bit-depth footage suitable for color grading, transfer it directly into professional editing software, and retain manageable storage footprints. For mobile filmmakers, that removes one of the last structural advantages held by proprietary alternatives.

APV does not aim to replace HEVC for streaming; it aims to redefine what “mobile pro video” means. By separating capture optimization from distribution efficiency, Qualcomm and Samsung are carving out a new layer in the codec stack—one optimized for creators rather than bandwidth alone.

As next-generation codecs like AV1 and VVC continue to compete on delivery efficiency, Snapdragon 8 Elite Gen 5 and APV shift the conversation toward production quality. For gadget enthusiasts who care about real-world creative power, this is not just another spec bump—it is a structural evolution in how Android devices handle serious video work.

NVIDIA RTX 50 Series: 4:2:2 10-bit Hardware Decoding and Creator Workflows

The NVIDIA RTX 50 Series marks a decisive shift for creators working with modern mirrorless and cinema cameras. With its Blackwell architecture and next-generation NVENC/NVDEC engines, the lineup finally delivers full hardware decoding for H.264 and HEVC 4:2:2 10-bit, a format that has become standard in professional acquisition.

For years, 4:2:2 10-bit footage posed a bottleneck. While 4:2:0 streams were widely accelerated on GPUs, 4:2:2 material often fell back to CPU decoding, causing timeline stutter, dropped frames, and excessive power draw during editing. The RTX 50 Series removes that constraint at the hardware level.

| GPU Model | NVENC Engines | 4:2:2 10-bit Decode | 8K Support |
|---|---|---|---|
| RTX 5090 | 3 | Full Hardware | Up to 8K 60fps+ |
| RTX 5080 | 2 | Full Hardware | Yes |
| RTX 4090 | 2 | 4:2:0 Only | Yes |

According to verification testing by Puget Systems, export speeds on the RTX 5090 improve by up to 60% compared to the RTX 4090, and up to 4× versus the RTX 3090 in certain encoding workloads. These gains are not theoretical; they translate directly into shorter render queues and faster client turnaround.

The practical impact on creator workflows is substantial. When editing 4K or 8K 4:2:2 10-bit HEVC footage in applications such as DaVinci Resolve or Adobe Premiere Pro, real-time playback with color grading and LUTs applied becomes consistently achievable without proxy generation.

This matters especially for hybrid shooters and small production teams. Many current cameras default to 4:2:2 10-bit internal recording to preserve color fidelity for grading. Without GPU acceleration, editors were forced to transcode into intermediate formats, increasing storage consumption and delaying delivery.

The triple NVENC configuration on the RTX 5090 also enables parallel encoding tasks. Creators can export a master file, generate social media cuts, and stream simultaneously, all leveraging hardware acceleration. For multi-cam 8K projects, the hardware decode engines play the complementary role, reducing timeline lag and stabilizing playback during angle switching.

Importantly, this advancement aligns with the broader industry shift toward high-efficiency codecs such as HEVC and AV1. As higher bit-depth and chroma fidelity become standard, GPU-level decode support is no longer optional. With the RTX 50 Series, NVIDIA positions the GPU not merely as a rendering device, but as the central engine of modern creator pipelines.

Broadcast Innovation: NHK, VVC (H.266), and the Road to 8K Terrestrial Delivery

Japan’s roadmap toward next-generation terrestrial broadcasting is being shaped decisively by NHK Science & Technology Research Laboratories. At the center of this strategy is VVC (H.266), positioned as the technological bridge between today’s HEVC-based 8K satellite broadcasting and tomorrow’s bandwidth-constrained terrestrial delivery.

According to Japan’s Ministry of Internal Affairs and Communications, spectrum efficiency is the single most critical constraint in upgrading digital terrestrial television. Without a major leap in compression performance, 4K and especially 8K over terrestrial networks remain impractical. This is precisely where VVC enters the equation.

Why VVC Matters for Terrestrial 8K

| Codec | Relative Bitrate (Same Subjective Quality) | Implication for Terrestrial |
|---|---|---|
| HEVC (H.265) | Baseline | Limited headroom for 8K |
| VVC (H.266) | ~50% lower than HEVC | Enables realistic 4K/8K trials |

NHK’s own experimental evaluations have demonstrated that VVC can achieve nearly 50% bitrate reduction compared to HEVC at equivalent subjective quality. In highly complex scenes such as fireworks, dense crowds, or rapid motion sports footage—traditionally weak points for compression—VVC significantly reduces block artifacts and mosquito noise.

This improvement is not incremental. Halving the bitrate means a broadcaster can either spend the same bandwidth on visibly higher picture quality or hold quality constant and free roughly half of that capacity for additional services.
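To make the spectrum side of that trade-off concrete, the sketch below counts how many services of a given bitrate fit into a fixed channel payload. The 30 Mbps payload and the 28 Mbps HEVC service bitrate are hypothetical placeholders chosen for illustration, not NHK figures; only the roughly 50% saving comes from the text above.

```python
def services_per_channel(payload_mbps: float, service_mbps: float) -> int:
    """Number of whole services that fit into a fixed channel payload."""
    if payload_mbps <= 0 or service_mbps <= 0:
        raise ValueError("bitrates must be positive")
    return int(payload_mbps // service_mbps)


def reduced_bitrate(reference_mbps: float, saving: float = 0.5) -> float:
    """Bitrate after applying a fractional saving to a reference bitrate."""
    if not 0.0 <= saving < 1.0:
        raise ValueError("saving must be in [0, 1)")
    return reference_mbps * (1.0 - saving)


# Hypothetical numbers, for illustration only.
PAYLOAD_MBPS = 30.0  # assumed usable payload of one terrestrial channel
HEVC_MBPS = 28.0     # assumed bitrate of one HEVC-coded service

hevc_count = services_per_channel(PAYLOAD_MBPS, HEVC_MBPS)
vvc_count = services_per_channel(PAYLOAD_MBPS, reduced_bitrate(HEVC_MBPS))
print(f"HEVC services: {hevc_count}, VVC services: {vvc_count}")
```

Under these assumed inputs the same channel carries two VVC services instead of one HEVC service. Real planning would also account for audio, signaling, and error-correction overhead, which this sketch ignores.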

NHK has conducted large-scale outdoor transmission tests in metropolitan environments such as Tokyo and Nagoya. These tests simulate real-world multipath interference and signal attenuation, conditions far harsher than laboratory setups. The results indicate that VVC-based encoding maintains visual stability even under fluctuating signal strength, a crucial requirement for mobile and indoor reception.

Importantly, NHK is not merely adopting VVC—it has actively contributed technologies to the international standardization process. Several proposals from Japanese researchers were incorporated into the final H.266 specification, reinforcing Japan’s influence in global broadcast engineering.

Another dimension of this innovation lies in system integration. Terrestrial 8K delivery will require coordination among:

- Advanced modulation schemes

- Next-generation error correction

- High-efficiency video coding (VVC)

VVC acts as the compression backbone within this broader transmission ecosystem. Without it, modulation gains alone would not close the bandwidth gap.

While some analysts, including industry voices cited by Nokia and FlatpanelsHD, question whether VVC can achieve mass-market streaming dominance due to licensing complexity, the broadcast sector operates under different economics. Public broadcasters prioritize deterministic quality, long lifecycle stability, and regulatory alignment—areas where standardized codecs like VVC maintain strategic advantage.

NHK’s long-term vision extends beyond conventional television. Demonstrations at recent NHK Tech Expo events showcased immersive ultra-high-resolution environments, including dome-based displays and ultra-wide capture systems. Although experimental 30K immersive formats remain research-stage, the compression lessons learned feed directly back into terrestrial 8K planning.

In practical terms, the road to 8K terrestrial broadcasting will not happen overnight. Receiver upgrades, silicon-level VVC decoding support, and regulatory coordination are still evolving. However, the trajectory is clear: VVC is positioned as the enabling layer that transforms 8K from a satellite-exclusive showcase into a potentially nationwide terrestrial reality.

For technology enthusiasts watching Japan’s broadcast ecosystem, this is more than an incremental codec upgrade. It represents a structural shift—where compression science, spectrum policy, and hardware innovation converge to redefine what over-the-air television can deliver in the ultra-high-definition era.

VVC vs AV1 vs AV2: The Battle Between Licensed and Royalty-Free Futures

In 2026, the codec war is no longer just about compression efficiency. It is about business models, ecosystem control, and long-term strategic freedom. At the center of this battle stand VVC (H.266), AV1, and the emerging AV2, representing two fundamentally different futures: licensed versus royalty-free.

VVC inherits the traditional patent pool structure from its predecessors, while AV1 and AV2 are backed by the Alliance for Open Media with a royalty-free promise. This philosophical divide now shapes adoption decisions as much as technical performance does.

| Codec | Licensing Model | Main Backers |
|---|---|---|
| VVC (H.266) | Licensed (Patent Pools) | Broadcast, hardware vendors |
| AV1 | Royalty-free | Google, Netflix, Amazon, Apple |
| AV2 | Royalty-free (planned) | Alliance for Open Media |

Technically, VVC delivers impressive gains. According to research cited by Nokia and public broadcasting experiments in Japan, VVC can reduce bitrate by roughly 30–50% compared to HEVC under equivalent subjective quality. For bandwidth-constrained terrestrial broadcasting and 8K transmission, that advantage is not theoretical. It directly translates into channel capacity and infrastructure savings.

However, VVC faces a familiar challenge: licensing complexity. Even after HEVC patent pools were consolidated under Access Advance, the industry remembers the uncertainty that slowed HEVC adoption. Analysts quoted by FlatpanelsHD have even questioned whether VVC risks being “dead on arrival” outside specialized sectors.

AV1, in contrast, has already won the internet. As Visionular and multiple hardware ecosystem reports highlight, AV1 decoding is now supported across major browsers, operating systems, and modern chipsets. YouTube and Netflix actively prioritize AV1 for 4K streaming, leveraging its royalty-free model to scale globally without per-device fees.

The economic logic is simple: at hyperscale, even small royalties multiply into massive liabilities. For cloud platforms delivering billions of streams per day, a free codec is not just attractive. It is strategically essential.
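The scaling argument is plain multiplication, which a few lines can illustrate. In the sketch below, the per-unit fee, the unit volume, and the optional annual cap are all hypothetical values invented for the example; they are not the terms of any actual patent pool.

```python
from typing import Optional


def royalty_liability(units: int, fee_per_unit_usd: float,
                      annual_cap_usd: Optional[float] = None) -> float:
    """Total royalty for a number of units, optionally limited by a cap."""
    if units < 0 or fee_per_unit_usd < 0:
        raise ValueError("units and fee must be non-negative")
    total = units * fee_per_unit_usd
    if annual_cap_usd is not None:
        total = min(total, annual_cap_usd)
    return total


# Hypothetical inputs, for illustration only.
UNITS = 100_000_000  # assumed devices shipped per year
FEE_USD = 0.25       # assumed per-device royalty

uncapped = royalty_liability(UNITS, FEE_USD)
capped = royalty_liability(UNITS, FEE_USD, annual_cap_usd=10_000_000)
print(f"Uncapped: ${uncapped:,.0f}  Capped: ${capped:,.0f}")
```

Even a fee of a fraction of a dollar reaches tens of millions per year at this assumed volume, which is the intuition behind the royalty-free appeal.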

AV2 raises the stakes further. Announced as the next step beyond AV1, AV2 aims for substantial compression gains while preserving the royalty-free structure. Coverage in T3 and industry analysis from GreyB suggest that major streaming platforms are already evaluating early adoption pathways. If AV2 achieves meaningful efficiency improvements, it could neutralize VVC’s primary advantage.

The result is a bifurcated future. VVC is gaining traction in broadcast infrastructure, public media, and regions where controlled ecosystems and regulatory frameworks reduce licensing friction. AV1 dominates open internet streaming, and AV2 may strengthen that dominance.

For gadget enthusiasts and hardware buyers, the implication is clear. Devices optimized for AV1 decoding are future-proof for streaming. VVC support, meanwhile, may become critical in professional broadcast or next-generation terrestrial deployments.

This is not merely a codec comparison. It is a contest between centralized patent governance and open collaborative standardization. The winner will shape how video is financed, distributed, and monetized for the next decade.

AI-Driven Compression: NNVC, Semantic Coding, and the Path Toward H.267

Video compression is entering a structural transition from rule-based mathematics to data-driven intelligence. The research community now refers to this shift as AI-driven compression, and at its core are NNVC (Neural Network Video Coding), semantic coding, and the exploratory path toward H.267, often described as Beyond VVC.

According to industry analyses such as GreyB’s 2026 codec landscape report, early NNVC prototypes demonstrate over 25% additional bitrate reduction compared to VVC under similar subjective quality conditions. This is not a marginal gain. It represents a new optimization frontier driven by learned models rather than handcrafted prediction tools.

From Tool-Based Coding to Learned Representations

Traditional codecs like HEVC and VVC rely on block partitioning, motion vectors, transform coding, and in-loop filtering. NNVC replaces or augments these modules with neural networks trained end-to-end. Instead of selecting from predefined intra modes or transform kernels, the encoder learns how to represent content efficiently through latent feature spaces.

Research groups contributing to Beyond VVC have shown that neural intra prediction and neural loop filtering can outperform conventional filters, particularly in complex textures and low-bitrate scenarios. The gain becomes especially visible in high-resolution content where classical block structures struggle with fine detail continuity.

| Approach | Core Mechanism | Efficiency Potential |
|---|---|---|
| VVC | Advanced block partitioning + prediction tools | ~50% bitrate reduction vs HEVC |
| NNVC (Prototype) | Neural prediction + learned transforms | ~25% bitrate reduction vs VVC |
| Semantic Coding | Object-aware compression | Content-dependent, dynamic gains |
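The percentages in the table compound multiplicatively rather than adding up, which is easy to misread. The sketch below applies successive savings to a baseline; the 16 Mbps HEVC starting bitrate is a hypothetical figure, and the calculation assumes the headline savings stack cleanly, which real content may not honor.

```python
from typing import Iterable


def compound_bitrate(baseline_mbps: float, savings: Iterable[float]) -> float:
    """Apply successive fractional bitrate savings to a baseline."""
    rate = baseline_mbps
    for saving in savings:
        if not 0.0 <= saving < 1.0:
            raise ValueError("each saving must be in [0, 1)")
        rate *= 1.0 - saving
    return rate


HEVC_BASELINE_MBPS = 16.0  # hypothetical HEVC bitrate for a 4K stream

vvc = compound_bitrate(HEVC_BASELINE_MBPS, [0.50])         # VVC vs HEVC
nnvc = compound_bitrate(HEVC_BASELINE_MBPS, [0.50, 0.25])  # NNVC vs VVC
print(f"VVC: {vvc:.1f} Mbps, NNVC: {nnvc:.1f} Mbps")
print(f"NNVC saving vs HEVC: {1 - nnvc / HEVC_BASELINE_MBPS:.1%}")
```

With these inputs, a 25% gain on top of VVC corresponds to a 62.5% total reduction relative to HEVC, not 75%.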

Semantic Coding: Compressing Meaning, Not Just Pixels

Perhaps the most radical evolution is semantic coding. Instead of treating every pixel equally, AI models identify objects such as faces, text overlays, sky regions, or moving foreground elements. Compression resources are then allocated based on perceptual or contextual importance.

This approach aligns with research trends highlighted in discussions around H.267, where scene understanding becomes part of the codec pipeline. For example, a news broadcast could prioritize facial detail and on-screen captions while aggressively compressing static studio backgrounds. The encoder is no longer blind; it understands.
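The news-broadcast example can be sketched as a weighted bit-allocation problem. This is a deliberately simplified model, not the method of any standard or proposal: the region labels, area fractions, and importance weights are hypothetical, and real encoders typically express such priorities through per-block quantizer adjustments rather than explicit bit counts.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Region:
    label: str
    area_fraction: float  # share of the frame covered, between 0 and 1
    importance: float     # hypothetical perceptual weight, higher = more bits


def allocate_bits(regions: List[Region], frame_budget_bits: float) -> Dict[str, float]:
    """Split a frame's bit budget in proportion to area times importance."""
    weights = [r.area_fraction * r.importance for r in regions]
    total = sum(weights)
    if total <= 0:
        raise ValueError("at least one region needs a positive weight")
    return {r.label: frame_budget_bits * w / total
            for r, w in zip(regions, weights)}


# Hypothetical segmentation of a news frame.
news_frame = [
    Region("face", area_fraction=0.10, importance=4.0),
    Region("captions", area_fraction=0.05, importance=4.0),
    Region("background", area_fraction=0.85, importance=1.0),
]

allocation = allocate_bits(news_frame, frame_budget_bits=1_000_000)
for label, bits in allocation.items():
    print(f"{label:>10}: {bits / 1_000_000:.1%} of the frame budget")
```

In this toy model the face covers 10% of the frame but receives a noticeably larger share of the bits, at the expense of the static background.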

This represents a philosophical shift: compression moves from signal reconstruction to meaning reconstruction.

The Hardware Factor: Why 2026 Is Different

AI-driven compression would have been impractical a decade ago. In 2026, however, consumer SoCs integrate dedicated NPUs capable of accelerating neural inference in real time. As semiconductor roadmaps continue toward denser nodes and heterogeneous packaging, neural modules can coexist with traditional video pipelines without catastrophic power penalties.

This convergence makes H.267 technically plausible. While standardization is still in exploratory phases, the direction is clear: hybrid architectures combining conventional coding tools with neural enhancement layers. Early proposals do not abandon compatibility; they aim to embed neural components selectively, preserving deployability.

The path toward H.267 is therefore evolutionary rather than disruptive. VVC established the efficiency baseline. NNVC extends it. Semantic coding reframes the objective. Together, they signal that the next era of video compression will be defined less by block sizes and more by learned intelligence embedded directly into the codec itself.

Smart Buying Guide for 2026: Choosing Devices for Streaming, Shooting, and Editing

In 2026, smart device buying is no longer about megapixels or CPU clock speed alone. It is about which codecs your device can encode and decode in hardware, and how efficiently it handles 4K, 8K, HDR, and next-generation streaming formats.

According to Research and Markets, the global next-generation codec market is growing at over 20% CAGR. As a result, the devices used for streaming, shooting, and editing are increasingly defined by their support for HEVC, AV1, and emerging standards such as VVC and AV2.

If you choose the wrong silicon, you lock yourself into higher storage costs, slower exports, or compromised streaming quality for years.

1. Devices for Streaming: Prioritize AV1 Hardware Decode

Major platforms such as YouTube and Netflix increasingly deliver 4K streams in AV1. Visionular and AOMedia ecosystem reports highlight that AV1 hardware adoption is now widespread across flagship chipsets.

This means your TV, smartphone, tablet, or laptop should support native AV1 hardware decoding, not software fallback. Software decoding increases battery drain and may cause dropped frames at higher resolutions.

For future-proof streaming in 2026, look for native AV1 hardware decoding on the spec sheet. Devices powered by recent Apple A-series and M-series chips, Snapdragon 8-series (Gen 2 and later), and modern desktop GPUs all meet this requirement. Without it, you may not receive the highest quality stream even if your display is capable.

2. Devices for Shooting: Efficiency vs Editability

When selecting a smartphone or camera, codec choice directly affects storage usage and post-production flexibility.

HEVC typically delivers around 50% bitrate savings compared to H.264 at equivalent quality, as demonstrated in multiple industry analyses and BBC-led evaluations. This makes HEVC ideal for long 4K or 8K recording sessions.

However, creators should also consider color depth and chroma subsampling support.

| Use Case | Recommended Codec | Why It Matters |
|---|---|---|
| Casual 4K Recording | HEVC (H.265) | Half the storage vs H.264 |
| Advanced Editing | HEVC 4:2:2 10-bit or ProRes/APV | Greater color grading flexibility |
| Long-form Archival | HEVC or AV1 | Lower long-term storage cost |

New chipsets such as Apple’s 2nm A20 series and Qualcomm’s Snapdragon 8 Elite Gen 5 introduce advanced hardware pipelines, enabling efficient high-resolution encoding with reduced power draw. Reports indicate that 2nm designs can lower power consumption by roughly 25–30% versus prior nodes at similar performance levels.

This directly translates into longer 8K capture times and reduced thermal throttling during extended shoots.
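As a rough back-of-the-envelope check, runtime on a fixed energy budget scales with the inverse of power draw. The sketch below assumes, optimistically, that the quoted 25–30% reduction applies to the whole capture workload; in a real phone the display, sensor, and storage also draw power, so actual gains would be smaller.

```python
def runtime_gain(power_reduction: float) -> float:
    """Factor by which runtime grows when power draw drops by a fraction.

    Assumes a fixed energy budget, so runtime = energy / power.
    """
    if not 0.0 <= power_reduction < 1.0:
        raise ValueError("power_reduction must be in [0, 1)")
    return 1.0 / (1.0 - power_reduction)


for reduction in (0.25, 0.30):
    print(f"{reduction:.0%} less power -> {runtime_gain(reduction):.2f}x runtime")
```

Under that assumption, a 25–30% power reduction corresponds to roughly 1.33x to 1.43x the recording time on the same battery.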

3. Devices for Editing: 4:2:2 Hardware Support Is Critical

Many modern mirrorless cameras record in HEVC or H.264 4:2:2 10-bit. Historically, GPUs accelerated only 4:2:0 formats, leaving CPUs to handle heavier 4:2:2 decoding.

According to Puget Systems’ verification tests, NVIDIA’s RTX 50 series introduces full hardware support for 4:2:2 decoding and significantly accelerates export workflows compared to previous generations.

This means smoother timeline playback, faster scrubbing, and shorter render times for high-bitrate footage.

If you edit professionally or semi-professionally, ensure your GPU explicitly supports 4:2:2 hardware decode. Otherwise, even a powerful CPU may struggle with multiple 4K streams.
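One way to check whether your own footage falls into the 4:2:2 category is to look at the pixel format string that tools such as ffprobe report for a video stream. The helper below is a minimal sketch that parses names following FFmpeg's planar YUV convention (for example `yuv420p` or `yuv422p10le`); the function names are hypothetical, and other layouts such as `nv12` or `p010le` are deliberately left unhandled.

```python
import re
from typing import Optional, Tuple

# Matches planar YUV names such as yuv420p, yuvj420p, yuv422p10le, yuv444p12be.
_PLANAR_YUV = re.compile(r"^yuvj?(420|422|444)p(\d{1,2})?(?:le|be)?$")


def classify_pix_fmt(pix_fmt: str) -> Optional[Tuple[str, int]]:
    """Return (chroma subsampling, bit depth), or None if not recognized."""
    match = _PLANAR_YUV.match(pix_fmt)
    if match is None:
        return None
    digits = match.group(1)
    chroma = f"{digits[0]}:{digits[1]}:{digits[2]}"
    # Names without an explicit depth suffix are 8-bit.
    depth = int(match.group(2)) if match.group(2) else 8
    return chroma, depth


def needs_422_decoder(pix_fmt: str) -> bool:
    """True when the stream uses 4:2:2 chroma subsampling."""
    info = classify_pix_fmt(pix_fmt)
    return info is not None and info[0] == "4:2:2"


for name in ("yuv420p", "yuv422p10le", "nv12"):
    print(name, classify_pix_fmt(name), needs_422_decoder(name))
```

If a clip reports a 4:2:2 format and the GPU lacks 4:2:2 decode support, expect the editing application to fall back to CPU decoding or to require proxies.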

4. Storage and Bandwidth Strategy

Codec efficiency directly impacts SSD capacity planning and cloud backup costs.

At 4K resolution, HEVC may require roughly 15–16 Mbps for quality levels that demand around 32 Mbps in H.264. Over hundreds of hours of footage, this difference becomes financially meaningful.
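The storage difference follows directly from bitrate multiplied by duration. The sketch below uses the 32 Mbps and 16 Mbps figures from the paragraph above and assumes constant-bitrate video, ignoring audio tracks and container overhead; the 100-hour library size is a hypothetical example.

```python
def storage_gb(bitrate_mbps: float, hours: float) -> float:
    """Storage in decimal gigabytes for a constant-bitrate recording."""
    if bitrate_mbps < 0 or hours < 0:
        raise ValueError("bitrate and duration must be non-negative")
    megabits = bitrate_mbps * hours * 3600
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


HOURS = 100  # hypothetical size of a footage library

h264_gb = storage_gb(32, HOURS)
hevc_gb = storage_gb(16, HOURS)
print(f"H.264: {h264_gb:.0f} GB, HEVC: {hevc_gb:.0f} GB, "
      f"saved: {h264_gb - hevc_gb:.0f} GB")
```

At these bitrates one hour of 4K footage takes about 14.4 GB in H.264 versus 7.2 GB in HEVC, so a 100-hour library differs by roughly 720 GB.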

In markets like Japan, where mobile data limits remain common, efficient codecs reduce upload times and bandwidth charges.

For creators frequently uploading to cloud platforms, recording in HEVC or AV1 can halve transfer times compared to legacy H.264 at equivalent quality.

5. Licensing Stability and Ecosystem Confidence

HEVC adoption was once slowed by fragmented patent pools. However, the 2025–2026 consolidation under Access Advance unified major licensing structures, with Access Advance reporting 100% renewal among existing licensees and participation from leading global manufacturers.

This reduces long-term implementation uncertainty for device makers and increases confidence that HEVC support will remain stable across hardware generations.

Meanwhile, AV1’s royalty-free model continues to dominate internet streaming, while VVC and AV2 evolve in parallel for next-stage efficiency gains.

In practical terms, a smart 2026 buying strategy looks like this: choose AV1-capable devices for streaming, HEVC-efficient hardware for shooting, and 4:2:2-accelerated GPUs for editing.

Video is no longer just content. It is infrastructure. And the codec engine inside your device determines how efficiently that infrastructure works for the next five years.

References

- BetaNews: H.265/HEVC offers 50 percent bitrate savings over H.264/AVC

- Visionular: AV1 Decoding and Hardware Ecosystem: The Future of Video Delivery

- Research and Markets: Next Generation Video Codecs Market – Global Strategic Business Report

- Business Wire: Access Advance Extends HEVC Advance Rate Increase Deadline

- Wccftech: Apple A20 And A20 Pro, The iPhone’s First 2nm Chipsets – Here Is Everything You Need To Know

- Puget Systems: Verifying NVIDIA GeForce RTX 50 Series Performance

- Nokia: The Future of Video Compression | Is VVC Ready for Prime Time?

- T3: Netflix could get a massive streaming boost soon, thanks to key tech breakthrough

- GreyB: Exclusive 2026 Video Codec Landscape | Who Leads Next Standard?