Face recognition is no longer just a convenient way to unlock your phone. In 2026, it has quietly become a core layer of global digital infrastructure, powering smartphones, airports, public safety systems, and even AI-powered smart glasses.

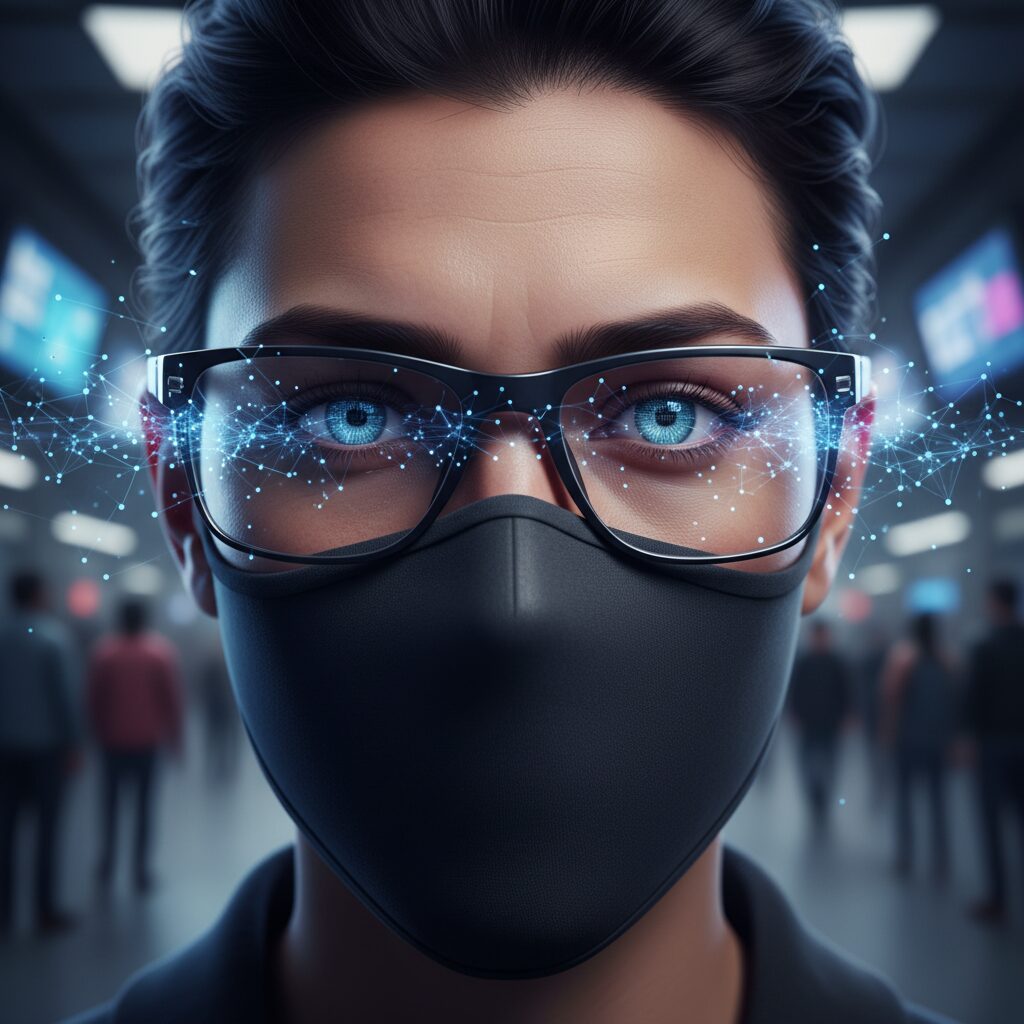

For gadget enthusiasts, this evolution raises a natural question: how did facial recognition suddenly become so reliable in the real world, even when faces are partially hidden by masks, glasses, or motion?

The answer lies in major breakthroughs in deep learning, periocular (eye-area) recognition, and on-device AI processing. These advances have transformed face recognition from a fragile lab technology into a robust system that works in crowded stations, dim lighting, and everyday life.

This article explores how benchmark data from NIST, real deployments by Apple and NEC, and the rise of wearable devices are redefining what facial recognition can do. By reading on, you will gain a clear, evidence-based understanding of where the technology stands today and why 2026 marks a turning point for visual intelligence.

- From Phone Unlocking to Visual Intelligence Infrastructure

- How NIST Benchmarks Define Real-World Facial Recognition Accuracy

- Why Masks and Glasses No Longer Break Face Recognition Systems

- Periocular Recognition Breakthroughs Around the Eyes

- Deep Learning Innovations That Preserve Accuracy Under Occlusion

- Apple Face ID in 2026 and the Evolution of Consumer Biometrics

- The Rise of AI Smart Glasses and On-the-Go Face Recognition

- Facial Recognition in Airports, Railways, and Urban Infrastructure

- Public Safety and Law Enforcement Use Cases in Crowded Environments

- Market Growth, Ethics, and the Shift Toward On-Device Biometrics

- What Comes Next: Spatial World Models and Physical AI

- References

From Phone Unlocking to Visual Intelligence Infrastructure

In the early 2010s, facial recognition was experienced mainly as a convenience feature for unlocking a smartphone. By 2026, however, this role has fundamentally changed, and the technology now functions as a core layer of visual intelligence infrastructure that quietly supports society. **The shift is not incremental but architectural**, driven by breakthroughs in deep learning and by the demand for reliable performance under real-world conditions such as masks, glasses, and motion.

This transformation is clearly reflected in evaluations conducted by the U.S. National Institute of Standards and Technology (NIST). According to NIST’s FRTE benchmarks released in early 2026, top-tier algorithms achieve false non-match rates of around 0.15 percent even at extremely strict thresholds, such as a false match rate of one in a million. Such performance means facial recognition no longer requires controlled environments or explicit user cooperation, enabling seamless deployment in airports, transit systems, and wearable devices.

| Phase | Primary Use | Operational Characteristics |
|---|---|---|
| Early 2010s | Phone unlocking | Static pose, no occlusion tolerance |
| Early 2020s | Access control | Limited mask and glasses support |
| 2026 | Visual intelligence infrastructure | In-the-wild recognition, continuous operation |

A key enabler of this evolution is the dramatic improvement in recognition accuracy when faces are partially covered. NIST’s analyses of mask effects show that leading algorithms limit the increase in false non-match rates to roughly four to five times the rate for uncovered faces, a gap that commercial systems had narrowed further by 2026. **This level of robustness allows facial recognition to fade into the background**, much like electricity or network connectivity.

Equally important is the rise of periocular recognition, which focuses on the eye region. Recent peer-reviewed research demonstrates that refined training methods preserve subtle asymmetries around the eyes, improving accuracy without increasing computational cost. These findings have been rapidly absorbed into production systems, reinforcing the idea that facial recognition is now a reusable intelligence component rather than a single-purpose feature.

As a result, facial recognition in 2026 should be understood not as a gadget function but as a foundational layer that enables higher-level perception. **From smartphones to smart glasses and urban systems, it provides machines with visual identity awareness**, forming the basis upon which contextual understanding and human-centric AI services are built.

How NIST Benchmarks Define Real-World Facial Recognition Accuracy

When discussing real-world facial recognition accuracy in 2026, the most reliable reference is the benchmark framework provided by the U.S. National Institute of Standards and Technology. According to NIST, laboratory accuracy alone is no longer sufficient, and algorithms must prove their robustness under unconstrained, in-the-wild conditions such as masks, glasses, aging, and variable lighting.

NIST’s FRTE benchmarks are designed to simulate these real operational stresses by evaluating both false non-match rates and false match rates at extremely strict thresholds. This approach reflects environments like airport security gates or financial authentication, where even a single error can have serious consequences.

| Evaluation Metric | Operational Meaning | 2026 Top-Tier Results |
|---|---|---|
| FNMR @ FMR = 10⁻⁶ | Genuine user rejected while impostors are accepted at most once per million attempts | ≈0.15% |
| Masked-face FNMR ratio | Accuracy drop due to occlusion | Roughly 4–5× the unmasked FNMR |

NIST reports released in early 2026 show that leading algorithms maintain FNMR values of around 0.0014–0.0016 (0.14–0.16 percent) even at a one-in-a-million false match tolerance. This level of performance means that users can pass through authentication systems with minimal friction, while operators retain strong security guarantees.
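To make the FNMR @ FMR metric concrete, here is a minimal Python sketch (3.9 or later, for `math.nextafter`) of how it can be computed from two lists of similarity scores: impostor comparisons (different people) and genuine comparisons (same person). It assumes higher scores mean greater similarity and that a match is declared when the score reaches the threshold. The score distributions are synthetic placeholders rather than NIST data, and because an FMR of 10⁻⁶ can only be measured with at least a million impostor comparisons, the demo uses a looser 10⁻⁴ operating point.

```python
import math
import random


def threshold_at_fmr(impostor_scores, target_fmr):
    """Return the lowest threshold whose false match rate is <= target_fmr.

    A comparison counts as a match when score >= threshold, and higher
    scores mean "more similar".
    """
    if not impostor_scores:
        raise ValueError("need at least one impostor score")
    ranked = sorted(impostor_scores, reverse=True)
    # How many impostor comparisons may be accepted at this FMR.
    allowed = math.floor(target_fmr * len(ranked))
    if allowed >= len(ranked):
        return ranked[-1]  # every impostor may pass; accept everything
    # Any threshold at or below ranked[allowed] would accept allowed + 1
    # impostors, so place it just above that score.
    return math.nextafter(ranked[allowed], math.inf)


def fnmr(genuine_scores, threshold):
    """Fraction of genuine (same-person) comparisons rejected at the threshold."""
    rejected = sum(1 for s in genuine_scores if s < threshold)
    return rejected / len(genuine_scores)


# Synthetic score distributions for illustration only (not NIST data).
rng = random.Random(0)
impostor = [rng.gauss(0.2, 0.1) for _ in range(100_000)]
genuine = [rng.gauss(0.8, 0.1) for _ in range(10_000)]

# 100,000 impostor pairs cannot resolve FMR = 1e-6, so use 1e-4 here.
t = threshold_at_fmr(impostor, 1e-4)
print(f"threshold={t:.3f}  FNMR={fnmr(genuine, t):.3%}")
```

The order of operations is the point: the threshold is fixed first from impostor scores alone, and only then is FNMR measured on genuine scores, which is why the two rates are always quoted as a pair.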

Equally important is how NIST evaluates masked and partially occluded faces. Its long-running analysis demonstrates that modern systems, particularly those optimized for periocular features, limit accuracy degradation to a manageable range. This evidence explains why facial recognition now performs reliably in airports, transit systems, and wearable devices without requiring user cooperation.

By grounding performance claims in transparent, repeatable benchmarks, NIST effectively defines what “real-world accuracy” means, transforming facial recognition from a promising technology into dependable infrastructure.

Why Masks and Glasses No Longer Break Face Recognition Systems

Until a few years ago, wearing a mask or glasses was one of the most reliable ways to confuse face recognition systems. Covering the nose and mouth removed key facial landmarks, while reflections and frame occlusion disrupted eye detection. In 2026, however, this assumption no longer holds true, and **face recognition has become robust enough that everyday accessories rarely degrade user experience**.

This shift is not based on marketing claims but on measurable improvements. According to the latest evaluations published by the U.S. National Institute of Standards and Technology, top-tier algorithms now maintain extremely low error rates even under partial occlusion. These results demonstrate that modern systems are no longer dependent on a “complete face,” but instead adapt dynamically to visible regions.

| Condition | Typical FNMR (Pre-2020) | Typical FNMR (2026 Top Tier) |
|---|---|---|
| No mask, no glasses | ~0.1–0.3% | ~0.15% |
| Mask worn | Several % or higher | ~0.6–0.9% |
| Glasses or sunglasses | Highly variable | Near baseline |

The key technical breakthrough behind this resilience is the maturation of periocular recognition, which focuses on the region around the eyes. Research published in 2025 showed that preserving left–right eye asymmetry during training significantly improves discrimination accuracy. By avoiding horizontal flipping and carefully controlling gradient updates, models retain subtle individual traits that remain visible even when masks or frames are present.

From a system design perspective, this means that **the eyes have effectively become a first-class biometric signal**, rather than a fallback. Vendors such as NEC have demonstrated walk-through recognition systems that identify individuals at normal walking speed from up to about three meters away, even when glasses, motion blur, or off-axis gaze are involved. These systems combine visible-light and infrared sensing with self-supervised foundation models, allowing stable recognition without requiring users to stop or cooperate.

Consumer devices reflect the same trend. Apple’s latest Face ID iterations, as explained in its official guidance and reinforced by NIST-aligned benchmarks, rely far less on the lower half of the face than earlier generations. Mask-aware template generation and expanded angular tolerance enable consistent authentication using eye and upper-face geometry alone. As a result, **putting on glasses in the morning or a mask on public transport no longer changes how the device behaves**.

Importantly, these improvements also reduce false rejections rather than simply increasing tolerance. NIST data shows that even at extremely strict security thresholds, modern algorithms keep rejection rates under one percent with masks on. For users, this translates into smoother access at airports, transit gates, and secure facilities, without repeated retries or manual overrides.

In practical terms, masks and glasses have lost their power as “edge cases.” What once broke face recognition systems is now an expected input condition, handled by design rather than exception. This quiet reliability is precisely why, in 2026, face recognition feels less like a technology you notice and more like infrastructure you trust.

Periocular Recognition Breakthroughs Around the Eyes

Periocular recognition, which focuses on the region around the eyes, has emerged as one of the most decisive breakthroughs in facial recognition by 2026. **When masks, scarves, or helmets obscure the lower face, the eye area becomes the most information-dense biometric zone**, containing eyelid contours, eyebrow shapes, skin texture, and inter-eye geometry. According to evaluations by the U.S. National Institute of Standards and Technology, modern algorithms trained with periocular emphasis now retain high accuracy even under heavy occlusion, a scenario that once caused error rates to spike dramatically.
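The periocular region can be made concrete with a small geometric sketch: given two eye-centre landmarks, cut out a box that spans both eyes and the eyebrows while staying clear of the lower face. This is an illustrative assumption, not the cropping rule of any system cited here; the landmark detector is not shown, and the margin defaults (`side`, `above`, `below`, expressed as fractions of the inter-eye distance) are hypothetical values.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    left: int
    top: int
    right: int
    bottom: int


def periocular_box(eye_a, eye_b, img_w, img_h,
                   side=0.75, above=0.6, below=0.4):
    """Crop box covering both eyes and the eyebrows.

    eye_a and eye_b are (x, y) eye-centre coordinates in pixels from some
    landmark detector (not shown). The margins are fractions of the
    inter-eye distance; the defaults are hypothetical illustration values.
    """
    (ax, ay), (bx, by) = eye_a, eye_b
    iod = math.hypot(bx - ax, by - ay)  # inter-ocular distance
    if iod == 0:
        raise ValueError("eye centres must differ")
    x0 = min(ax, bx) - side * iod
    x1 = max(ax, bx) + side * iod
    y0 = min(ay, by) - above * iod  # extra room above for the eyebrows
    y1 = max(ay, by) + below * iod  # keep the crop in the upper face
    # Round outward, then clamp to the image so the crop is always valid.
    return Box(max(0, math.floor(x0)), max(0, math.floor(y0)),
               min(img_w, math.ceil(x1)), min(img_h, math.ceil(y1)))


print(periocular_box((100, 120), (160, 120), 640, 480))
```

Scaling every margin by the inter-eye distance keeps the crop proportional whether the face is close to the camera or several meters away.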

A key technical advance lies in how deep learning models are trained to understand the asymmetry between the left and right eyes. Research published in 2025 by Fujio, Kaga, and Takahashi demonstrated that conventional horizontal flipping during training can blur these asymmetries and reduce accuracy. By contrast, **vertical flip learning preserves left-right uniqueness**, while a technique known as opposite-side backpropagation stop prevents the model from confusing one eye with the other. Implemented on standard CNN architectures such as ResNet18, this approach improved periocular recognition accuracy by roughly 1–2 percentage points without increasing computational cost, a meaningful gain at scale.
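Why horizontal flipping hurts can be shown with a toy example. The sketch below treats a tiny periocular crop as a grid of pixel values and compares a simple left-to-right column profile before and after each flip. It illustrates only the geometric argument; it is not the authors' training code, the pixel values are made up, and the opposite-side backpropagation stop is not modeled.

```python
def hflip(img):
    """Mirror left-right: column order is reversed in every row."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror top-bottom: row order is reversed, columns untouched."""
    return img[::-1]


def column_profile(img):
    """Sum of each column: a crude left-to-right signature of the crop."""
    return [sum(col) for col in zip(*img)]


# A toy 3x4 crop whose bright features sit toward the image-left side,
# standing in for an individual's asymmetric eye-region traits.
crop = [
    [9, 1, 0, 0],
    [8, 2, 0, 1],
    [7, 3, 1, 0],
]

print(column_profile(crop))         # [24, 6, 1, 1]
print(column_profile(vflip(crop)))  # [24, 6, 1, 1]  layout preserved
print(column_profile(hflip(crop)))  # [1, 1, 6, 24]  layout mirrored
```

A horizontally flipped crop presents the subject's asymmetric traits in mirror order, so mixing such samples into training can blur left-right identity cues, whereas the vertical flip still adds variation while leaving the left-to-right layout untouched.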

These algorithmic refinements translate directly into real-world performance. NIST’s Face Mask Effects analysis showed that top-tier systems limited the false non-match rate increase under mask conditions to about four to five times that of unmasked faces, a sharp improvement over early-pandemic systems. **By 2026, many commercial deployments report that users are often unaware the system is compensating for masks at all**, because periocular features alone are sufficient to maintain a smooth authentication experience.

| Condition | Primary Visible Features | Relative FNMR Impact |
|---|---|---|
| No occlusion | Full face geometry | Baseline |
| Mask worn | Eyes, eyebrows, upper cheeks | Approximately 4–5× |
| Mask and glasses | Periocular texture and shape | Slightly higher but stable |

Industry leaders have rapidly integrated these insights. NEC has combined periocular recognition with iris analysis through self-supervised foundation models, enabling walk-through identification from up to three meters away while subjects move at normal walking speed. This capability, validated in large-scale trials, highlights a broader shift: **recognition no longer depends on a cooperative, stationary user**, but instead adapts to natural human motion and imperfect visibility.
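The article does not describe how NEC's models combine the face and iris cues. One common, generic technique in multimodal biometrics is score-level fusion, sketched below under stated assumptions: each matcher's raw score is normalized to [0, 1] using a known score range, and the normalized scores are combined by a weighted sum. The score ranges, the weight `w_face`, and `THRESHOLD` are hypothetical illustration values, not NEC parameters.

```python
def min_max(score, lo, hi):
    """Map a raw matcher score onto [0, 1] using the matcher's known range."""
    if hi <= lo:
        raise ValueError("hi must exceed lo")
    return min(1.0, max(0.0, (score - lo) / (hi - lo)))


def fuse(face_score, iris_score, face_range=(0.0, 1.0),
         iris_range=(0.0, 100.0), w_face=0.5):
    """Weighted-sum fusion of normalized face and iris scores.

    Pass None for a modality that was not captured (e.g. iris hidden by
    glare); the remaining score is then used on its own.
    """
    parts = []
    if face_score is not None:
        parts.append((w_face, min_max(face_score, *face_range)))
    if iris_score is not None:
        parts.append((1.0 - w_face, min_max(iris_score, *iris_range)))
    if not parts:
        raise ValueError("need at least one modality")
    total_w = sum(w for w, _ in parts)
    return sum(w * s for w, s in parts) / total_w


THRESHOLD = 0.7  # hypothetical operating point

print(fuse(0.82, 64.0) >= THRESHOLD)  # face and iris both available
print(fuse(0.82, None) >= THRESHOLD)  # iris missing, face only
```

Dividing by the weights that are actually present lets the system fall back to a single modality without changing the decision threshold.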

Periocular breakthroughs also underpin the rise of wearable devices such as AI smart glasses. Because these devices capture faces from oblique angles and under variable lighting, reliance on the eye region is essential. Analysts and engineers alike note that periocular-centric models are what make real-time, on-device recognition feasible within strict power and privacy constraints. As a result, the area around the eyes has quietly become the cornerstone of robust facial recognition in the wild, redefining what reliable biometric authentication looks like in everyday life.

Deep Learning Innovations That Preserve Accuracy Under Occlusion

Occlusion has long been one of the hardest problems in face recognition, yet recent deep learning innovations have shown that accuracy does not have to be sacrificed when faces are partially hidden. In 2026, masks, eyeglasses, and sunglasses are no longer treated as exceptional edge cases. Instead, they are explicitly modeled during training, allowing recognition systems to remain reliable in everyday, in-the-wild conditions.

According to evaluations published by the U.S. National Institute of Standards and Technology, top-performing algorithms now keep false non-match rates at extremely low levels even when strict thresholds are applied. What is especially notable is that these gains are not limited to clean, frontal images. **The same models maintain strong performance when key facial regions are occluded**, which marks a clear shift from pre-pandemic approaches that relied heavily on the full face.

| Condition | Typical FNMR (as a fraction) | Observed Trend |
|---|---|---|
| No occlusion | ~0.0015 | Matches top-tier NIST FRTE results |
| Mask worn | ~0.006–0.009 | Error increase contained within 4–5× |
| Glasses or sunglasses | Slightly above baseline | Stabilized through periocular learning |

The technical foundation behind this resilience lies in periocular recognition, which focuses on the region around the eyes. When the lower half of the face is covered, this area becomes the most information-dense signal. Research published in 2025 demonstrated that conventional data augmentation, such as horizontal flipping, can actually blur the asymmetric traits between the left and right eye. To address this, researchers proposed vertical flip learning combined with suppressing gradient updates from the opposite eye.

These techniques may sound subtle, but their impact is measurable. Experiments showed a **1–2% absolute improvement in recognition accuracy under occlusion**, achieved without increasing model size or computational cost. Importantly, these methods integrate seamlessly with standard convolutional neural networks like ResNet18, which makes them attractive for deployment in real systems rather than remaining confined to academic prototypes.

Commercial implementations confirm that these ideas scale beyond the lab. NEC has publicly demonstrated systems that combine face and iris cues using self-supervised foundation models. These systems can authenticate individuals while they are walking, at distances of up to three meters, and under challenging lighting conditions. Glasses, motion blur, and partial occlusion are treated as normal input variations, not as failure modes.

From a user perspective, this progress translates into a smoother experience. Authentication no longer requires deliberate cooperation such as removing a mask or adjusting eyewear. From a system designer’s perspective, the significance is even greater. **Robustness under occlusion reduces bias, lowers friction, and expands the range of environments where facial recognition can be responsibly deployed**, from transportation hubs to wearable devices.

As NIST benchmarks continue to evolve, they increasingly reward algorithms that perform well under these imperfect conditions. This alignment between evaluation criteria and real-world usage ensures that future deep learning innovations will keep prioritizing accuracy where it matters most: not in controlled labs, but in daily life where faces are rarely fully visible.

Apple Face ID in 2026 and the Evolution of Consumer Biometrics

In 2026, Apple Face ID is no longer perceived as a simple convenience feature for unlocking an iPhone, but as a mature consumer-grade biometric system that reflects the broader evolution of facial recognition in society. **Apple’s approach emphasizes reliability under real-world conditions**, especially changes in appearance caused by masks, glasses, makeup, and aging, which historically degraded user experience.

According to Apple’s official guidance and reports cited by technology analysts, Face ID in iOS 26 has expanded its tolerance for viewing angles and partial occlusion, while maintaining its on-device privacy model. This balance is critical, as the U.S. National Institute of Standards and Technology has repeatedly highlighted that consumer biometrics must achieve both low false rejection rates and predictable behavior in uncontrolled environments.

One practical example is the renewed focus on the “Alternate Appearance” feature. By storing a second full facial template, Face ID can adapt to drastic but legitimate appearance changes, such as prescription glasses with thick frames or specific professional makeup. **Apple engineers describe this as a controlled form of user-assisted retraining**, avoiding continuous background learning that could increase security risk.
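Apple does not publish Face ID's matching algorithm, so the following is only a generic sketch of the idea behind a second enrolled appearance: a probe embedding is compared with each enrolled template by cosine similarity and accepted if the best score clears a threshold. The toy 3-D embeddings and the `threshold` value are hypothetical; real systems use much longer learned embeddings.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        raise ValueError("embeddings must be non-zero")
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def verify(probe, templates, threshold=0.8):
    """Return (accepted, best_score) for a probe against enrolled templates.

    templates holds the primary appearance and, optionally, one alternate
    appearance; the probe is accepted if it is close enough to either.
    """
    if not 1 <= len(templates) <= 2:
        raise ValueError("expected one or two enrolled appearances")
    best = max(cosine(probe, t) for t in templates)
    return best >= threshold, best


# Toy embeddings for illustration only.
primary = [0.9, 0.1, 0.0]    # enrolled without glasses
alternate = [0.2, 0.9, 0.3]  # enrolled with thick-framed glasses
probe = [0.25, 0.85, 0.35]   # captured while wearing those glasses
print(verify(probe, [primary]))             # primary template only
print(verify(probe, [primary, alternate]))  # with the alternate appearance
```

Because the alternate template is only added through an explicit enrollment step, the accepted region grows in a controlled way rather than drifting through continuous background updates.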

| Aspect | Before 2024 | Face ID in 2026 |
|---|---|---|
| Mask handling | Limited eye-based fallback | Optimized periocular modeling |
| Angle tolerance | Primarily frontal | Wider off-axis recognition |
| User adaptation | Single appearance model | Dual appearance support |

Another notable shift is hardware miniaturization. Industry reports referenced by MacRumors indicate that Apple demanded thinner Face ID modules for its 2026 iPhone lineup, enabling a wider camera field of view. This design choice is not merely cosmetic. **A wider field directly improves recognition when the device is placed on a desk or held casually**, scenarios that dominate daily usage but were rarely considered in early biometric systems.

From a consumer biometrics perspective, Apple’s strategy aligns with NIST’s broader findings that accuracy gains increasingly come from robustness rather than raw algorithmic scores. Face ID’s evolution demonstrates how high-end research in periocular recognition and deep learning translates into subtle but meaningful everyday trust. Users may not notice the improvement explicitly, yet the absence of friction has become the defining metric of success.

The Rise of AI Smart Glasses and On-the-Go Face Recognition

The rapid rise of AI smart glasses in 2026 marks a decisive shift in how face recognition is used outside controlled environments. These devices are no longer designed merely to authenticate the wearer, but to recognize other people in real time while the user is moving through the world. This transition from static verification to on-the-go face recognition defines the new category of visual intelligence.

According to evaluations published by the U.S. National Institute of Standards and Technology, modern face recognition algorithms now maintain extremely low error rates even under masks and glasses, conditions that once crippled accuracy. This robustness is the technical foundation that allows smart glasses to function reliably in streets, events, and workplaces rather than only in front of fixed cameras.

| Use Context | Recognition Target | Key Technical Enabler |
|---|---|---|
| Consumer smart glasses | Known individuals | Periocular-focused deep learning |
| Public safety | Watchlists | On-device encrypted matching |
| Business networking | Contacts & profiles | Real-time visual AI overlays |

A major breakthrough enabling this shift is the maturation of periocular recognition, which focuses on the eye region rather than the full face. Peer-reviewed research released in 2025 demonstrated that preserving left–right eye asymmetry during model training improves recognition accuracy by up to two percent without increasing computational cost. This improvement is critical for smart glasses, where sunglasses, masks, and motion blur are the norm.

Global technology leaders are already translating these advances into wearable products. Apple’s upcoming smart glasses, as reported by multiple industry analyses, prioritize low-power processing derived from Apple Watch chips and deep integration with Siri. The intent is not immersive augmented reality, but subtle assistance such as identifying a familiar person and recalling past interactions at the right moment.

At CES 2026, competing devices from Meta, Rokid, and Xreal reinforced the same direction. Lighter frames, cameras optimized for continuous vision, and voice-first interaction signal that smart glasses are becoming socially acceptable, always-on sensors. Face recognition in this context operates quietly in the background, surfacing information only when it adds clear value.

Real-world deployment by law enforcement further illustrates the power and sensitivity of on-the-go recognition. During India’s Republic Day parade in January 2026, police officers used AI smart glasses to identify suspects in dense crowds without relying on cloud connectivity. Faces captured by the glasses were matched locally against encrypted databases, with visual cues overlaid directly in the officer’s field of view.

Experts cited by international security and standards bodies emphasize that the future viability of these devices depends on localized processing and strict data governance. By keeping biometric matching on-device and minimizing data retention, smart glasses can deliver instant recognition while reducing privacy risks. In this balance between capability and restraint, AI smart glasses are redefining how humans perceive and understand the people around them.

Facial Recognition in Airports, Railways, and Urban Infrastructure

Facial recognition has moved from controlled checkpoints into the flow of everyday mobility, and airports and railways have become its most demanding proving grounds. In 2026, large-scale transport hubs no longer evaluate these systems by laboratory accuracy alone, but by how reliably they perform amid masks, glasses, crowds, and constant motion.

According to the U.S. National Institute of Standards and Technology, the latest FRTE benchmarks show that top-tier algorithms maintain false non-match rates around 0.15 percent even under extremely strict thresholds. This level of reliability is what enables fully automated boarding and ticketless travel without forcing passengers to stop or cooperate consciously.

In Japan, Narita International Airport’s Face Express system illustrates how this translates into real operations. During peak months in 2025, the system processed millions of international travelers from diverse regions, many wearing masks or sunglasses. Meanwhile, Panasonic and NEC algorithms continued to rank among the world’s top performers on both controlled portrait images and more challenging in-the-wild border imagery, demonstrating resilience to aging and pose variation.

| Infrastructure | Deployment Focus | Operational Insight |
|---|---|---|
| International Airports | Seamless boarding and immigration | Walk-through recognition reduces queues without lowering security |
| Urban Railways | Ticketless gate access | Camera angles and occlusion remain critical design factors |

Railway trials reveal a different challenge. Experiments at metro stations show high accuracy for masked commuters, yet failures occur when helmets or visors block the periocular region. These findings are shaping next-generation gate designs that rely on multi-camera layouts and, increasingly, multimodal biometrics.

Across cities, facial recognition is becoming part of urban infrastructure rather than a visible security device. The direction is clear: systems must adapt to people as they are, not ask people to adapt to machines, and transport networks are setting that standard first.

Public Safety and Law Enforcement Use Cases in Crowded Environments

In crowded public spaces such as large-scale events, transportation hubs, and political gatherings, facial recognition has shifted from a passive surveillance tool to an active decision-support system for public safety professionals. By 2026, accuracy gains under masks, glasses, and uncontrolled lighting conditions have made real-time identification in dense crowds operationally viable, rather than experimental. According to benchmarks published by the U.S. National Institute of Standards and Technology, top-tier algorithms now maintain extremely low false non-match rates even under stringent thresholds, which is a prerequisite for use in law enforcement scenarios where hesitation or misidentification can carry serious consequences.

What fundamentally changes policing in crowded environments is mobility. Instead of relying solely on fixed cameras, officers are now equipped with wearable devices that bring facial recognition directly into their line of sight. This approach proved particularly effective during India’s Republic Day Parade in January 2026, where Delhi Police deployed AI-enabled smart glasses across a security force of approximately 10,000 personnel. The system was designed specifically for moving through crowds, identifying individuals of interest without stopping foot traffic or drawing public attention.

The operational workflow is intentionally simple. When an officer scans a crowd, detected faces are compared in real time against a watchlist stored on-device. Visual cues are overlaid directly onto the officer’s view, allowing immediate situational awareness without verbal radio checks or manual ID verification. Importantly, the algorithms demonstrated resilience against common concealment tactics such as masks, hats, glasses, beards, and even deliberate makeup alterations. Reports indicate that photographs taken decades earlier could still be matched accurately by compensating for age-related changes.
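The workflow described above is essentially a 1:N search against a small on-device gallery. Below is a minimal sketch under explicit assumptions: the embeddings come from some trained face or periocular model (not shown), encryption of the stored gallery is out of scope, and the `Watchlist` class, entry IDs, and threshold are hypothetical. The probe is used only inside the search call and is never stored, in keeping with the localized processing emphasized in this section.

```python
import math


def _normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    if n == 0:
        raise ValueError("zero-length embedding")
    return [x / n for x in v]


class Watchlist:
    """Tiny in-memory 1:N gallery (hypothetical; a real one would be encrypted)."""

    def __init__(self, threshold=0.75):
        self.threshold = threshold
        self._entries = {}  # entry_id -> unit-normalized embedding

    def enroll(self, entry_id, embedding):
        self._entries[entry_id] = _normalize(embedding)

    def search(self, probe):
        """Return (entry_id, score) for the best match at or above threshold, else None.

        The probe exists only for the duration of this call, so people who
        do not match leave no record behind.
        """
        q = _normalize(probe)
        best_id, best_score = None, -1.0
        for entry_id, ref in self._entries.items():
            score = sum(a * b for a, b in zip(q, ref))  # cosine similarity
            if score > best_score:
                best_id, best_score = entry_id, score
        if best_id is not None and best_score >= self.threshold:
            return best_id, best_score
        return None


wl = Watchlist(threshold=0.9)
wl.enroll("case-17", [1.0, 0.0, 0.0])
wl.enroll("case-42", [0.0, 1.0, 0.0])
print(wl.search([0.05, 0.99, 0.0]))  # close to case-42
print(wl.search([0.6, 0.6, 0.5]))    # no confident match
```

A linear scan is adequate for an event-specific watchlist of modest size; much larger galleries would typically call for an approximate nearest-neighbour index instead.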

From a technical standpoint, this level of robustness is closely tied to advances in periocular recognition, which prioritizes the eye region when the lower face is obscured. NIST’s earlier Face Mask Effects analyses already showed that leading algorithms limited error-rate increases to roughly four to five times under masks, and commercial systems in 2026 have further narrowed that gap. In crowded settings where mask usage remains common, this improvement directly translates into fewer missed detections and fewer unnecessary interventions.

| Operational Requirement | Traditional CCTV | Wearable Facial Recognition |
|---|---|---|
| Mobility in crowds | Limited to fixed angles | Officer-driven, dynamic viewpoints |
| Mask and disguise tolerance | Significant accuracy drop | High resilience via eye-region analysis |
| Response latency | Delayed, post-analysis | Immediate, in-view alerts |

Another critical dimension is threat detection beyond identity alone. In the Delhi case, facial recognition was combined with thermal scanning, enabling officers to detect concealed objects under clothing while simultaneously confirming identity. This multimodal approach reflects a broader trend noted by public-sector researchers, where face recognition acts as one sensor among several, rather than a single decisive authority. Such integration reduces overreliance on facial data and improves overall risk assessment in chaotic environments.

Ethical and legal considerations remain central, and agencies have responded by redesigning system architecture rather than abandoning the technology. Localized processing, limited watchlists, and event-specific deployments are increasingly favored. Experts from government technology advisory panels emphasize that these constraints are not merely policy choices but technical safeguards that help maintain public trust while preserving effectiveness.

In practice, the use of facial recognition in crowded public safety scenarios in 2026 is less about constant surveillance and more about precision. When applied narrowly to high-risk contexts, supported by independently validated accuracy metrics, and embedded in wearable, officer-centric tools, it enables faster, calmer, and more proportionate responses in environments where every second and every misjudgment matters.

Market Growth, Ethics, and the Shift Toward On-Device Biometrics

The rapid growth of the facial recognition market in 2026 is no longer driven by novelty, but by trust and deployment scale. According to industry analyses frequently cited by regulators and enterprise buyers, the global market expanded from 6.94 billion dollars in 2024 to 7.92 billion dollars in 2025, maintaining a double-digit annual growth rate. What is notable is not only the size of this expansion, but the direction it is taking. **Growth is increasingly concentrated in solutions that minimize centralized data storage and favor on-device or localized biometric processing**.
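The two market figures above can be checked with a line of arithmetic. The sketch below computes the compound annual growth rate; with a single year of data this reduces to the year-over-year change, about 14 percent, which is consistent with the double-digit description.

```python
def annual_growth(start, end, years=1):
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Market size in billions of US dollars, as cited in the text.
rate = annual_growth(6.94, 7.92)
print(f"2024 -> 2025 growth: {rate:.1%}")  # prints 2024 -> 2025 growth: 14.1%
```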

This shift is tightly linked to ethical pressure. Policymakers, privacy advocates, and standard-setting bodies such as NIST have consistently emphasized that biometric systems must reduce the risk of mass surveillance and data leakage. High-profile evaluations by NIST’s FRTE and FATE programs are often referenced by public agencies to justify procurement decisions, but passing accuracy benchmarks is no longer sufficient. Vendors are now expected to demonstrate how biometric templates are captured, stored, and deleted, and whether identification can occur without persistent cloud connectivity.

| Domain | Market Driver | Ethical Emphasis |
|---|---|---|
| Financial Services | Passwordless authentication | Biometric data never leaves the device |
| Public Transport | Seamless, contactless flow | Ephemeral matching without identity retention |
| Healthcare | AI-assisted diagnosis | Strict separation of identity and medical inference |

On-device biometrics have therefore emerged as a practical compromise between usability and ethics. Apple’s Face ID architecture is often cited by security researchers as a reference model, because facial templates are encrypted and stored in a secure enclave rather than transmitted externally. A similar philosophy is now visible in AI smart glasses used by law enforcement in India, where real-time matching is performed locally on paired smartphones without internet access. **These designs directly respond to criticism that facial recognition enables unchecked surveillance**.

Market analysts also point out that localized processing reduces latency and operational cost, which further accelerates adoption. In banking, on-device facial recognition has effectively completed the transition to passwordless authentication, while in border control it enables near-zero waiting times without building massive centralized biometric databases. Researchers in ethics and human-computer interaction have noted that users are more willing to accept always-on biometric systems when they understand that identification happens transiently and contextually.

As the market continues to grow, ethics is no longer treated as a constraint but as a differentiator. Vendors that align high NIST-validated accuracy with transparent, localized data handling are gaining a clear advantage. In this sense, **the shift toward on-device biometrics is not only a technical evolution, but a market-driven response to societal expectations of dignity, consent, and control**.

What Comes Next: Spatial World Models and Physical AI

What comes next in facial recognition is not simply higher accuracy, but a fundamental shift toward Spatial World Models and what researchers increasingly call Physical AI. In this paradigm, AI systems no longer interpret faces as isolated biometric inputs. Instead, they understand people as entities embedded in a continuously changing physical environment, where space, movement, and intent are tightly connected.

This transition is driven by the realization that real-world intelligence requires context. According to Fujitsu’s presentation at CES 2026, its Spatial World Model enables machines to construct a unified representation of spaces, objects, and humans, allowing AI to reason about how people move, where they are likely to go, and what they are trying to do. Facial recognition becomes one signal among many, rather than the single source of truth.

In practice, this means that identification is increasingly complemented, or even replaced, by behavioral understanding. Fujitsu’s computer vision research shows that skeletal recognition and gait analysis can infer age group, gender tendencies, and intent even when faces are masked or partially obscured. This approach reduces reliance on raw facial images, aligning with privacy-by-design principles while maintaining operational usefulness in crowded or dynamic environments.

| Approach | Primary Input | Strength in Real Environments |
|---|---|---|
| Conventional Face Recognition | Static facial features | High precision under controlled conditions |
| Spatial World Model | Face, body, movement, space | Robust understanding in dynamic, crowded spaces |

Physical AI extends this spatial understanding into action. Robots, cameras, and wearable devices share a common world model, enabling them to anticipate human behavior rather than merely react to it. Fujitsu describes scenarios in which service robots adjust their paths based on predicted pedestrian flow, or retail systems detect customer hesitation near shelves without identifying individuals by name.
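Fujitsu's world model is not described in technical detail here, so as a stand-in, the sketch below uses the simplest possible motion predictor, constant-velocity extrapolation, to check whether a robot's next waypoint will be clear. Each person is represented only by an anonymous track (position and velocity in metres), with no identity attached. The `Track` type, the 0.8 m clearance radius, and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    """Anonymous pedestrian track: position and velocity only, no identity."""
    x: float   # metres
    y: float   # metres
    vx: float  # metres per second
    vy: float  # metres per second


def predict(track, dt):
    """Constant-velocity extrapolation of a track dt seconds ahead."""
    return (track.x + track.vx * dt, track.y + track.vy * dt)


def path_is_clear(point, tracks, dt, radius=0.8):
    """True if no predicted pedestrian position lies within `radius` of point."""
    px, py = point
    for t in tracks:
        fx, fy = predict(t, dt)
        if (fx - px) ** 2 + (fy - py) ** 2 < radius ** 2:
            return False
    return True


tracks = [Track(0.0, 0.0, 1.2, 0.0), Track(5.0, 2.0, -0.5, 0.0)]
print(path_is_clear((2.4, 0.0), tracks, dt=2.0))  # someone will be there
print(path_is_clear((2.4, 3.0), tracks, dt=2.0))  # waypoint stays free
```

Real systems would replace the constant-velocity model with a learned predictor, but the interface stays the same: the planner consumes anonymous motion states rather than identities.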

This capability becomes especially powerful when combined with wearable devices such as AI smart glasses. A spatially aware system can recognize that a user is approaching a colleague, entering a restricted zone, or navigating a congested area, and then surface relevant information at the right moment. The intelligence lies not in face matching alone, but in understanding the situation as a whole.

Importantly, experts emphasize that Spatial World Models also provide a technical pathway to ethical deployment. By abstracting humans into anonymized vectors of motion and posture, systems can deliver safety, efficiency, and assistance without persistent identity tracking. This balance between utility and restraint is increasingly cited by academic and industrial researchers as essential for social acceptance.

In short, the future of facial recognition is not about seeing faces more clearly, but about seeing the world more intelligently. Spatial World Models and Physical AI mark the point where recognition evolves into understanding, enabling machines to coexist with humans in shared physical spaces in a way that feels natural, supportive, and increasingly invisible.

References

- NIST: Face Technology Evaluations – FRTE/FATE

- NIST: Face Recognition Technology Evaluation (FRTE) 1:1 Verification

- SciTePress: Improving Periocular Recognition Accuracy

- NEC: Walk-Through Face and Iris Recognition Technology

- MacRumors: Apple’s 2026 Smart Glasses: Five Key Features to Expect

- Mint: Security forces to use AI-enabled smart glasses on Republic Day 2026