Have you ever felt that smartphone cameras have reached a point where yearly upgrades no longer matter?

Many flagship phones now produce excellent photos, yet only a few truly change how images are created.

The Galaxy S25 Ultra is one of those rare devices, and its impact goes far beyond simple megapixel counts.

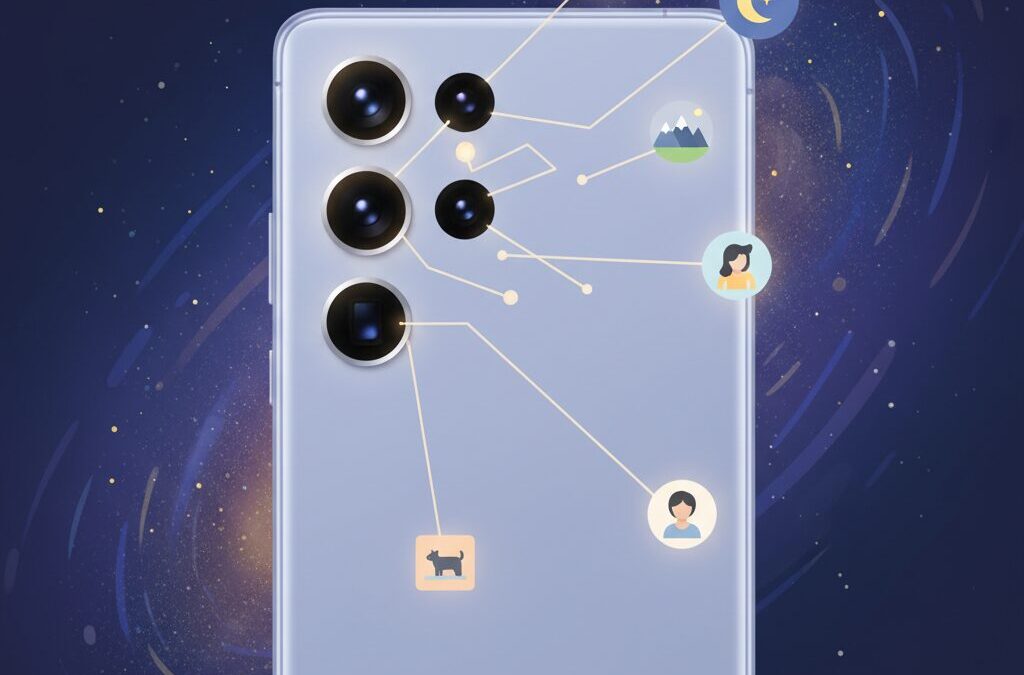

With a 200MP main sensor and Samsung’s advanced ProVisual Engine, this model represents a shift toward AI-driven computational photography.

Instead of relying solely on larger sensors, Samsung combines high-resolution hardware with powerful on-device AI processing.

This approach aims to deliver consistently high-quality photos in everyday situations, from bright landscapes to challenging night scenes.

In this article, you will discover how the Galaxy S25 Ultra’s camera system is designed, why Samsung made specific hardware choices, and how AI reshapes image processing.

You will also learn how it compares with rival flagships and what its long-term value looks like as newer models approach.

If you care about cutting-edge mobile imaging and real-world camera performance, this guide will help you understand why the Galaxy S25 Ultra still matters today.

- The Current State of Smartphone Cameras and Samsung’s Strategy

- Inside the 200MP ISOCELL HP2 Sensor: Design and Engineering Choices

- Pixel Binning Explained: How Tetra²pixel Adapts to Light

- Upgraded Ultra-Wide Camera and the Rise of High-Resolution Macro Shots

- Telephoto Configuration and the Role of AI Zoom

- What Is the ProVisual Engine and Why It Changes Image Processing

- Snapdragon 8 Elite for Galaxy: AI ISP and NPU in Action

- Night Photography and Video: How AI Handles Noise and Motion

- Galaxy S25 Ultra vs Other Flagships: Camera Philosophy Compared

- Longevity, Software Updates, and What the S25 Ultra Means for the Future

- References

The Current State of Smartphone Cameras and Samsung’s Strategy

As of early 2026, smartphone cameras have clearly entered a mature phase, and dramatic hardware leaps are becoming rare. According to industry observers such as GSMArena and Samsung’s own semiconductor disclosures, raw sensor size and megapixel counts alone no longer guarantee visible improvements. **Image quality is now defined by how effectively hardware and software collaborate**, and this shift frames the current competitive landscape.

In this environment, Samsung’s strategy stands out for its deliberate restraint. Rather than pursuing ever-larger sensors like some Chinese manufacturers, Samsung has doubled down on ultra-high resolution combined with advanced computational photography. The 200MP class sensor, once criticized as a marketing number, has evolved into a practical imaging tool thanks to pixel binning and AI-driven processing. This approach reflects a belief shared by many imaging researchers that flexibility across lighting conditions matters more than peak specifications.

The broader market illustrates this divergence clearly. Devices with one-inch sensors excel in shallow depth of field and light intake, but often require compromises in device thickness and thermal management. Samsung instead prioritizes balance, maintaining a slim industrial design while relying on processing power to close the gap. **This choice signals a long-term view that smartphones are no longer miniature cameras, but intelligent imaging systems.**

| Industry Trend | Typical Approach | Samsung’s Direction |
|---|---|---|
| Sensor competition | Larger physical sensors | Higher resolution with AI optimization |
| Image processing | Post-capture enhancement | Real-time AI ISP and NPU processing |
| Product design | Camera-first form factor | Ergonomics and daily usability |

Samsung executives have repeatedly emphasized that most users value consistency over extreme scenarios. Academic studies in computational photography, including research frequently presented at IEEE imaging conferences, support this view by showing that multi-frame synthesis and semantic scene understanding reduce failed shots more effectively than marginal hardware gains. **Samsung’s camera philosophy therefore targets reliability rather than spectacle.**

This strategy also aligns with changes in user behavior. Photos are primarily consumed on smartphone displays and social platforms, where dynamic range, color stability, and noise control matter more than absolute optical purity. By investing in AI pipelines that adapt output to human perception, Samsung positions its cameras to feel subjectively better, even when competitors claim superior components.

In short, the current state of smartphone cameras is less about breaking records and more about refining experiences. Samsung’s approach reflects an understanding that the future of mobile imaging lies in computation, not just optics, and this conviction shapes every decision behind its flagship camera systems.

Inside the 200MP ISOCELL HP2 Sensor: Design and Engineering Choices

The 200MP ISOCELL HP2 sensor at the heart of the Galaxy S25 Ultra is not simply a specification carried over from its predecessor, but a carefully validated design choice rooted in physical and optical engineering constraints. Samsung Electronics has repeatedly emphasized, including in its semiconductor briefings, that HP2 represents a balanced point between sensor size, pixel density, and manufacturability. **At 1/1.3-inch, the sensor is large enough to gather substantial light while remaining compatible with a slim flagship chassis**, a trade-off that becomes critical when multiple camera modules must coexist.

From an engineering standpoint, HP2’s defining feature is Tetra²pixel technology, which allows the sensor to adapt its effective pixel size to ambient light conditions. This is not a simple on-off binning mechanism but a multi-stage architecture that reconfigures pixel clusters dynamically at the hardware level before image processing begins. According to Samsung Semiconductor documentation, this approach reduces readout noise and improves charge aggregation efficiency, especially in low-light scenarios where signal integrity is most fragile.

| Output Mode | Effective Pixel Size | Primary Use Case |
|---|---|---|
| 200MP | 0.6µm | Bright light, maximum detail capture |
| 50MP | 1.2µm | General photography, balanced quality |
| 12.5MP | 2.4µm | Low light, night photography |
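The three output modes in the table are linked by simple arithmetic: binning n×n pixels divides the pixel count by n² and multiplies the effective pixel pitch by n. The short Python sketch below only restates that relationship. `binned_mode` is a hypothetical helper name, and the only inputs are the 200MP and 0.6µm figures quoted above.

```python
def binned_mode(full_megapixels: float, pixel_pitch_um: float, factor: int) -> tuple[float, float]:
    """Return (output megapixels, effective pixel pitch in µm) for factor x factor binning."""
    if factor < 1:
        raise ValueError("binning factor must be >= 1")
    # Pixel count falls with the square of the factor, pitch grows linearly.
    output_mp = full_megapixels / (factor * factor)
    effective_pitch = round(pixel_pitch_um * factor, 3)
    return output_mp, effective_pitch


# Figures taken from the table above: 200MP at 0.6µm.
for factor in (1, 2, 4):
    megapixels, pitch = binned_mode(200, 0.6, factor)
    print(f"{factor}x{factor} binning: {megapixels}MP at {pitch}µm")
```

Running it reproduces the 200MP, 50MP, and 12.5MP rows, which shows that the modes are one sensor read out at different groupings rather than three separate specifications.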

This flexible pixel architecture explains why Samsung opted not to adopt a newer but optically disruptive sensor. Industry analysts cited by PhoneArena note that switching to a different main sensor would have required a thicker lens stack, increasing camera bump height and negatively affecting ergonomics. **HP2 instead leaves headroom for image quality gains through tuning, rather than physical redesign**, an approach aligned with Samsung’s long-term sensor roadmap.

Another often overlooked aspect is the sensor’s fast readout circuitry. High-resolution sensors typically suffer from rolling shutter artifacts, yet HP2’s column-parallel ADC design shortens readout time, which benefits both stills and high-resolution video. Qualcomm engineers have pointed out that this characteristic pairs efficiently with the Snapdragon ISP, enabling complex multi-frame processing without introducing latency.

In practical terms, the HP2 sensor is less about headline megapixels and more about controllability. **Its design prioritizes predictable optical behavior and data consistency**, giving downstream computational photography systems stable, high-quality input. This sensor-first reliability is precisely why HP2 remains a cornerstone of Samsung’s imaging strategy in the Galaxy S25 Ultra.

Pixel Binning Explained: How Tetra²pixel Adapts to Light

Pixel binning is a core technology that allows a high‑resolution sensor to behave intelligently under different lighting conditions, and Tetra²pixel is Samsung’s most advanced implementation of this concept. Rather than treating all 200 million pixels as fixed units, the sensor dynamically changes how pixels cooperate, so that image quality is optimized for the available light.

In bright scenes, Tetra²pixel prioritizes pure resolution. Each 0.6µm pixel works independently, enabling extremely fine textures such as foliage, stone, or distant architecture to be captured without artificial sharpening. According to Samsung Semiconductor’s technical documentation on the ISOCELL HP2, this mode is designed to maximize spatial detail when photon noise is not a limiting factor.

| Light Condition | Effective Pixel Size | Output Resolution |
|---|---|---|
| Bright daylight | 0.6µm | 200MP |
| Normal indoor | 1.2µm (2×2 binning) | 50MP |
| Low light / night | 2.4µm (4×4 binning) | 12.5MP |

As light levels drop, the sensor begins to merge neighboring pixels. Four pixels become one virtual pixel in 50MP mode, which improves signal‑to‑noise ratio without sacrificing too much detail. This middle state is especially important for everyday photography, because it balances sharpness and brightness in a way that feels natural to the human eye.

In very dark environments, sixteen pixels are combined into a single 2.4µm unit. This dramatically increases the light collected per output pixel, reducing chroma noise and preserving shadow detail. Imaging researchers frequently note that larger effective pixel sizes are critical for low‑light performance, and Tetra²pixel achieves this without physically enlarging the sensor.
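To make the merging step concrete, here is a minimal Python sketch of binning on a small grid of pixel values. It is an illustration under simplifying assumptions, not Samsung's implementation: it ignores the colour filter layout, sums values in software rather than combining charge on the sensor, and uses made-up photon counts. The helper names `bin_pixels` and `shot_noise_snr_gain` are hypothetical. The SNR figure is the idealised shot-noise-limited case, where summing N pixels raises signal by N and noise by √N.

```python
import math


def bin_pixels(raw: list[list[float]], factor: int) -> list[list[float]]:
    """Sum each factor x factor block of `raw` into one output value."""
    rows, cols = len(raw), len(raw[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be multiples of the binning factor")
    binned = []
    for top in range(0, rows, factor):
        out_row = []
        for left in range(0, cols, factor):
            block_sum = sum(
                raw[r][c]
                for r in range(top, top + factor)
                for c in range(left, left + factor)
            )
            out_row.append(block_sum)
        binned.append(out_row)
    return binned


def shot_noise_snr_gain(factor: int) -> float:
    """Idealised SNR gain from summing factor*factor pixels (shot-noise-limited)."""
    return math.sqrt(factor * factor)


# A 4x4 patch of hypothetical photon counts from a dim scene.
patch = [
    [3, 4, 2, 5],
    [4, 3, 5, 4],
    [2, 5, 3, 4],
    [5, 2, 4, 3],
]
print(bin_pixels(patch, 2))  # 2x2 binning: four values remain
print(bin_pixels(patch, 4))  # 4x4 binning: one value remains
print(shot_noise_snr_gain(2), shot_noise_snr_gain(4))
```

In this idealised model the 4×4 mode gains roughly a factor of four in signal-to-noise ratio at the cost of a sixteenfold drop in pixel count, which is the trade the text describes.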

What makes this system compelling is its seamless integration with the image signal processor. The transition between modes happens automatically and invisibly, so you do not need to think about technical settings. Tetra²pixel adapts to light in real time, ensuring that resolution is never wasted and sensitivity is never insufficient.

Upgraded Ultra-Wide Camera and the Rise of High-Resolution Macro Shots

The ultra-wide camera of the Galaxy S25 Ultra has been quietly but fundamentally transformed, and this upgrade reshapes how close-up photography is approached on a smartphone. By moving from a 12MP sensor to the 50MP ISOCELL JN3, Samsung has effectively redefined the role of the ultra-wide lens, positioning it as a high-resolution macro tool rather than a secondary perspective camera.

This change matters because macro photography is no longer treated as a software trick, but as a resolution-driven imaging discipline. According to Samsung Semiconductor’s sensor documentation, the JN3’s 0.7µm pixels and higher pixel count allow meaningful cropping while preserving fine detail. In practical terms, this means that the camera does not rely solely on aggressive sharpening or AI reconstruction when shooting subjects at very close distances.

Macro shooting with ultra-wide lenses traditionally suffers from edge softness and limited resolving power. However, the S25 Ultra benefits from the JN3’s ability to capture dense spatial information across the frame. Independent camera evaluations from GSMArena note that fine structures such as pollen grains, fabric fibers, and surface scratches are rendered with higher micro-contrast compared to the previous generation.

The relationship between resolution and macro performance becomes clearer when comparing sensor-level characteristics. Higher pixel density allows the ProVisual Engine to selectively crop the sharpest central region without visibly degrading image quality. This approach aligns with modern computational photography research discussed by Qualcomm, where high-resolution inputs improve the stability of AI-based noise reduction and texture preservation.

| Aspect | Previous Ultra-Wide | Galaxy S25 Ultra Ultra-Wide |
|---|---|---|
| Resolution | 12MP | 50MP |
| Macro Detail Retention | Limited after cropping | High even with digital crop |
| Noise Handling | Software-dependent | Sensor + AI hybrid |

Another important outcome is consistency. Because the ultra-wide camera now supports pixel binning similar to the main sensor, close-up shots taken in lower light maintain cleaner color transitions. Samsung Newsroom has emphasized that unified sensor behavior across lenses allows the ProVisual Engine to apply more predictable tone mapping, which is especially noticeable in macro shots of food, plants, and everyday objects.

The rise of high-resolution macro photography on the S25 Ultra is not about magnification alone, but about information density. By feeding richer raw data into the imaging pipeline, the ultra-wide camera shifts macro photography from a novelty feature to a reliable creative option. This evolution reflects a broader industry trend, where resolution serves as a foundation for computational accuracy rather than as a marketing number.

For users who value close-up realism over exaggerated sharpness, the upgraded ultra-wide camera offers a tangible, experience-level improvement. It quietly demonstrates how sensor choices, when aligned with advanced image processing, can elevate even the smallest subjects into visually compelling photographs.

Telephoto Configuration and the Role of AI Zoom

The telephoto configuration of the Galaxy S25 Ultra deliberately prioritizes computational intelligence over radical hardware change, and this choice clearly defines the role of AI Zoom. Samsung retains a dual-telephoto setup consisting of a 3x optical lens with a 10MP Sony IMX754 sensor and a 5x periscope lens using a 50MP Sony IMX854 sensor. While this specification appears conservative on paper, it is designed to serve as a stable optical foundation for advanced AI-driven zoom processing.

Rather than chasing higher optical magnification, Samsung focuses on maximizing usable detail across intermediate focal lengths. According to Samsung Newsroom and Qualcomm documentation, the ProVisual Engine leverages the high-resolution 50MP telephoto sensor to enable in-sensor zoom, where central pixel regions are cropped with minimal loss, and then enhanced through AI-based super resolution. This approach allows the camera to deliver consistent image quality at non-native zoom levels such as 4x, 7x, or 8x, which are frequently used in real-world photography.

| Zoom Range | Primary Source | Processing Role |
|---|---|---|
| 3x | IMX754 (Optical) | AI-based upscaling to 12MP output |
| 5x | IMX854 (Optical) | 50MP crop with AI super resolution |
| 10x | IMX854 (Cropped) | Edge reconstruction and texture synthesis |
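The in-sensor zoom idea comes down to crop geometry: zooming past a lens's native magnification by a factor k keeps only 1/k² of the sensor's pixels. The Python sketch below works through that arithmetic with the lens figures quoted in this section. The selection rule (use the longest lens that does not exceed the requested zoom) and the names `Lens` and `plan_zoom` are assumptions for illustration. Samsung's actual lens-switching logic is not public and likely also weighs light level and focus distance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lens:
    name: str
    native_zoom: float
    megapixels: float


# Zoom factors and sensor resolutions as quoted in this article; treating the
# 200MP main camera as the 1x reference is an assumption of this sketch.
LENSES = (
    Lens("main (HP2)", 1.0, 200.0),
    Lens("3x telephoto (IMX754)", 3.0, 10.0),
    Lens("5x periscope (IMX854)", 5.0, 50.0),
)


def plan_zoom(requested_zoom: float) -> tuple[Lens, float]:
    """Pick the longest lens not exceeding the requested zoom and return it
    together with the megapixels left after cropping to the requested framing."""
    if requested_zoom < LENSES[0].native_zoom:
        raise ValueError("zoom below 1x is outside this sketch")
    candidates = [lens for lens in LENSES if lens.native_zoom <= requested_zoom]
    lens = max(candidates, key=lambda candidate: candidate.native_zoom)
    crop_factor = requested_zoom / lens.native_zoom
    remaining_mp = lens.megapixels / (crop_factor ** 2)
    return lens, remaining_mp


for zoom in (3, 5, 7, 10):
    lens, megapixels = plan_zoom(zoom)
    print(f"{zoom}x -> {lens.name}, about {megapixels:.1f}MP before AI upscaling")
```

At 10x the 50MP periscope sensor still supplies about 12.5MP of real samples, which helps explain why a high-resolution telephoto sensor gives super resolution more to work with than a 10MP one would.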

AI Zoom plays a particularly critical role beyond 5x. At higher magnifications, traditional digital zoom often suffers from edge breakage and texture collapse. Samsung addresses this by training deep learning models to recognize semantic patterns such as text, building outlines, and foliage. As noted by Qualcomm’s explanation of the Snapdragon 8 Elite AI ISP, these models operate directly on RAW-domain data, enabling cleaner reconstruction before aggressive noise reduction is applied.

This process results in zoomed images that feel optically coherent rather than artificially sharpened. Independent evaluations by GSMArena and Amateur Photographer observe that signage, window frames, and distant architectural details maintain legibility up to 10x, even though no dedicated 10x optical lens exists. The AI does not invent arbitrary detail but reinforces edges and contrast based on learned optical characteristics, which helps avoid the uncanny artifacts seen in earlier AI zoom implementations.

From a user perspective, this telephoto strategy emphasizes reliability. The camera dynamically selects the optimal lens and processing path without requiring manual intervention, which aligns with Samsung’s goal of reducing failed shots. In everyday scenarios such as travel or event photography, AI Zoom effectively bridges the gap between optical steps, ensuring that framing flexibility does not come at the cost of image credibility.

In this sense, the telephoto system of the Galaxy S25 Ultra should be understood not as a static set of lenses, but as a hybrid optical-computational platform. The hardware defines the boundaries, while AI Zoom continuously negotiates image quality within those limits, offering a zoom experience that is both practical and technically grounded.

What Is the ProVisual Engine and Why It Changes Image Processing

The ProVisual Engine is not a single camera feature but an end-to-end computational imaging framework that fundamentally redefines how a smartphone creates images. Instead of treating photography as a linear pipeline, it coordinates sensor behavior, AI-based analysis, and real-time image synthesis so that every shot is interpreted before it is finalized. This approach reflects a broader shift identified by Samsung Electronics and Qualcomm, where image quality is now driven as much by neural processing as by optics.

What makes the ProVisual Engine transformative is its ability to understand context, not just pixels. Immediately after light is converted into electrical signals by the sensor, the AI ISP and NPU inside Snapdragon 8 Elite for Galaxy begin parallel processing. Objects, lighting conditions, and motion are recognized at the RAW data stage, allowing noise reduction, tone mapping, and detail preservation to be applied selectively rather than uniformly.

The key change is that image processing decisions are made based on scene semantics, not fixed presets.

According to Samsung’s official technical briefings, this semantic processing enables more natural results in difficult scenarios such as backlit portraits or mixed lighting at night. Independent analysis by GSMArena also notes that this method reduces over-sharpening artifacts that were common in earlier computational photography systems.

| Aspect | Conventional ISP | ProVisual Engine |
|---|---|---|
| Processing model | Sequential | Parallel AI-driven |
| Scene awareness | Limited | Object-level recognition |
| Noise handling | Global | Region-specific |

This shift matters because it allows high-resolution sensors like the 200MP HP2 to be used flexibly without overwhelming users with complexity. The ProVisual Engine decides when resolution, sensitivity, or multi-frame synthesis should take priority, delivering consistent results across lighting conditions.

In practical terms, the ProVisual Engine changes image processing from reactive correction to proactive interpretation. That conceptual leap is why it represents a turning point in mobile photography, moving smartphones closer to how humans actually perceive scenes rather than how sensors mechanically record them.

Snapdragon 8 Elite for Galaxy: AI ISP and NPU in Action

The Snapdragon 8 Elite for Galaxy plays a central role in turning the Galaxy S25 Ultra’s camera hardware into an intelligent imaging system, and its AI ISP and NPU are where this transformation becomes visible in real-world use. Rather than acting as separate processing blocks, these components operate in parallel from the moment light is converted into electrical signals at the sensor level.

This tight integration allows image processing decisions to be made before traditional ISP pipelines would normally begin, fundamentally changing how noise reduction, exposure, and color are handled.

According to Qualcomm’s technical briefings on the Snapdragon 8 Elite platform, the AI ISP is designed to analyze RAW sensor data in real time, using machine-learned models instead of fixed mathematical formulas. This approach enables contextual awareness at the pixel level, which is particularly important for a 200MP sensor that produces enormous data volumes.

| Component | Primary Role | Practical Impact |
|---|---|---|
| AI ISP | Early-stage image analysis and noise control | Cleaner RAW data before demosaicing |
| NPU | Semantic recognition and scene understanding | Object-aware tone and texture optimization |
| CPU/GPU | Final rendering and user-facing processing | Smooth capture and preview experience |

What makes this pipeline distinctive is the handoff between the AI ISP and the NPU. Once initial noise suppression and signal stabilization are performed, the NPU immediately begins semantic segmentation, identifying elements such as sky, skin, foliage, text, and architectural edges. Samsung explains through its Newsroom disclosures that this object-aware understanding feeds directly into multi-frame fusion decisions.

In practice, this means that different parts of the same image are processed with different priorities, even before HDR compositing is finalized.

For example, in a backlit portrait, the AI ISP preserves highlight data in the sky while the NPU flags facial regions as high priority. Exposure stacking then favors skin-tone consistency without flattening cloud detail, a balance that traditional single-pass HDR often struggles to achieve.
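The backlit-portrait case can be pictured as a label-dependent blend of two exposures. The Python sketch below is a toy model under stated assumptions, not the ProVisual Engine's algorithm. The labels are handed in ready-made rather than produced by a segmentation network, the two exposures are assumed to be aligned and normalised to the 0 to 1 range, and the per-label weights in `LONG_EXPOSURE_WEIGHT` are invented for illustration.

```python
# Hypothetical weights for the longer (brighter) exposure, per semantic label.
# Faces lean on the bright frame; sky leans on the short frame to keep highlights.
LONG_EXPOSURE_WEIGHT = {"face": 0.8, "sky": 0.1, "other": 0.5}


def fuse_exposures(short, long, labels):
    """Blend two aligned exposures pixel by pixel using label-dependent weights."""
    fused = []
    for short_row, long_row, label_row in zip(short, long, labels):
        fused_row = []
        for short_value, long_value, label in zip(short_row, long_row, label_row):
            weight = LONG_EXPOSURE_WEIGHT[label]
            fused_row.append(weight * long_value + (1 - weight) * short_value)
        fused.append(fused_row)
    return fused


# One row with two pixels: bright sky on the left, shadowed face on the right.
short_frame = [[0.60, 0.05]]   # sky detail kept, face too dark
long_frame = [[1.00, 0.40]]    # sky clipped, face readable
label_map = [["sky", "face"]]
print(fuse_exposures(short_frame, long_frame, label_map))
```

The sky pixel stays close to the short exposure and the face pixel close to the long one, which is the per-region prioritisation described above in its simplest form.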

The NPU itself benefits from a reported roughly 40% uplift in AI throughput compared to the previous generation, as highlighted by Samsung and Qualcomm’s joint announcement for the Galaxy S25 series. This additional headroom enables more aggressive use of spatio-temporal analysis, especially in low-light scenes.

Instead of treating motion as a problem, the system classifies it as information. Static areas accumulate detail across multiple frames, while moving subjects are preserved with minimal blur.

This capability becomes particularly noticeable in night street photography, where passing cars, walking pedestrians, and illuminated signage coexist. Reviews from outlets such as GSMArena have noted that the S25 Ultra maintains background clarity without turning moving subjects into smeared artifacts, suggesting that temporal filtering is applied selectively rather than globally.

Another important aspect is how the Snapdragon 8 Elite for Galaxy is tuned specifically for Samsung’s ProVisual Engine. Industry observers, including SamMobile, point out that this is not a generic Snapdragon implementation. Clock adjustments are only part of the story; the more significant difference lies in how Samsung’s camera models are optimized to run efficiently on the NPU.

This co-design approach reduces latency between capture and final image generation, which directly affects shutter responsiveness and burst shooting reliability.

From a user perspective, the result is subtle but meaningful. The camera feels decisive, with less hesitation in complex lighting, and fewer cases where the final image diverges from what was shown in the preview. That consistency is a hallmark of a mature AI imaging pipeline.

In essence, the AI ISP and NPU inside the Snapdragon 8 Elite for Galaxy do not simply enhance image quality in isolation. They redefine the order and logic of image processing itself, allowing the Galaxy S25 Ultra to extract maximum value from its 200MP sensor without increasing physical size. This architectural shift explains why software updates can continue to improve output long after launch.

The camera evolves because the intelligence behind it was designed to scale, making this chipset-camera partnership one of the most consequential elements of the S25 Ultra’s imaging identity.

Night Photography and Video: How AI Handles Noise and Motion

Night photography and video are where smartphone cameras are most brutally tested, and this is precisely where the Galaxy S25 Ultra’s AI-driven approach shows its real strength. In low-light scenes, the challenge is not only brightness but also the delicate balance between noise suppression and motion fidelity. Samsung’s ProVisual Engine tackles this by analyzing both spatial detail and temporal change at the same time, rather than treating each frame in isolation.

At the core of night shooting is the combination of 200MP sensor binning and AI-based multi-frame fusion. When light levels drop, the main sensor automatically shifts into larger effective pixel modes, while the AI ISP on Snapdragon 8 Elite performs early-stage noise reduction directly on RAW data. According to Samsung Semiconductor documentation, this early intervention improves the signal-to-noise ratio before conventional demosaicing, which is critical for preserving faint textures in shadows.

What makes the S25 Ultra stand out is how it handles motion in dark scenes. Traditional night modes often rely on long exposures, which inevitably blur moving subjects. The S25 Ultra instead captures multiple short exposures and lets the NPU classify pixels as static or moving. Static regions receive aggressive noise reduction, while moving areas prioritize sharpness and edge integrity.

| Processing Target | AI Priority | Visual Result |
|---|---|---|
| Static background | Multi-frame noise averaging | Cleaner shadows with retained detail |
| Moving subject | Motion-aware sharpening | Reduced blur and clearer outlines |
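The static-versus-moving split in the table can be sketched in a few lines of Python. This is a deliberately simple stand-in for what the article attributes to the NPU. Instead of a learned classifier, it marks a pixel as moving when its value varies across frames by more than a threshold. The function name `fuse_frames`, the threshold, and the sample values are all hypothetical, and the frames are assumed to be already aligned.

```python
def fuse_frames(frames, motion_threshold):
    """Merge aligned short-exposure frames with a per-pixel static/moving decision.

    Static pixels (small variation across frames) are averaged to reduce noise;
    moving pixels keep the value from the reference frame to avoid ghosting.
    """
    reference = frames[0]
    rows, cols = len(reference), len(reference[0])
    fused = [[0.0] * cols for _ in range(rows)]
    moving_mask = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            samples = [frame[r][c] for frame in frames]
            if max(samples) - min(samples) > motion_threshold:
                moving_mask[r][c] = True
                fused[r][c] = float(reference[r][c])
            else:
                fused[r][c] = sum(samples) / len(samples)
    return fused, moving_mask


# Three short exposures of a 1x3 strip: two background pixels and one pixel
# where a bright object is present only in the first (reference) frame.
burst = [
    [[10, 12, 80]],
    [[12, 11, 20]],
    [[11, 13, 15]],
]
fused, moving = fuse_frames(burst, motion_threshold=5)
print(fused)
print(moving)
```

Averaging smooths the two background pixels, while the third pixel is flagged as moving and taken from the reference frame alone. That pixel stays sharp but receives no multi-frame noise reduction.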

This approach is particularly effective for night video. Qualcomm has highlighted that the new AI ISP can process temporal data in real time, allowing frame-by-frame noise control without introducing flicker. In practice, this means city lights remain stable, skin tones avoid chroma noise, and moving objects do not dissolve into smears. Independent camera reviews from outlets such as GSMArena have noted that handheld night video on the S25 Ultra shows noticeably less grain compared to earlier Galaxy generations.

The result is not artificially bright night footage, but footage that feels closer to human night vision. Highlights are protected, blacks remain deep, and motion stays readable. By letting AI decide where noise reduction should be strong and where it should be restrained, the Galaxy S25 Ultra turns night photography and video from a gamble into a consistently reliable experience.

Galaxy S25 Ultra vs Other Flagships: Camera Philosophy Compared

When comparing the Galaxy S25 Ultra with other flagship smartphones, the most striking difference lies not in specifications, but in camera philosophy. **Samsung positions the S25 Ultra as a computational-first camera**, where hardware serves as a rich data source and AI determines the final image. This approach contrasts sharply with competitors that still emphasize optical purity or sensor size as the primary driver of image quality.

Chinese flagships from brands such as Xiaomi and vivo often adopt 1-inch type sensors, prioritizing light-gathering capability and shallow depth of field. According to analyses frequently cited by publications like Amateur Photographer, this philosophy aims to replicate traditional camera physics within a smartphone body. Samsung, by contrast, deliberately avoids ultra-large sensors in favor of a 200MP platform that maximizes flexibility through pixel binning and multi-frame synthesis.

| Brand approach | Primary focus | Intended outcome |
|---|---|---|
| Samsung Galaxy S25 Ultra | High resolution + AI processing | Consistent results across scenes |
| Apple iPhone Pro | Color science + video pipeline | Natural tone and cinematic video |
| Chinese 1-inch models | Large sensor optics | Maximum raw light capture |

Apple’s iPhone Pro series represents yet another philosophy. Apple emphasizes color accuracy, temporal consistency, and video reliability. Reviews from TechRadar and GSMArena often note that Apple’s processing intervenes less aggressively in still images, preserving natural tones even if that means sacrificing punch. Samsung instead optimizes for perceptual clarity, using its ProVisual Engine to enhance textures and edges in ways that align with human visual memory.

**The Galaxy S25 Ultra treats photography as a data problem rather than an optical one.** With 200MP of information and a powerful AI ISP and NPU, the device analyzes scenes semantically before rendering the final image. Samsung Newsroom explains that object-aware processing allows skies, skin, foliage, and architecture to be tuned independently, something traditional pipelines struggle to achieve.

This philosophical divergence becomes clear in everyday use. Where a large-sensor phone excels in controlled lighting, the S25 Ultra aims to be reliable everywhere: night streets, extreme zoom, macro details, or high-contrast travel scenes. The result is not absolute optical purity, but a camera that prioritizes success rate and versatility, redefining what a flagship smartphone camera is meant to deliver.

Longevity, Software Updates, and What the S25 Ultra Means for the Future

When discussing longevity, the Galaxy S25 Ultra is best understood not as a snapshot of 2025 hardware, but as a long-term platform designed to mature over time. Samsung’s public commitment to seven years of OS and security updates places the S25 Ultra among a very small group of smartphones with a support window extending well into the early 2030s. According to Samsung Electronics and corroborated by major industry observers, this policy covers both major Android version upgrades and monthly or quarterly security patches, fundamentally changing how a flagship phone should be evaluated.

This extended software horizon directly reshapes the value proposition of the S25 Ultra. Traditionally, Android flagships delivered peak performance in their first two to three years, after which OS divergence and security concerns accelerated replacement cycles. With seven years guaranteed, the S25 Ultra aligns more closely with long-lived consumer electronics such as laptops or tablets, especially when its Snapdragon 8 Elite for Galaxy platform already exceeds the requirements of current Android workloads.

| Aspect | Galaxy S25 Ultra | Typical Pre-2020 Flagship |
|---|---|---|
| OS upgrade support | Up to 7 years | 2–3 years |
| Security updates | Up to 7 years | 3–4 years |
| Camera feature updates | Ongoing via ProVisual Engine | Rare or absent |

From a camera perspective, longevity is no longer tied solely to sensor replacement. The S25 Ultra’s imaging pipeline is heavily software-defined, and Samsung Newsroom has repeatedly emphasized that ProVisual Engine improvements are delivered post-launch through firmware and One UI updates. In practical terms, this means that noise reduction models, object recognition accuracy, and even video processing behaviors can improve without any hardware change. Independent reviews from outlets such as GSMArena have already noted measurable refinements in night photography and HDR consistency through updates released months after launch.

This software-centric evolution also signals what the S25 Ultra represents for the future of smartphones. Rather than chasing yearly sensor swaps, Samsung appears to be treating flagship hardware as a stable foundation upon which AI models are iteratively trained and deployed. Qualcomm’s documentation on the Snapdragon 8 Elite highlights that its NPU was designed with multi-year on-device AI workloads in mind, suggesting that features not yet mainstream in 2025 can realistically arrive on the same device later in its life cycle.

Industry analysts from publications such as Android Police interpret this approach as a transition toward an “AI-first OS era,” where user experience improvements are driven more by model updates than by silicon churn. For S25 Ultra owners, this implies that future Android versions will not merely maintain compatibility, but actively unlock new use cases in photography, video, and productivity that were not fully realized at launch.

Seen through this lens, the S25 Ultra functions as a bridge device. It marks a point where flagship smartphones stop being annual upgrades and start behaving like long-term personal platforms. For users who value durability, predictable updates, and evolving camera performance, this shift may prove more influential than any single hardware specification, quietly redefining expectations for the next generation of Galaxy devices.

References

- GSMArena: Samsung Galaxy S25 Ultra review: Camera, photo and video quality

- Samsung Semiconductor: ISOCELL HP2 | Mobile Image Sensor

- PhoneArena: Samsung did Galaxy S25 Ultra buyers a favor by not equipping it with the better HP9 camera

- Samsung Newsroom: Capture the Perfect Shot Every Time With Galaxy’s ProVisual Engine

- Qualcomm: Qualcomm and Samsung Redefine Premium Performance for the Galaxy S25 Series

- TechRadar: Flagship phone camera clash: iPhone 17 Pro vs Galaxy S25 Ultra