Have you ever noticed that your smartphone clearly receives notifications, yet no sound plays at all?

Many gadget enthusiasts around the world are experiencing this strange silence in 2026, even on the latest flagship devices.

If you rely on your phone for work, communication, or real-time alerts, this issue can feel both confusing and stressful.

This phenomenon is not caused by a single bug or a simple settings mistake.

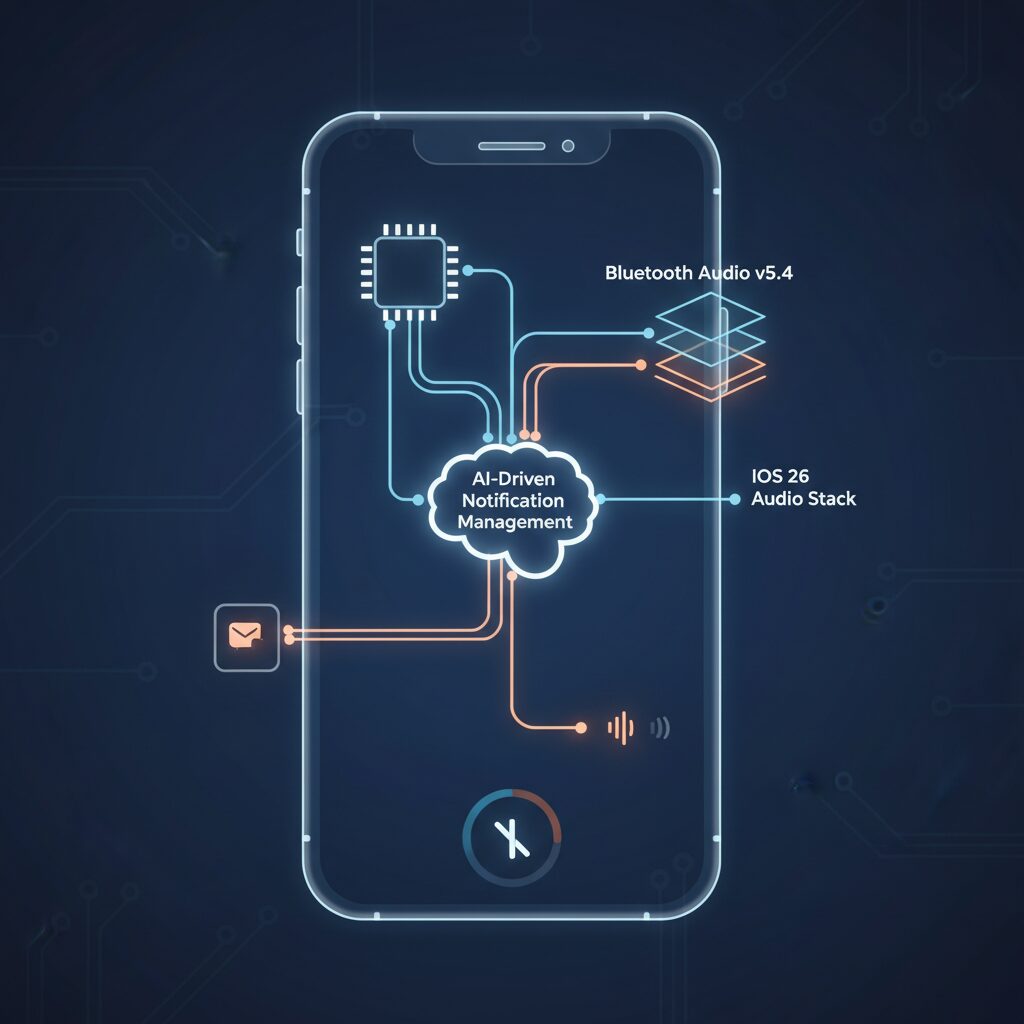

It is the result of multiple advanced technologies colliding, including AI-driven notification management, new Bluetooth audio standards, and increasingly complex operating system audio stacks.

As mobile platforms like iOS 26 and Android 16 mature, they are quietly redefining how, when, and even whether sounds should reach your ears.

By reading this article, you will gain a clear understanding of why notification sounds disappear, what role agentic UX plays in this shift, and how human psychology is influencing modern alert design.

You will also learn why silence is sometimes intentional, not accidental, and what this means for the future of mobile computing.

If you care about gadgets and want to stay ahead of hidden UX changes, this insight will be invaluable.

- The Global Rise of Silent Notifications in Modern Smartphones

- How iOS 26 Audio Architecture Creates Notification Sound Desynchronization

- Android 16 Notification Grouping and the Silent Alert Bug

- Bluetooth 6.0 and the New Complexity of Audio Routing

- AI-Driven Smart Focus and the Risks of Context-Based Muting

- Agentic UX and the Shift From User Control to AI Judgment

- Notification Fatigue and Scientific Evidence Behind Intentional Silence

- The Transition From Sound Alerts to Advanced Haptic Feedback

- What Silent Notifications Reveal About the Future of Human–Device Trust

- References

The Global Rise of Silent Notifications in Modern Smartphones

The global rise of silent notifications in modern smartphones has become one of the most paradoxical trends in mobile computing. **Notifications are technically delivered, yet users increasingly report that they never hear them.** This phenomenon is no longer limited to isolated bugs but is now observed across regions, devices, and operating systems.

According to user reports and analyses shared in Apple and Google developer communities, complaints accelerated after the global rollout of iOS 26 and Android 16. Both platforms prioritize intelligent notification management to combat alert fatigue, a concept long discussed in human–computer interaction research. As a result, sound is often the first element to be suppressed when the system judges an alert to be low urgency.

| Factor | Global Trend | User Impact |
|---|---|---|
| OS-level AI filtering | Enabled by default worldwide | Audible alerts reduced without explicit consent |
| Notification grouping | Standardized across apps | Only the first alert makes a sound |
| Multi-device routing | Phones, watches, earbuds | Sound plays on an unintended device |

Research into alert fatigue, including studies referenced by medical and academic institutions, shows that repeated audio alerts quickly lose effectiveness. **Systems now assume that silence is safer than interruption**, even for messages users might personally consider important.

Jakob Nielsen and other UX authorities have noted that smartphones are shifting from passive tools to decision-making agents. In this model, the device decides when silence is preferable, effectively redefining what a notification means on a global scale.

What makes this shift uniquely modern is its universality. From Tokyo to Berlin to San Francisco, users share the same experience: a phone that knows something arrived, but chooses not to speak. This silent consensus marks a defining moment in the evolution of mobile user experience.

How iOS 26 Audio Architecture Creates Notification Sound Desynchronization

In iOS 26, Apple fundamentally reworked its internal audio architecture to support more context-aware and AI-mediated experiences, but this evolution also introduced an unexpected side effect: notification sound desynchronization. This phenomenon occurs when notifications visually arrive as expected, yet the accompanying sound fails to play, even though system settings appear correct. The issue is not cosmetic but architectural, rooted in how iOS 26 now separates physical input states from software-level audio flags.

At the core of the problem is a mismatch between the system’s logical mute state and the actual hardware control, such as the Action Button introduced on recent iPhone Pro models. According to analyses shared in Apple Support Communities, iOS 26 maintains multiple parallel representations of “silence,” including Focus modes, AI-driven attention awareness, and legacy mute flags. **When these layers fall out of sync, the audio stack may continue treating notification sounds as volume zero, despite user-facing indicators showing sound as enabled.**
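The mismatch between layers can be illustrated with a toy model. This is a sketch under stated assumptions, not Apple's implementation: the `AudioState` class, its fields, and the `resync` method are hypothetical names, and the model assumes the audio stack consults a cached mute flag that is refreshed only on an explicit resync.

```python
from dataclasses import dataclass


@dataclass
class AudioState:
    """Hypothetical model of parallel "silence" layers (not a real iOS API)."""

    hardware_muted: bool = False   # what the Action Button or switch reports
    focus_active: bool = False     # a Focus mode is suppressing alerts
    cached_muted: bool = False     # copy the audio stack actually consults

    def ui_shows_sound_enabled(self) -> bool:
        # The user-facing indicator reflects the live layers only.
        return not (self.hardware_muted or self.focus_active)

    def notification_volume(self, requested: float) -> float:
        # The audio stack reads the cached flag, which may be out of date.
        return 0.0 if self.cached_muted else requested

    def resync(self) -> None:
        # Recompute the cached flag from the live layers (e.g. after a reboot).
        self.cached_muted = self.hardware_muted or self.focus_active


state = AudioState(hardware_muted=True)
state.resync()                 # cached flag now matches the hardware: muted
state.hardware_muted = False   # user unmutes, but the cache is not refreshed

# Settings look correct, yet the effective notification volume is zero.
desynced = state.ui_shows_sound_enabled() and state.notification_volume(0.8) == 0.0

state.resync()
recovered_volume = state.notification_volume(0.8)
```

In this model the sound returns only after `resync()` runs, which mirrors the reports that a reboot restores audio without any settings change.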

This desynchronization often emerges several hours or days after an OS update. Field reports collected in early 2026 describe a consistent pattern: devices function normally immediately after updating to iOS 26 or 26.1, but notification sounds from high-traffic apps such as Gmail, LINE, or Messenger disappear after prolonged uptime. Engineers cited by The Mac Observer attribute this behavior to memory management issues in the audio driver layer, where stale mute states are not garbage-collected correctly.

| Observed Symptom | Underlying Audio Layer Behavior | User-Visible State |
|---|---|---|
| Notifications arrive silently | Software mute flag stuck at zero volume | Sound enabled in Settings |
| Silent alarms | Attention-Aware logic suppresses output | Alarm scheduled and active |
| Sound returns after reboot | Audio routing stack fully reinitialized | No settings changed |

The introduction of the Action Button further complicates this behavior. Unlike the older mechanical mute switch, the Action Button triggers a software-defined path that must be interpreted by iOS before affecting audio output. Multiple user experiments, documented on Reddit and Apple forums, suggest that rapidly toggling this button several times prompts the OS to rescan its audio state. **The fact that such a physical ritual can “wake up” notification sounds strongly suggests delayed polling or race conditions within the audio routing stack.**

Apple has not publicly released a detailed technical postmortem, but references in developer discussions indicate that iOS 26’s audio subsystem prioritizes resilience and AI arbitration over immediacy. Jakob Nielsen and other UX researchers have noted that modern operating systems increasingly assume silence as the safer default when confidence in context is low. In iOS 26, that philosophy manifests as conservative audio suppression when the system detects ambiguity between hardware intent and inferred user focus.

From a user perspective, this creates the unsettling impression that the device is unreliable. From an architectural standpoint, however, it reflects a system struggling to reconcile legacy interaction models with agentic UX principles. **Notification sound desynchronization in iOS 26 is therefore less a single bug than a visible crack in an increasingly complex audio decision pipeline**, one that Apple will likely need several incremental updates to fully stabilize.

Android 16 Notification Grouping and the Silent Alert Bug

In Android 16, notification management has taken a more aggressive turn with enhanced Notification Grouping, and this is where a subtle but serious silent alert bug has emerged. The intention is clear: reduce notification overload by intelligently bundling alerts. However, in practice, this mechanism can unintentionally suppress sounds that users rely on for time-sensitive communication.

The core of the issue lies in how Android 16 automatically assigns a system-level group key called `g:Aggregate_AlertingSection` when an app does not explicitly define its own group ID. According to analyses shared by developers and tracked in a GitHub issue, once a second notification joins an existing auto-generated group, the system may forcibly mark it as SILENT, even if sound is enabled at both the app and OS level.

| Condition | System Behavior | User Impact |
|---|---|---|
| No explicit group ID | Aggregate key applied automatically | Notifications bundled without clear priority |
| Second alert in same group | SILENT flag forced | Sound does not play |
| High-frequency messages | Cooldown logic overlaps grouping | Important alerts missed |
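The reported grouping behavior can be reproduced in a small simulation. This is a toy model of the symptoms described above, not Android source code: the `Notification` and `Shade` classes are hypothetical, and only the group key string comes from the reports.

```python
from dataclasses import dataclass
from typing import Optional

AUTO_GROUP_KEY = "g:Aggregate_AlertingSection"  # key named in the reports


@dataclass
class Notification:
    app: str
    text: str
    group: Optional[str] = None   # None means the app set no explicit group ID
    silent: bool = False


class Shade:
    """Toy notification shade reproducing the reported behavior."""

    def __init__(self) -> None:
        self.active_groups = set()  # (app, group) pairs currently shown

    def post(self, n: Notification) -> Notification:
        if n.group is None:
            n.group = AUTO_GROUP_KEY
            key = (n.app, n.group)
            # Reported bug: a later alert joining an auto-generated
            # group is forced silent.
            if key in self.active_groups:
                n.silent = True
            self.active_groups.add(key)
        return n

    def dismiss_group(self, app: str, group: str) -> None:
        self.active_groups.discard((app, group))


shade = Shade()
first = shade.post(Notification("chat", "hi"))
second = shade.post(Notification("chat", "are you there?"))
explicit = shade.post(Notification("chat", "urgent", group="thread-42"))

shade.dismiss_group("chat", AUTO_GROUP_KEY)
after_dismiss = shade.post(Notification("chat", "hello again"))
```

Here `first` rings, `second` is silenced, and `after_dismiss` rings again once the group has been cleared, matching the pattern in the GitHub report. The explicit group in this model simply bypasses the auto-grouping path; whether that avoids the real bug is an assumption.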

This behavior is most noticeable in real-time messaging apps such as WhatsApp and Telegram, where only the first message triggers an audible alert, while subsequent messages arrive quietly within the grouped notification. Google’s own issue trackers and Pixel community discussions indicate that this affects devices from Pixel 7 through Pixel 10, suggesting an OS-level flaw rather than a device-specific anomaly.

What makes this bug particularly confusing is that it can persist even when users disable notification cooldown features or set global notification volume to maximum. Android’s adaptive logic appears to override manual intent, prioritizing its interpretation of notification fatigue over explicit user settings. UX researchers have long warned about this trade-off, and organizations like the Nielsen Norman Group have noted that over-automation without transparency erodes user trust.

As a temporary workaround, power users and developers recommend manually setting notification channels to High priority within each affected app, ensuring that sound is explicitly requested for every alert. Google has acknowledged the issue and suggested that a corrective patch is planned for early 2026, but until then, users are effectively caught between smarter notification design and the risk of missing critical information.

The Android 16 silent alert bug is not just a technical glitch; it is a clear example of how well-intentioned UX optimization can conflict with fundamental expectations. For users who depend on audible cues, understanding this grouping behavior is now essential to maintaining reliable communication.

Bluetooth 6.0 and the New Complexity of Audio Routing

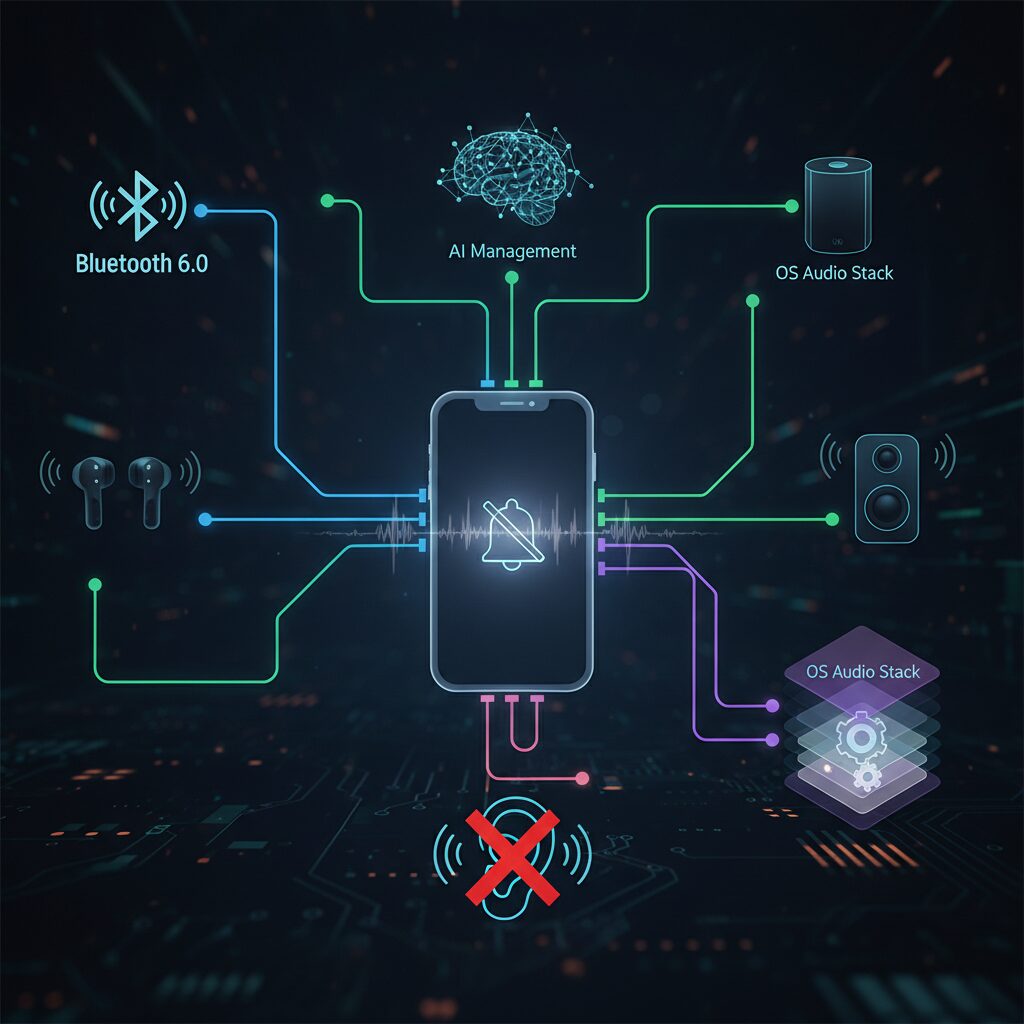

Bluetooth 6.0 has quietly transformed audio routing from a simple switch into a context-aware, policy-driven decision system. While the standard promises higher precision and lower latency, it also introduces new failure modes where notification sounds are technically played, yet never perceived by the user. For gadget enthusiasts, this shift explains why “silent notifications” have become more common even on flagship devices.

At the core is Channel Sounding, a Bluetooth 6.0 feature that uses phase-based ranging to estimate distance with centimeter-level accuracy. According to analyses published by organizations closely following Bluetooth SIG developments, operating systems now infer whether earbuds are worn, nearby, or simply left on a desk. When the system misreads proximity, notification audio is routed exclusively to wireless earbuds that are not actually in use, leaving the phone speaker silent.

This behavior is not random. Once received signal strength crosses a defined threshold, the OS automatically switches into a personal listening policy. In real-world environments with reflections or interference, RSS values can spike even when earbuds are idle. As a result, users hear nothing, while the system believes it made the correct, privacy-respecting decision.
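A minimal sketch shows why a single threshold is fragile. The threshold value, function names, and sample readings below are all hypothetical. The second function is a common smoothing approach, included only for contrast; nothing in the reports indicates that any platform routes audio this way.

```python
from typing import Sequence

PERSONAL_LISTENING_THRESHOLD_DBM = -60.0  # hypothetical threshold


def route(rss_dbm: float,
          threshold: float = PERSONAL_LISTENING_THRESHOLD_DBM) -> str:
    """Pick an output device from a single RSS sample."""
    return "earbuds" if rss_dbm >= threshold else "speaker"


def route_debounced(samples: Sequence[float], needed: int = 3,
                    threshold: float = PERSONAL_LISTENING_THRESHOLD_DBM) -> str:
    """Switch to earbuds only if the last `needed` samples all pass."""
    recent = samples[-needed:]
    if len(recent) == needed and all(s >= threshold for s in recent):
        return "earbuds"
    return "speaker"


# Earbuds idle on a desk: weak signal, then one reflection-induced spike.
samples = [-75.0, -74.0, -58.0]
naive = route(samples[-1])            # reacts to the spike alone
debounced = route_debounced(samples)  # requires a sustained signal
```

With these readings the single-sample rule sends the alert to the idle earbuds, while the smoothed rule keeps it on the phone speaker.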

| Bluetooth 6.0 Feature | Intended Benefit | Observed Side Effect |
|---|---|---|
| Channel Sounding | Accurate proximity detection | Audio routed to unworn earbuds |
| ISOAL Enhancement | Low-latency audio delivery | Notification attack clipped or lost |
| Adaptive Advertising | Reduced power consumption | Delayed reconnection for alerts |

ISOAL enhancements add another layer of complexity. Bluetooth 6.0 segments and reconstructs audio more aggressively to minimize delay. However, engineers have observed timing mismatches between notification sound generators and Bluetooth frame updates. When the first few milliseconds of a short alert are dropped, the sound can become effectively inaudible, especially with third-party earbuds or legacy in-car systems.

Industry commentators at ZDNET and similar publications note that these issues are not traditional bugs but emergent properties of smarter routing. The system optimizes for audio quality, privacy, and power efficiency, sometimes at the expense of reliability. In 2026, hearing a notification is no longer guaranteed by volume alone; it depends on how accurately Bluetooth 6.0 interprets human intent in a noisy physical world.

AI-Driven Smart Focus and the Risks of Context-Based Muting

AI-driven Smart Focus has been introduced as a remedy for notification overload, but in practice it often becomes a new source of silence that users cannot easily explain or control. In 2026, both iOS 26 and Android 16 rely on agentic UX, where AI continuously infers context from calendars, sensors, and behavioral history, and then decides whether a sound should be played or muted. **The critical shift is that notifications are no longer governed by explicit user rules, but by probabilistic judgments made by the system.** This design choice fundamentally changes the trust relationship between user and device.

Smart Focus systems typically aggregate signals such as motion patterns, microphone input, camera-based gaze detection, and historical response data. According to CMSWire’s 2026 UX analysis, these models calculate a real-time “focus score” and dynamically suppress alerts when the score exceeds a certain threshold. While this improves perceived productivity, it introduces a failure mode where urgency is misclassified. **When AI overfits past behavior, it may conclude that certain contacts or apps are rarely urgent, even when the current message is critical.** Users then experience what feels like an inexplicable bug, even though the system is operating as designed.
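The thresholding logic can be sketched as a weighted score. Everything here is an assumption made for illustration: the signal names, the weights, and the threshold are hypothetical, and real systems do not publish their models.

```python
from dataclasses import dataclass

# Hypothetical weights and threshold, chosen only for illustration.
WEIGHTS = {"in_meeting": 0.5, "stillness": 0.3, "low_reply_rate": 0.2}
SUPPRESS_THRESHOLD = 0.6


@dataclass
class Context:
    in_meeting: float      # 1.0 if the calendar shows a meeting, else 0.0
    stillness: float       # 0.0 (moving) to 1.0 (stable posture)
    low_reply_rate: float  # 1.0 minus the historical reply rate to the sender


def focus_score(ctx: Context) -> float:
    """Weighted sum of context signals, in the range 0.0 to 1.0."""
    return sum(WEIGHTS[name] * getattr(ctx, name) for name in WEIGHTS)


def should_play_sound(ctx: Context) -> bool:
    # Sound is suppressed once the score exceeds the threshold.
    return focus_score(ctx) <= SUPPRESS_THRESHOLD


# A rarely answered sender writes during a meeting.
busy = Context(in_meeting=1.0, stillness=0.8, low_reply_rate=0.9)
busy_score = focus_score(busy)
busy_plays = should_play_sound(busy)

# The same user walking around with no meeting scheduled.
idle = Context(in_meeting=0.0, stillness=0.2, low_reply_rate=0.1)
idle_plays = should_play_sound(idle)
```

Note that nothing in the score depends on what the message says. A critical one-off message from a rarely answered sender is muted exactly like a trivial one, which is the misclassification described above.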

Jakob Nielsen and other UX authorities have warned that this moment marks the beginning of “agent versus agent” interaction. The user’s defensive AI attempts to protect attention, while enterprise or social apps deploy their own logic to break through filters. In this tug-of-war, context-based muting often errs on the side of silence. From the user’s perspective, the device appears unreliable, because the reasoning behind the mute decision is hidden deep inside the agent’s inference layer.

| Context Signal | AI Interpretation | Observed Risk |
|---|---|---|
| Calendar marked as meeting | User should not be interrupted | High-priority calls muted unintentionally |
| Low historical response rate | Sender is non-urgent | Misclassification of one-off critical messages |
| Stable posture and low movement | Deep focus state | Extended silent periods without user awareness |

Another layer of complexity comes from tone-aware interfaces, where notification sounds are no longer static assets but AI-generated tones. These tones are modulated by perceived importance and ambient noise. Research cited by CMSWire shows that dynamically generated sounds can reduce annoyance, yet **they also remove consistency, preventing the brain from learning a clear association between a specific sound and an incoming alert.** As a result, users often report that “no sound played,” when in reality a subtle, context-optimized tone was emitted but not cognitively registered.

This phenomenon aligns with findings from alert fatigue research in medical and academic fields. Studies summarized by Nextech and ResearchGate demonstrate that repeated exposure to alerts reduces responsiveness through desensitization. OS vendors justify aggressive muting as a well-being feature, but **Smart Focus crosses a delicate boundary when protective silence overrides user intent.** The system’s goal of minimizing stress can conflict with the user’s expectation of reliability, especially for communication tools tied to work or family.

The core risk of context-based muting is therefore not technical malfunction, but opacity. Users are rarely told why a specific alert was silenced. UX trend analyses from UX Tigers emphasize that without transparency, users attribute silence to bugs rather than AI decisions, eroding confidence in the platform. **When a device cannot clearly explain its own behavior, advanced intelligence paradoxically feels less trustworthy than simpler rule-based systems.**

In this AI-driven era, Smart Focus demonstrates both the promise and peril of agentic UX. It successfully reduces noise, yet it also introduces moments where silence carries unintended consequences. Understanding that these silences are often intentional, probabilistic choices rather than errors is essential for power users, developers, and designers alike, as they navigate the evolving balance between automation and control.

Agentic UX and the Shift From User Control to AI Judgment

By 2026, Agentic UX has fundamentally changed how people interact with their devices, shifting authority from explicit user control to AI-driven judgment. Instead of users deciding which notifications should sound, when, and how, the operating system now acts as an autonomous agent that interprets context and makes those decisions on the user’s behalf. **This transition is not cosmetic; it rewires the power balance between human intention and machine inference.**

The core promise of Agentic UX is cognitive relief. Research on alert fatigue, frequently cited by UX strategists and human–computer interaction scholars, shows that excessive notifications reduce responsiveness and well-being. According to studies discussed in medical and academic contexts, every additional alert lowers the probability of meaningful response, a finding that OS designers now treat as justification for aggressive AI filtering. As a result, modern systems increasingly prioritize what the AI believes matters, not what the user has explicitly allowed.

This logic is embodied in features such as Smart Focus and adaptive silent modes. These systems combine sensor data, calendar context, behavioral history, and communication patterns to estimate a user’s current cognitive load. When the AI concludes that attention should not be interrupted, notification sounds are suppressed automatically, even if settings indicate they are enabled. **From the user’s perspective, this feels like a bug; from the system’s perspective, it is a protective intervention.**

| UX Paradigm | Decision Authority | Notification Logic |
|---|---|---|
| Rule-based UX | User | Static settings and manual priorities |
| Agentic UX | AI system | Context inference and probabilistic judgment |

A critical side effect of this shift is the erosion of predictability. Jakob Nielsen and other UX authorities have warned that users build trust through consistency. However, AI-generated behaviors, including dynamic notification tones and context-sensitive silencing, deliberately break consistency to optimize relevance. When notification sounds are dynamically generated or altered, the human brain struggles to associate a specific sound with urgency, leading to the widespread perception that notifications “did not ring” at all.

What makes Agentic UX especially contentious is that its decisions are often invisible. Without clear explanations, users cannot tell whether silence is caused by a system error, a connectivity issue, or an AI judgment call. **This opacity transforms silence into a UX failure, even when the system is functioning exactly as designed.** Leading UX research outlets argue that transparency, not additional intelligence, is now the limiting factor in Agentic UX adoption.

Ultimately, the move from user control to AI judgment reflects a broader belief held by OS vendors in 2026: that machines are better suited than humans to manage attention. Yet the notification sound problem demonstrates the fragility of this assumption. When AI becomes the arbiter of urgency, silence is no longer neutral. It is an active decision, one that directly shapes trust, agency, and the perceived reliability of modern computing.

Notification Fatigue and Scientific Evidence Behind Intentional Silence

Notification fatigue is not only a UX problem but also a well-documented cognitive phenomenon. Recent studies in human factors and behavioral science show that when users are exposed to frequent alerts, their brains gradually reduce responsiveness to the stimulus. This process, known as desensitization, explains why people often feel that notifications are no longer ringing, even when they technically are.

Research cited by clinical informatics organizations reports that in high-alert environments such as hospitals, excessive notifications increase error rates by more than 14 percent. Similar patterns appear in consumer devices. A 2025 mixed-methods study of university students found that attention disruption, rather than notification volume itself, was the strongest predictor of stress and reduced well-being.

From the perspective of neuroscience, the auditory system prioritizes novelty. When alert sounds become repetitive or predictable, the brain categorizes them as low-value background noise. This is why modern operating systems increasingly suppress sounds by default, especially during focused activity.

| Scientific Concept | Observed Effect | Implication for Notifications |
|---|---|---|
| Desensitization | Reduced neural response to repeated stimuli | Alerts feel quieter or unnoticed |
| Attention Fragmentation | Lower task performance and higher stress | Systems favor intentional silence |

UX researchers such as Jakob Nielsen have pointed out that intelligent silence can improve trust when it is predictable and transparent. The real challenge is not eliminating sound, but aligning notification behavior with human cognitive limits so that when a sound does occur, it truly matters.

The Transition From Sound Alerts to Advanced Haptic Feedback

For decades, notification design has relied primarily on sound alerts, but this approach is increasingly reaching its limits in 2026. As mobile environments become quieter and more context-aware, sound is often suppressed by AI-driven focus modes, Bluetooth routing, or social etiquette. In response, advanced haptic feedback is being positioned as a more reliable and personal channel for critical alerts.

Haptics are not merely a fallback for silent mode; they are now treated as a first-class communication layer. According to UX research cited by UX Studio and CMSWire, tactile notifications show higher recognition rates under cognitive load than audio cues, particularly in public or work-focused settings. This shift reflects a deeper understanding of human perception, where touch delivers information without competing for auditory attention.

| Notification Method | Primary Strength | Key Limitation |
|---|---|---|
| Sound alerts | Immediate awareness | Easily muted or rerouted |
| Basic vibration | Discreet signaling | Low semantic richness |
| Advanced haptics | Contextual, private feedback | Requires user learning |

Modern devices now employ vibrotactile patterns driven by linear resonant actuators (LRAs), surface haptics, and even ultrasonic mid-air feedback to encode urgency and meaning. Studies referenced by MDPI indicate that differentiated haptic patterns improve task response accuracy, even when visual and audio channels are compromised. This evidence explains why OS designers increasingly favor touch-based alerts for high-priority notifications.

Importantly, this transition also addresses notification fatigue. Because haptics operate within the user’s personal space, they reduce social friction and cognitive stress. As a result, users are more likely to perceive alerts as intentional rather than intrusive, marking a decisive evolution from sound-centric design toward a quieter yet more dependable interaction model.

What Silent Notifications Reveal About the Future of Human–Device Trust

Silent notifications do more than cause missed messages; they quietly reshape how trust between humans and devices is formed. In 2026, as agentic UX becomes mainstream, users increasingly rely on systems that decide when to speak and when to stay silent. **This silence is interpreted not as neutrality, but as intent**, forcing users to question whether their devices are acting in their best interest.

According to human–computer interaction research frequently cited by organizations such as the Nielsen Norman Group, trust in digital systems is built on predictability and explainability. When notifications arrive visually but without sound, users perceive a breakdown in this contract. The device appears unreliable, even if the silence is technically deliberate.

| Notification Behavior | User Interpretation | Trust Impact |
|---|---|---|
| Consistent audible alerts | System is dependable | Trust reinforced |
| Context-aware silence | System is judging context | Conditional trust |
| Unexplained silence | System is malfunctioning | Trust erosion |

Studies on alert fatigue in medical and enterprise environments have shown that excessive alerts reduce response rates, which partly justifies AI-driven silence. However, **silence without explanation shifts cognitive burden back to the user**, who must now verify logs, screens, or secondary devices to confirm reality.

Experts in adaptive systems design argue that future trust will depend less on raw accuracy and more on transparency. If an AI suppresses a sound, users expect to know why. Without that feedback loop, even well-intentioned automation feels like loss of control.
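One way to picture such a feedback loop is a record that pairs every suppressed sound with a human-readable reason. This is a design sketch only: the `Reason` categories and the `SilenceRecord` type are hypothetical and do not correspond to any existing platform API.

```python
from dataclasses import dataclass
from enum import Enum


class Reason(Enum):
    """Hypothetical categories for why a sound was withheld."""

    FOCUS_MODE = "A Focus mode was active"
    GROUPED = "Alert was merged into an existing group"
    ROUTED_ELSEWHERE = "Sound was sent to another device"


@dataclass(frozen=True)
class SilenceRecord:
    app: str
    reason: Reason
    detail: str = ""

    def explain(self) -> str:
        # Build a short sentence the user could read in a notification log.
        suffix = f" ({self.detail})" if self.detail else ""
        return f"{self.app}: sound suppressed. {self.reason.value}{suffix}."


log = [
    SilenceRecord("Mail", Reason.FOCUS_MODE, "Work"),
    SilenceRecord("Chat", Reason.ROUTED_ELSEWHERE),
]
messages = [record.explain() for record in log]
```

Surfacing entries like these would let a user tell a deliberate mute apart from a malfunction without checking secondary devices.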

Silent notifications therefore act as an early signal of a broader transition: devices are no longer passive tools but autonomous partners. **Whether this partnership succeeds will depend on how clearly systems communicate their silence**, not just their sound.

References

- Apple Support Communities: No notification sounds after new iOS 26 update on my iPhone

- GitHub: Android 16: subsequent notifications not ringing unless the notification group is dismissed

- ZDNET: What is Bluetooth 6.0? Why the newest audio connectivity standard is such a big deal

- CMSWire: 2026: The Year User Experience Finally Rewrites the Rules of AI

- UX Tigers: 18 Predictions for 2026

- ResearchGate: Alert Fatigue and Smartphone Notifications: A Mixed-methods Study