If you have upgraded to a flagship HDR TV or monitor recently and felt that it does not look dramatically different, you are not alone. In 2026, peak brightness has surged from 1,000 nits to as high as 10,000 nits, and color gamut coverage is approaching full Rec.2020. On paper, the progress is astonishing.

Yet many enthusiasts say the real-world impact feels subtle. Even with Micro RGB backlights, SQD Mini LED with over 20,000 local dimming zones, and Tandem OLED panels exceeding 3,000 nits, the perceived jump in image quality is smaller than expected.

In this article, you will discover why HDR has reached a perceptual plateau, how the JOD model explains the limits of human vision, what Panasonic and Sharp are doing with AI-driven environmental optimization, and how Windows 11 and Netflix’s Dolby Vision ecosystem quietly shape what you actually see. By the end, you will understand why the future of HDR is not about brighter screens, but about transparent technology that preserves creative intent while adapting invisibly to your environment.

- The HDR Paradox of 2026: Higher Specs, Smaller Visual Impact

- From Nits to JOD: Why Human Vision Sets a Hard Limit

- The Plateau Effect: Psychophysical Evidence Behind HDR Saturation

- Micro RGB and SQD Mini LED: Reinventing LCD Beyond Brightness Wars

- Tandem OLED’s Comeback: Multi-Stack Efficiency and 3,000-Nit Milestones

- When Hardware Converges: Why LCD and OLED Now Look More Alike

- Panasonic’s Auto AI and Ambient Light Intelligence Explained

- Sharp’s Spatial Recognition AI: Depth as the New Dynamic Range

- Windows 11, Auto HDR, and the OS-Level Bottleneck

- Netflix, Dolby Vision, and the Power of Creative Intent Metadata

- Tone Mapping in the 10,000-Nit Era: Protecting Midtones Over Highlights

- Energy Regulations and Real-World Brightness Constraints

- From Spectacle to Infrastructure: The Rise of Invisible Display Technology

- References

The HDR Paradox of 2026: Higher Specs, Smaller Visual Impact

In 2026, HDR displays have reached a striking paradox. Peak brightness that once defined the high end—1,000 nits—is now common in midrange models, while flagship TVs claim 3,000 to 10,000 nits. Yet many enthusiasts say the visual leap feels smaller than expected. Higher specs no longer guarantee higher impact, and the reason is rooted in human perception, not marketing.

Recent perceptual research using the Just-Objectionable-Difference (JOD) model shows why. JOD quantifies the smallest visible difference in image quality a human can reliably detect. According to psychophysical studies cited in industry analysis, improvements from 500 to 1,000 nits produce a clear jump in perceived quality. But once displays exceed roughly 2,000 nits, gains enter a plateau. At high luminance levels the eye follows Weber’s law, responding to relative rather than absolute changes, so its response is closer to logarithmic than linear.

| Upgrade Range | Physical Gain | Perceptual Impact (JOD) |
|---|---|---|
| 500 → 1,000 nits | 2× brightness | Large, clearly visible improvement |
| 2,000 → 4,000 nits | 2× brightness | Marginal, plateau-level improvement |
| 4,000 → 10,000 nits | 2.5× brightness | Subtle in typical living-room viewing |

This plateau effect explains why a 2026 flagship can look surprisingly similar to a 2024 premium model in everyday content. Even if peak luminance doubles on paper, the perceptual delta may rise only fractions of a JOD point. In other words, the bottleneck has shifted from hardware capability to human biology.

There is also a trade-off. Studies indicate that high peak brightness combined with insufficient black-level control can reduce perceived quality due to halo artifacts and visual discomfort. Under the de Vries–Rose law, detection thresholds in dim regions scale with the square root of luminance, so the eye stays relatively sensitive to small errors near black and black-level precision remains critical. If local dimming lags behind brightness escalation, the net experience can stagnate or even degrade.

Another overlooked factor is usage context. In typical Japanese and global living rooms, average picture level (APL) remains moderate due to energy standards and content mastering practices. As seen in recent manufacturer disclosures, peak brightness is often reserved for small highlights and brief moments. A 10,000-nit capability does not mean the screen sustains that output across the frame. The perceptual result is stability rather than spectacle.

At CES 2026, several experts emphasized that extreme brightness is primarily headroom for specular highlights—sun glints, sparks, reflections—not a mandate for overall luminance inflation. Modern tone-mapping engines prioritize midtone preservation to protect texture and avoid fatigue. Instead of pushing everything brighter, processors selectively allocate dynamic range where it matters most.
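
The idea of protecting midtones and spending headroom only on highlights can be sketched as a simple knee-based roll-off. This is a minimal illustration, not any manufacturer's actual tone-mapping engine: the function name `roll_off`, the 75% knee position, and the hyperbolic shoulder are all assumptions chosen for clarity.

```python
def roll_off(nits: float, display_peak: float, knee_fraction: float = 0.75) -> float:
    """Map a scene luminance (nits) to a display luminance (nits).

    Below the knee the signal passes through unchanged, so midtones keep
    their graded level. Above the knee a hyperbolic shoulder compresses
    highlights so they approach, but never exceed, the display peak.
    The knee position is an illustrative assumption.
    """
    knee = knee_fraction * display_peak
    if nits <= knee:
        return nits  # midtones and shadows are left untouched
    headroom = display_peak - knee   # range available for highlights
    excess = nits - knee             # how far the input is above the knee
    # Slope is 1 at the knee and falls toward 0 as the input grows.
    return knee + excess / (1.0 + excess / headroom)


# Hypothetical 1,000-nit display showing content graded up to 10,000 nits.
for scene_nits in (50, 200, 750, 1000, 4000, 10000):
    mapped = roll_off(scene_nits, display_peak=1000)
    print(f"{scene_nits:>6} nits in -> {mapped:7.1f} nits out")
```

In this sketch everything at or below 750 nits is reproduced exactly, while inputs of 4,000 and 10,000 nits end up only a few nits apart near the display peak, which is the "selective allocation" the paragraph describes.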

The consequence is subtle but profound. HDR in 2026 is less about shock value and more about consistency. As ecosystems standardize around Dolby Vision mastering guidelines—typically graded at 1,000 to 4,000 nits with strict metadata control—even ultra-bright displays follow creative intent rather than maximizing output. The ceiling rises, but the visible floor remains carefully managed.

This is the HDR paradox of 2026: hardware evolution continues at full speed, yet perceived transformation slows. The industry has reached a maturity point where engineering progress outpaces sensory differentiation. For gadget enthusiasts chasing numbers, that may feel anticlimactic. From a perceptual science standpoint, however, it signals something else entirely—the beginning of HDR as a refined, transparent infrastructure rather than a headline-grabbing feature.

From Nits to JOD: Why Human Vision Sets a Hard Limit

Peak brightness numbers look spectacular on spec sheets, but human vision does not scale linearly with nits. As recent psychophysical research has emphasized, the key metric is no longer cd/m² but JOD, or Just-Objectionable-Difference. JOD quantifies the smallest perceptual step at which viewers can reliably say, “this looks different.”

According to studies published between 2025 and 2026, brightness improvements deliver strong JOD gains only up to a certain threshold. Beyond that point, the perceptual curve flattens. This is the hard biological ceiling that even 10,000-nit panels cannot break.

Human vision follows logarithmic and square-root laws, not marketing curves. Once displays exceed mid-range HDR brightness, each additional 1,000 nits produces diminishing perceptual returns.

The underlying model combines two classic visual principles. At low luminance, sensitivity follows the de Vries–Rose law, where detection thresholds scale with the square root of light intensity. At higher luminance, perception aligns with Weber’s law, meaning we respond to relative—not absolute—changes. In practice, doubling brightness does not double perceived impact.

| Upgrade Step | Physical Increase | Perceptual Impact (JOD Trend) |
|---|---|---|
| 500 → 1,000 nits | 2× | Large, clearly visible improvement |
| 2,000 → 4,000 nits | 2× | Minor, often subtle improvement |
| 4,000 → 10,000 nits | 2.5× | Marginal in typical content |

This plateau effect explains why many enthusiasts upgrading from a 1,500-nit TV to a 3,000-nit or even brighter flagship report limited real-world difference. The panel is objectively superior, yet the visual system compresses that gain.

Research also shows a negative interaction when peak brightness rises without equally deep blacks. Elevated black levels reduce contrast sensitivity, and halo artifacts further erode perceived realism. In such cases, JOD scores can stagnate or even decline despite higher peak output.

Another constraint is comfort. Extremely bright highlights, particularly in near-field displays such as VR headsets, can increase visual fatigue. Studies referenced in HDR perception research indicate that sustained exposure to intense luminance spikes reduces subjective satisfaction, even when technical dynamic range expands.

The eye optimizes for stability and contrast relationships, not raw luminance extremes. That is why perceptual alignment, not peak brightness inflation, now defines meaningful HDR progress. Once a display reaches sufficient headroom for specular highlights, additional nits become statistical margin rather than transformative experience.

In 2026, the bottleneck is no longer panel engineering alone. It is the physiology of the human visual system. JOD modeling makes this limit measurable—and unmistakable.

The Plateau Effect: Psychophysical Evidence Behind HDR Saturation

Why does a jump from 1,000 nits to 4,000 or even 10,000 nits often feel underwhelming in real life? Psychophysical research over 2025–2026 points to what experts call a perceptual plateau. The key insight is that human vision does not scale linearly with luminance, even though display specifications do.

Recent studies using the Just-Objectionable-Difference (JOD) model quantify how much visible improvement users can actually perceive when brightness and contrast increase. JOD represents the smallest quality difference that observers reliably detect in controlled experiments. According to analyses summarized in 2026 HDR research, luminance gains deliver strong perceptual impact up to a certain threshold, after which the curve flattens dramatically.

| Upgrade Step | Physical Gain | Perceptual Impact (JOD) |
|---|---|---|
| 500 → 1,000 nits | 2× peak luminance | Large visible improvement |
| 2,000 → 4,000 nits | 2× peak luminance | Marginal improvement |
| 4,000 → 10,000 nits | 2.5× peak luminance | Often barely noticeable |

This plateau is grounded in well-established visual laws. Sensitivity in low luminance regions follows the de Vries–Rose law, where detection thresholds relate to the square root of background luminance. At higher luminance levels, perception aligns more closely with Weber’s law, meaning differences are processed logarithmically. In practical terms, each additional nit contributes less subjective impact than the previous one.
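
The arithmetic behind these two laws can be sketched in a few lines. The Weber fraction (1%) and the de Vries–Rose constant used here are illustrative assumptions, not measured values, and a pure log model treats every doubling as the same size step, so this is meant to show how absolute nit differences get compressed rather than to reproduce the JOD figures.

```python
import math


def stops(l_from: float, l_to: float) -> float:
    """Luminance change expressed in stops (doublings)."""
    return math.log2(l_to / l_from)


def weber_threshold(luminance: float, weber_fraction: float = 0.01) -> float:
    """Smallest detectable change under Weber's law: proportional to luminance.

    The 1% fraction is an assumed, illustrative value.
    """
    return weber_fraction * luminance


def de_vries_rose_threshold(luminance: float, k: float = 0.1) -> float:
    """Smallest detectable change under the de Vries-Rose law: ~ sqrt(luminance).

    The constant k is an assumed, illustrative value.
    """
    return k * math.sqrt(luminance)


# The three upgrade steps from the table: large nit gaps, small log steps.
for a, b in [(500, 1000), (2000, 4000), (4000, 10000)]:
    print(f"{a:>5} -> {b:>6} nits: +{b - a:>5} nits, {stops(a, b):.2f} stops")

# Under Weber's law the just-detectable step grows with the background level,
# so a single extra nit matters far less at 4,000 nits than at 100 nits.
for level in (100, 1000, 4000):
    print(f"at {level:>5} nits a change of ~{weber_threshold(level):.0f} nits is needed")
```

The 4,000 → 10,000 step adds 6,000 nits yet amounts to only about 1.3 stops, while the detection threshold at 4,000 nits is forty times larger than at 100 nits under the assumed Weber fraction.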

Large-scale psychophysical experiments cited in 2026 industry analyses demonstrate that once peak brightness exceeds roughly the 1,000–2,000 nit range—assuming competent black levels—JOD gains shrink to fractions of a point. A flagship display may improve measured luminance by several thousand nits, yet deliver only +0.2 to +0.5 JOD compared with a high-end 2024 model. For enthusiasts expecting dramatic visual shock, this feels like stagnation, even though engineering progress is substantial.

There is also a negative interaction effect. When peak luminance rises without proportional improvement in black level or local dimming precision, perceived quality can decline. Elevated blacks, halo artifacts, or tone-mapping compression reduce contrast integrity. Psychophysical data confirm that contrast consistency often contributes more to perceived HDR quality than extreme peak brightness.

Another dimension is visual fatigue. In near-eye or close-viewing environments, such as desktop monitors or VR displays, very high luminance spikes can increase discomfort and adaptation strain. Some 2026 findings indicate that beyond certain brightness thresholds, subjective satisfaction plateaus while fatigue metrics rise. This creates a trade-off: more measurable dynamic range does not automatically translate into better user experience.

The plateau effect therefore reframes the HDR race. Once a display reaches perceptual sufficiency—strong highlights, stable blacks, and credible midtones—further brightness escalation operates mostly as headroom for rare specular highlights rather than as a constant upgrade. The human visual system effectively compresses these gains, smoothing technological leaps into subtle refinements.

For gadget enthusiasts, the takeaway is both surprising and liberating. The ceiling you are sensing is not marketing exaggeration or imagination. It is rooted in measurable psychophysical limits. HDR saturation is a property of the eye as much as of the panel, and understanding this plateau explains why 2026’s most advanced displays can feel visually similar to their already excellent predecessors.

Micro RGB and SQD Mini LED: Reinventing LCD Beyond Brightness Wars

In 2026, the LCD battlefield has clearly shifted. Instead of chasing ever-higher peak brightness numbers, manufacturers are redefining what HDR should feel like. Micro RGB and SQD Mini LED are not about winning a spec sheet war—they are about rebuilding LCD from its optical foundation.

According to multiple CES 2026 reports from CNET and ZDNET, the most discussed LCD prototypes were not merely brighter, but structurally different. By rethinking how light is generated and controlled, these technologies aim to solve the perceptual plateau identified in recent JOD-based research.

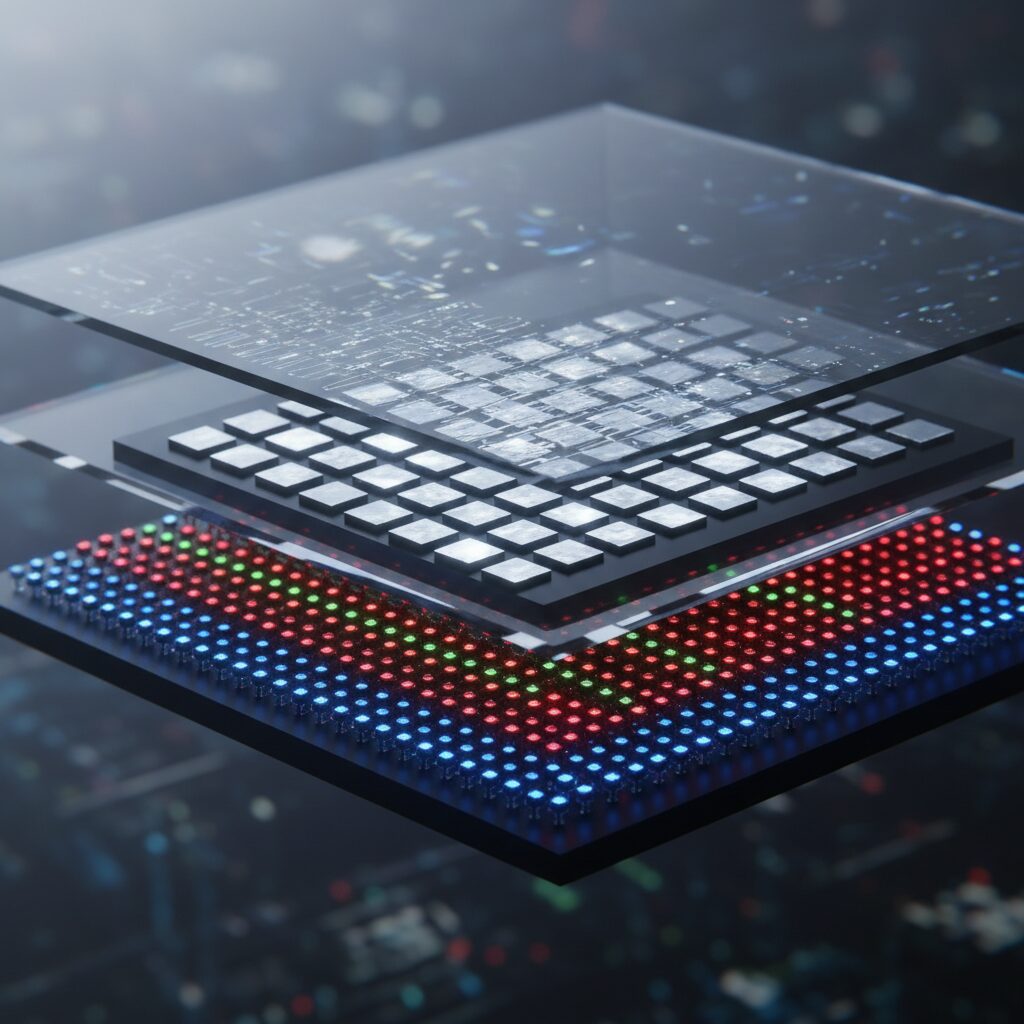

Micro RGB replaces the traditional white LED plus quantum dot filter architecture with independent red, green, and blue micro LEDs in the backlight itself. Because color filters inherently absorb light, eliminating them reduces optical loss and increases efficiency.

This filterless structure enables extremely wide color reproduction—up to full Rec.2020 coverage in announced flagship models—while still reaching peak brightness levels approaching 10,000 nits in controlled highlights. Importantly, this brightness is delivered with higher spectral precision rather than brute-force luminance.

SQD Mini LED, promoted by TCL, tackles a different bottleneck: local dimming granularity. With more than 20,000 dimming zones, it dramatically reduces halo artifacts that have historically limited LCD contrast performance.

| Technology | Core Innovation | Primary Benefit |
|---|---|---|
| Micro RGB | RGB micro LED backlight (filterless) | Higher color purity, improved efficiency |
| SQD Mini LED | 20,000+ local dimming zones | Reduced halo, OLED-like black control |

The impact of these approaches becomes clearer when viewed through perceptual science. As HDR studies using the JOD model suggest, brightness gains above certain thresholds yield diminishing visible improvements. However, improvements in contrast precision and color volume remain perceptually meaningful.

By increasing zone density, SQD systems reduce negative interactions between high peak brightness and elevated black levels—an issue documented in psychophysical experiments. Meanwhile, Micro RGB improves color separation at high luminance, preventing desaturation in specular highlights.

Another crucial factor is energy efficiency. As reported by AV Watch, recent Japanese Mini LED models achieve higher brightness while reducing power consumption significantly. Micro RGB’s filterless efficiency and SQD’s precise dimming both contribute to this balance, aligning with tightening energy regulations.

What makes this shift compelling is that it aligns with content ecosystem constraints. Even if Dolby Vision masters cap highlight output, a display with better color volume and local control can render those highlights with greater fidelity rather than exaggeration.

In practical viewing, this translates into subtler improvements: cleaner highlight roll-off, fewer blooming artifacts in subtitles against dark scenes, and more stable color tones at high brightness levels.

Rather than overwhelming viewers with dramatic luminance spikes, these systems aim to make HDR feel natural and artifact-free. In that sense, Micro RGB and SQD Mini LED are not escalating the brightness war—they are quietly redefining what premium LCD performance actually means.

Tandem OLED’s Comeback: Multi-Stack Efficiency and 3,000-Nit Milestones

Tandem OLED has re-emerged in 2026 not as a nostalgic revival, but as a calculated engineering response to the limits of single-stack OLED brightness. While LCD technologies have pushed headline numbers toward 10,000 nits, OLED manufacturers have focused on multi-stack efficiency to close the luminance gap without sacrificing pixel-level black.

The core idea is simple but profound: instead of driving a single emissive layer harder, Tandem OLED stacks multiple organic emission layers vertically. By distributing the electrical load across up to four layers and adopting updated blue phosphorescent materials, manufacturers significantly improve luminous efficiency and panel longevity.

Multi-stack architecture increases peak brightness beyond 3,000 nits while preserving OLED’s defining advantage: true per-pixel black with no blooming.

According to coverage from CES 2026 by CNET and Greg Benz Photography, flagship models such as LG’s G6 series and ASUS ProArt monitors now exceed 3,000 nits in peak highlights. That represents roughly 1.5 to 2 times the brightness of previous-generation OLED panels, achieved not by brute-force current increases but by structural redesign.

| Parameter | Conventional OLED | Tandem OLED (2026) |
|---|---|---|
| Emission layers | Single stack | Multi-stack (up to 4) |
| Peak brightness | ~1,000–1,500 nits | 3,000+ nits |
| Energy efficiency | Lower at high APL | Improved per nit output |
| Black control | Per-pixel | Per-pixel (unchanged) |

What makes this comeback strategically important is not just brightness. The RGB Stripe Tandem OLED implementations used in ASUS ProArt and ROG Swift monitors address one of OLED’s historical criticisms: text clarity. By maintaining a traditional RGB subpixel layout rather than adopting alternative subpixel geometries, these panels deliver sharper UI rendering alongside HDR punch.

This balance matters in a 2026 landscape defined by perceptual plateau effects. Research discussed in recent HDR studies shows that beyond certain luminance thresholds, gains in perceived quality diminish sharply. In that context, moving from 2,000 to 3,000 nits is less about dramatic visual shock and more about preserving highlight detail under aggressive tone mapping.

The 3,000-nit milestone functions as headroom rather than spectacle. It allows specular highlights—metal reflections, sparks, sunlight glints—to remain unclipped when content is mastered at 1,000 to 4,000 nits under Dolby Vision guidelines. Instead of making the whole image brighter, Tandem OLED ensures bright pixels stay precise while dark pixels remain perfectly off.

Equally important is thermal and longevity performance. By spreading luminance demand across multiple emissive layers, the electrical stress per layer decreases. That reduces differential aging, especially in blue subpixels, historically the weakest link in OLED durability. For professionals using reference monitors for grading, this stability is as critical as peak brightness.

The result is a subtle but meaningful shift. Tandem OLED does not win by outshouting Mini LED on absolute luminance charts. It wins by combining 3,000-nit-class highlights with zero blooming, stable color volume, and refined subpixel structure. In a market where raw brightness numbers are approaching perceptual limits, that multidimensional efficiency is what defines OLED’s comeback.

When Hardware Converges: Why LCD and OLED Now Look More Alike

For years, LCD and OLED were easy to tell apart. LCD meant higher brightness but weaker blacks, while OLED delivered perfect contrast at the cost of peak luminance. In 2026, that clear divide is fading fast, and many enthusiasts now struggle to distinguish them in real-world viewing.

The reason is simple: both technologies have absorbed each other’s strengths. Hardware evolution has pushed them toward perceptual parity, especially within the brightness and contrast ranges where human vision is most sensitive.

How the Gap Narrowed

| Aspect | LCD (2026) | OLED (2026) |
|---|---|---|
| Peak Brightness | Up to 10,000 nits (Micro RGB, Mini LED) | 3,000+ nits (Tandem OLED) |
| Black Level | 20,000+ dimming zones reduce halo | Per-pixel self-emission |
| Color Gamut | Up to 100% Rec.2020 coverage | Wide gamut with improved efficiency |

Samsung and Hisense’s Micro RGB backlights remove traditional color filters, enabling extremely high brightness and wide color coverage, as reported by CNET at CES 2026. Meanwhile, TCL’s SQD Mini LED systems exceed 20,000 local dimming zones, dramatically reducing halo artifacts once typical of LCD.

On the other side, Tandem OLED panels stack multiple emission layers to reach over 3,000 nits while maintaining pixel-level black control. ASUS and LG implementations show that OLED is no longer limited to dim-room performance.

From a perceptual standpoint, this convergence makes sense. Research using the Just-Objectionable-Difference model indicates that improvements beyond certain luminance thresholds yield diminishing visible returns. Moving from 2,000 to 4,000 nits does not feel twice as impactful as moving from 500 to 1,000 nits.

As a result, when both LCD and OLED comfortably exceed 1,000 nits and deliver deep blacks, the human visual system treats them as similarly “high quality.” The plateau effect described in recent HDR perception studies explains why dramatic spec differences translate into subtle experiential differences.

There is also a strategic shift in tuning philosophy. Modern LCDs no longer chase raw brightness alone; they prioritize controlled highlights and mid-tone preservation. OLEDs, in turn, focus on sustaining brightness without clipping texture. Both aim for balanced tone mapping rather than exaggerated contrast.

Even color performance is converging. With claims of up to 95–100% Rec.2020 coverage in advanced LCD systems and highly efficient OLED materials improving spectral purity, wide color is no longer a differentiator but a baseline expectation.

In practical terms, this means that in a living room with mixed lighting, a flagship Mini LED LCD and a flagship Tandem OLED often look strikingly alike during HDR playback. Differences remain in edge cases—extreme highlights, off-axis viewing, or near-black uniformity—but they are far subtler than marketing narratives suggest.

What we are witnessing is not stagnation but maturation. LCD has learned precision; OLED has learned brightness. And for the enthusiast, the question is no longer which technology is categorically superior, but which implementation aligns best with personal viewing habits.

Panasonic’s Auto AI and Ambient Light Intelligence Explained

Panasonic’s latest VIERA models take a fundamentally different approach to HDR optimization. Instead of chasing ever-higher peak brightness numbers, they focus on maintaining perceptual consistency through Auto AI and an advanced ambient light sensor system. In 2026, this philosophy aligns closely with findings from perceptual research showing that beyond certain luminance thresholds, human sensitivity to improvement plateaus.

The goal is not to make the picture look more dramatic, but to make it look consistently correct in any environment. This shift reflects a broader industry movement toward what experts describe as “transparent technology” — systems that quietly optimize without drawing attention to themselves.

How Auto AI Works in Practice

According to Panasonic’s official product releases, Auto AI continuously analyzes both the incoming video signal and the room environment. It does not rely on a single brightness preset. Instead, it dynamically adjusts multiple parameters in real time.

| Input Source | Detected Factor | AI Adjustment |
|---|---|---|
| Room lighting | Brightness level | Shadow detail lift or suppression |
| Room lighting | Color temperature | White balance micro-correction |
| Video signal | Noise vs. texture | Detail enhancement without over-sharpening |

The ambient light sensor goes beyond measuring simple lux levels. It also detects the color temperature of surrounding light. If you are watching in a warm-lit living room at night, the TV subtly shifts white balance to prevent the image from appearing bluish. In bright daylight, it compensates for perceived black lift caused by glare by refining low-level gamma.

This behavior aligns with visual science principles such as the Weber–Fechner law, which suggests our perception of brightness is logarithmic. Rather than increasing peak luminance aggressively, Panasonic optimizes mid-tones and shadow gradation, where perceptual sensitivity remains higher.

Perceptual Stability Over Spectacle

One of the most overlooked features is the 4K Fine Remaster Engine integrated with Auto AI. It distinguishes compression noise from intentional texture, preserving film grain while reducing artifacts. This selective processing prevents the common HDR pitfall of over-processed highlights paired with artificial sharpness.

What users perceive as “no change” is often the result of thousands of micro-adjustments happening per second. When lighting conditions shift during sunset, the image does not suddenly look warmer or dimmer. The AI compensates before you consciously notice the difference.
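
The general pattern of ambient adaptation described above (derive a target from the sensor, then drift toward it slowly enough that no jump is visible) can be sketched as follows. This is a hypothetical illustration only: Panasonic has not published its algorithm, and every name and constant here (`PictureState`, the gamma range 2.0 to 2.4, the 20% white-point shift, the smoothing factor) is an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class PictureState:
    gamma: float = 2.4            # dark-room starting point (assumed)
    white_point_k: float = 6500.0  # D65 starting point


def target_for_room(lux: float, room_cct_k: float) -> PictureState:
    """Derive picture targets from ambient light readings (all constants assumed)."""
    # Use a log scale for room brightness: 1 lux -> 0.0, 1,000 lux or more -> 1.0.
    brightness = min(max(math.log10(max(lux, 1.0)) / 3.0, 0.0), 1.0)
    # A brighter room gets a lower gamma, which lifts shadow detail washed out by glare.
    gamma = 2.4 - 0.4 * brightness
    # Move the white point only part of the way toward the room's color temperature.
    white = 6500.0 + 0.2 * (room_cct_k - 6500.0)
    return PictureState(gamma, white)


def step(current: PictureState, target: PictureState, alpha: float = 0.05) -> PictureState:
    """One smoothing step: move a small fraction of the way toward the target."""
    return PictureState(
        current.gamma + alpha * (target.gamma - current.gamma),
        current.white_point_k + alpha * (target.white_point_k - current.white_point_k),
    )


# Simulated sunset: daylight fades into warm lamp light over many small updates.
state = PictureState(gamma=2.0, white_point_k=6500.0)
evening = target_for_room(lux=30.0, room_cct_k=2700.0)
for _ in range(60):
    state = step(state, evening)
print(f"gamma {state.gamma:.2f}, white point {state.white_point_k:.0f} K")
```

Because each update moves only 5% of the remaining distance, no single step produces a visible change, which is the behavior the paragraph attributes to the TV.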

This approach contrasts sharply with earlier HDR generations, where switching between SDR and HDR or changing room lighting could produce obvious tonal jumps. Panasonic’s system minimizes those discontinuities, prioritizing continuity of creative intent over visual shock value.

For gadget enthusiasts expecting dramatic brightness surges, this subtlety may initially feel underwhelming. However, in long-term viewing scenarios, especially in mixed lighting conditions typical of Japanese living spaces, this stability reduces eye strain and maintains color fidelity.

In a market where peak luminance can reach several thousand nits, Panasonic’s strategy demonstrates that intelligent environmental adaptation may deliver greater real-world benefit than raw brightness escalation. The innovation lies not in making HDR louder, but in making it invisible.

Sharp’s Spatial Recognition AI: Depth as the New Dynamic Range

Sharp’s 2026 AQUOS lineup introduces what it calls Spatial Recognition AI, powered by the Medalist S6X engine. Rather than chasing ever-higher peak nits, this approach analyzes the spatial structure of each frame and redistributes processing power based on perceived distance. In other words, Sharp treats depth as a new form of dynamic range.

Conventional HDR expands luminance from dark to bright. Spatial Recognition AI expands perceptual contrast from near to far. By identifying foreground subjects, mid-ground elements, and background layers, the processor selectively enhances texture, micro-contrast, and edge definition where the viewer’s attention naturally converges.

| Processing Focus | Traditional HDR | Spatial Recognition AI |
|---|---|---|
| Primary Variable | Luminance (nits) | Perceived depth layers |
| Enhancement Target | Highlights & shadows | Foreground separation |
| Viewer Impact | Brightness contrast | Three-dimensional realism |

For example, when a person stands against a cityscape at dusk, the AI detects the subject as spatially closer and subtly increases local contrast and clarity on facial contours and clothing texture. The distant skyline, by contrast, retains a softer gradation to preserve atmospheric perspective. This selective emphasis mimics how human vision prioritizes near-field detail.
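
A toy version of depth-weighted enhancement makes the principle concrete. It is not Sharp's algorithm, which is not public: the function name, the use of a single global mean as the contrast pivot, and the `strength` value are all assumptions, and a real processor would work on local neighborhoods of a full image rather than a flat list.

```python
def depth_weighted_contrast(values, depths, strength=0.3):
    """Boost contrast more for near pixels than for far ones.

    values: normalized pixel luminances in [0, 1]
    depths: estimated depth per pixel, 0.0 = nearest, 1.0 = farthest
    strength: extra contrast gain applied at depth 0 (assumed value)
    """
    mean = sum(values) / len(values)  # crude pivot; real systems use local means
    out = []
    for value, depth in zip(values, depths):
        gain = 1.0 + strength * (1.0 - depth)  # 1.3 for foreground, 1.0 for background
        enhanced = mean + gain * (value - mean)
        out.append(min(1.0, max(0.0, enhanced)))  # keep the result in range
    return out


# Two foreground pixels (depth 0) and two background pixels (depth 1)
# that start with identical contrast.
pixels = [0.2, 0.8, 0.2, 0.8]
depth_map = [0.0, 0.0, 1.0, 1.0]
print(depth_weighted_contrast(pixels, depth_map))
```

The foreground pair is pushed further apart while the background pair is left as it was, so separation comes from where contrast is applied rather than from any increase in peak brightness.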

Research in visual perception, including models referenced in recent HDR studies using JOD metrics, suggests that once peak brightness surpasses certain thresholds, additional luminance yields diminishing perceptual gains. Sharp’s strategy shifts the optimization axis from absolute brightness to structural realism. Instead of adding more light, it adds more spatial coherence.

According to AV Watch’s coverage of Sharp’s latest quantum dot × Mini LED AQUOS models, the company achieved up to 1.5× brightness while reducing power consumption by 32 percent. That efficiency headroom enables more granular local processing without exceeding energy constraints. The benefit is not a flashier highlight, but a cleaner separation between objects.

This matters particularly in high-APL scenes where aggressive brightness boosts can flatten textures. By preserving background subtlety and enhancing only the perceptually dominant plane, Spatial Recognition AI avoids the halo artifacts and visual fatigue sometimes associated with brute-force HDR expansion.

The result feels less like an effect and more like a refinement. Viewers may not consciously notice a surge in brightness, yet they perceive stronger presence, clearer subject isolation, and a more convincing sense of air between objects. In an era where 10,000-nit specifications risk perceptual saturation, Sharp reframes innovation around how space itself is rendered.

By reconstructing depth relationships rather than amplifying luminance alone, Spatial Recognition AI aligns display engineering with how our visual system interprets real-world scenes. It is a quieter evolution, but one that directly addresses the plateau of traditional HDR impact.

Windows 11, Auto HDR, and the OS-Level Bottleneck

Even if your display reaches 3,000 or 10,000 nits, the actual HDR experience on a PC is often constrained by the operating system layer. In 2026, Windows 11 remains the dominant gaming and content platform, yet it frequently acts as an invisible bottleneck between advanced panels and real-world perception.

The issue is not raw GPU power or panel capability. It is how the OS interprets, converts, and delivers HDR signals across mixed SDR and HDR environments. When that pipeline is inconsistent, the result is not spectacular brightness but subtle degradation.

In many PC setups, the limiting factor is no longer the display hardware, but Windows 11’s HDR signal management and tone-mapping behavior.

One widely reported problem is that enabling HDR in Windows 11 can make the entire desktop appear dim or washed out. According to user reports aggregated in Windows support communities, brightness shifts and gamma inconsistencies occur when HDR is toggled on, especially on monitors that already perform internal tone mapping.

This becomes more complex with Auto HDR. Designed to convert SDR games into HDR output, Auto HDR estimates highlight data that does not exist in the source. In theory, this expands dynamic range. In practice, it can conflict with the monitor’s own processing.

| Feature | Intended Role | Common Side Effect |
|---|---|---|
| Windows HDR Toggle | Enable system-wide HDR output | Desktop dimming or color shift |
| Auto HDR | Convert SDR games to HDR | Highlight clipping or flat midtones |
| Monitor Tone Mapping | Map HDR to panel capability | Double processing with OS |

The core bottleneck lies in metadata handling. If Windows misreads or incompletely communicates peak brightness and EOTF characteristics, the tone curve may compress highlights unnecessarily. As Netflix’s Dolby Vision mastering guidelines emphasize, HDR relies on strict adherence to metadata and PQ transfer functions. When the OS layer introduces ambiguity, precision is lost.

Another structural issue is mixed SDR/HDR composition. Windows 11 renders much of its UI in SDR even when HDR is enabled. The OS must continuously map SDR content into an HDR container, which can lower perceived contrast or alter midtone balance. On high-end tandem OLED or Mini LED displays, this flattening effect is particularly noticeable because the hardware headroom is not fully utilized.
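
To see why SDR content inside an HDR container uses so little of a bright panel, consider a simplified sketch of the mapping. The sRGB decoding formula is the standard one; the 200-nit SDR white level and the 4,000-nit panel peak are assumptions for illustration, and this does not claim to reproduce the Windows compositor's actual pipeline.

```python
def srgb_to_linear(code: float) -> float:
    """Standard sRGB decoding: nonlinear code value (0..1) to linear light (0..1)."""
    if code <= 0.04045:
        return code / 12.92
    return ((code + 0.055) / 1.055) ** 2.4


def sdr_to_nits(code: float, sdr_white_nits: float = 200.0) -> float:
    """Place an SDR pixel inside an HDR container.

    sdr_white_nits is the luminance assigned to SDR reference white;
    200 nits is an assumed, user-adjustable value.
    """
    return srgb_to_linear(code) * sdr_white_nits


panel_peak_nits = 4000.0  # hypothetical display
for code in (0.0, 0.5, 1.0):
    print(f"sRGB {code:.1f} -> {sdr_to_nits(code):6.1f} nits")

used = sdr_to_nits(1.0) / panel_peak_nits
print(f"SDR white uses {used:.0%} of the panel's linear luminance range")
```

With these assumed numbers, the brightest SDR UI element sits at 200 nits, so the desktop occupies a small slice of what the panel can do, and any error in where that slice is placed shows up as the dim or flat look users report.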

The paradox is clear: as panels become more accurate, OS-level inconsistencies become more visible. A 1,000-nit display may mask tone-mapping errors. A 4,000-nit or 10,000-nit display exposes them.

For enthusiasts, the practical implication is nuanced. Disabling Auto HDR for color-critical work, keeping monitor firmware and GPU drivers up to date, and calibrating with Microsoft’s Windows HDR Calibration app can reduce conflicts. However, these steps highlight the systemic issue: HDR on PC still requires manual intervention.

In a mature HDR ecosystem where creative intent and perceptual consistency matter more than raw brightness, the operating system becomes a gatekeeper. Until Windows-level HDR handling achieves the same determinism as dedicated video playback pipelines, even the most advanced 2026 display may feel constrained—not by physics, but by software.

Netflix, Dolby Vision, and the Power of Creative Intent Metadata

When discussing HDR in 2026, Netflix and Dolby Vision stand at the center of the ecosystem. While hardware has reached 3,000 to 10,000 nits in flagship displays, Netflix’s priority is not maximum brightness but faithful reproduction of creative intent. That philosophy is embedded directly into Dolby Vision’s dynamic metadata workflow.

According to Netflix’s Dolby Vision HDR Mastering Guidelines, original productions are graded in controlled environments using the P3-D65 color space and the PQ (SMPTE ST 2084) transfer function. Mastering is typically performed on reference monitors capable of 1,000 to 4,000 nits. This reference range defines the creative ceiling of most content that viewers actually stream.

Dolby Vision differs from static HDR formats by attaching scene-by-scene or even frame-by-frame metadata. This metadata communicates how luminance and color should be mapped to the capabilities of the target display. Rather than letting the television guess, the content itself carries instructions aligned with the colorist’s grading decisions.

| Element | Function | Impact on Viewing |
|---|---|---|
| Reference Mastering | 1,000–4,000 nits grading environment | Defines artistic highlight limits |
| Dynamic Metadata | Scene-based luminance instructions | Prevents over-bright rendering |
| Tone Mapping | Display adaptation process | Maintains detail and color balance |

This structure explains why many enthusiasts report that newer ultra-bright TVs do not dramatically change the Netflix experience. The display is not underperforming; it is obeying metadata constraints. Highlights such as explosions or sunlight reflections are allowed to peak, but midtones and skin tones remain carefully controlled to avoid distortion.

Autodesk’s professional documentation on Dolby Vision workflows emphasizes that metadata is designed to maintain consistency across devices. A filmmaker grading on a calibrated mastering monitor expects similar emotional tonality whether the audience watches on a high-end Mini LED TV or a midrange OLED. Consistency, not spectacle, is the goal.

In this context, HDR becomes less about hardware extremes and more about interpretive accuracy. Netflix’s integration of Dolby Vision 4.0 continues to refine this pipeline, ensuring that dynamic range is allocated intentionally rather than explosively. Brightness becomes a narrative tool, not a marketing number.

For gadget enthusiasts chasing peak luminance, this can feel limiting. However, from a production standpoint, it represents maturity. The display’s job is no longer to impress independently, but to act as a transparent window into the creator’s graded vision. That transparency is precisely why HDR today may feel stable, even when the underlying hardware has advanced dramatically.

Tone Mapping in the 10,000-Nit Era: Protecting Midtones Over Highlights

In the 10,000-nit era, tone mapping is no longer about chasing brighter highlights. It is about protecting the integrity of midtones. As peak luminance climbs into five-digit territory, the real battleground shifts to how faithfully a display preserves faces, textures, and ambient light that occupy the center of the luminance range.

According to recent perceptual research using the Just-Objectionable-Difference model, improvements in peak brightness beyond a certain threshold yield diminishing visible gains. What viewers consistently notice instead is instability in midtones. If skin tones shift, shadows flatten, or subtle gradients collapse, the entire HDR experience feels artificial, regardless of how bright the specular highlights appear.

Why Midtones Matter More Than Ever

| Luminance Region | Typical Content | Perceptual Sensitivity |
|---|---|---|
| Highlights (1,000–10,000 nits) | Reflections, sparks, sun glints | Low duration, localized impact |
| Midtones (100–400 nits) | Faces, interiors, landscapes | High sensitivity, constant exposure |
| Shadows (<10 nits) | Night scenes, depth cues | Detail critical for realism |

Most cinematic content, including Dolby Vision masters referenced in Netflix’s production guidelines, is graded within 1,000 to 4,000 nits. Even when displayed on a 10,000-nit panel, the majority of narrative information sits in the midrange. Over-compressing this zone to “make room” for extreme peaks leads to washed-out skin tones and loss of material texture.

Modern tone-mapping engines therefore prioritize curve precision over brute-force expansion. Instead of globally lowering average picture level to avoid clipping, advanced processors apply high-bit-depth calculations to isolate highlight roll-off while anchoring midtone gamma. The goal is not maximum brightness, but maximum perceptual coherence.
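The idea of anchoring midtones while rolling off highlights can be sketched with a simple knee curve. This is an illustrative toy that works directly in nits, not any manufacturer's algorithm. Production tone mappers usually operate in a perceptual domain such as PQ, and the `knee_fraction` parameter and the rational roll-off are assumptions chosen for clarity.

```python
def roll_off(nits: float, src_peak: float, dst_peak: float,
             knee_fraction: float = 0.5) -> float:
    """Map source luminance (nits) onto a display with a lower peak.

    Below the knee the value passes through unchanged, so midtones stay
    anchored. Above it, a rational curve with slope 1 at the knee
    compresses [knee, src_peak] into [knee, dst_peak].
    knee_fraction must lie strictly between 0 and 1.
    """
    nits = min(max(nits, 0.0), src_peak)
    if src_peak <= dst_peak:
        return nits  # the display already covers the mastered range
    knee = knee_fraction * dst_peak
    if nits <= knee:
        return nits
    x = nits - knee
    in_span = src_peak - knee    # input range above the knee
    out_span = dst_peak - knee   # output range above the knee
    a = 1.0 / out_span - 1.0 / in_span
    return knee + x / (1.0 + a * x)


if __name__ == "__main__":
    # A 4,000-nit master shown on a 1,000-nit display.
    for level in (100, 300, 1000, 2000, 4000):
        print(f"{level:>5} nits in -> {roll_off(level, 4000, 1000):7.1f} nits out")
```

With these example numbers, everything up to 500 nits is reproduced exactly and only the range above it is compressed. That is the trade described above: highlights give up some intensity so that faces and interiors keep their contrast.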

This shift aligns with the Weber-Fechner law, under which perceived brightness grows roughly with the logarithm of luminance rather than in proportion to it. Doubling highlight luminance does not double perceived impact. However, a small deviation in midtone contrast is immediately noticeable because our visual system is continuously adapted to that range.
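One way to put numbers on the logarithmic relationship described above is to use the PQ curve as a stand-in for perceptual steps, since it was designed to approximate perceptually uniform quantization. This is a simplification and is not the JOD model itself; the helper name is illustrative.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_signal(nits: float) -> float:
    """Normalized PQ signal (0..1) for an absolute luminance in nits."""
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def doubling_step(nits: float) -> float:
    """Share of the PQ signal range consumed by doubling from `nits`."""
    return pq_signal(2.0 * nits) - pq_signal(nits)


if __name__ == "__main__":
    for start in (100, 1000, 5000):
        print(f"{start} -> {2 * start} nits: {doubling_step(start):.3f} of signal range")
    above_1000 = pq_signal(10000) - pq_signal(1000)
    print(f"1,000 -> 10,000 nits spans {above_1000:.3f} of signal range")
```

Under this proxy, each doubling of luminance adds a similar, small step in signal, and the entire span from 1,000 to 10,000 nits accounts for roughly a quarter of the PQ range. A tenfold increase in peak brightness therefore corresponds to a far smaller increase in perceptual terms.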

Manufacturers have responded by refining dynamic metadata interpretation. Rather than simply obeying static HDR10 peak metadata, newer algorithms analyze scene structure frame by frame, preserving midtone separation even when aggressive highlight compression is required. In practical terms, a candle flame may lose a few hundred nits of theoretical intensity, but the surrounding room retains dimensional depth and natural color balance.

There is also a fatigue factor. Studies cited in HDR perceptual evaluations indicate that excessive contrast expansion in midtones increases visual strain during long viewing sessions. By stabilizing this region, displays achieve a more “transparent” presentation—where the technology recedes and the image feels consistent across diverse lighting environments.

In professional grading environments such as those supported by Dolby Vision workflows in tools like Autodesk Flame, creative intent is carefully balanced around midtone storytelling. If consumer displays distort that balance in pursuit of highlight spectacle, the narrative tone shifts. Protecting midtones is therefore not a compromise; it is a fidelity strategy.

The irony of the 10,000-nit era is clear. As hardware headroom expands dramatically, the most sophisticated tone mapping decisions often involve restraint. By preserving midtone stability and allowing highlights to roll off gracefully, modern HDR systems deliver images that feel richer, more natural, and more aligned with human perception than any raw luminance specification could promise.

Energy Regulations and Real-World Brightness Constraints

As peak brightness figures climb into the 3,000 to 10,000‑nit range, energy regulations and real-world usage constraints increasingly shape what users actually see. In many regions, including Japan and the EU, televisions must meet annual energy consumption targets tied to standardized viewing conditions. This means that catalog peak brightness and sustained on-screen brightness are fundamentally different metrics.

Sharp’s latest mini LED AQUOS models, for example, are promoted as delivering up to 1.5 times higher brightness while still achieving 100% of Japan’s energy efficiency target standards, according to AV Watch and the company’s official releases. That balance is achieved not by ignoring regulation, but by tightly controlling average picture level and limiting how long extreme luminance can be sustained.

| Metric | Spec Sheet Value | Typical Real-World Behavior |
|---|---|---|
| Peak Brightness | 3,000–10,000 nits | Short bursts in small highlight areas |
| Average Picture Level (APL) | Not emphasized | Actively limited to reduce power draw |
| Energy Rating | Meets 100% standard | Requires dynamic brightness management |

Under energy compliance testing, televisions are evaluated using standardized video sequences with defined luminance distributions. To pass these tests, manufacturers optimize tone mapping and backlight algorithms so that full-screen brightness remains moderate. As a result, even a 10,000‑nit panel rarely outputs extreme luminance across large areas of the screen.

This constraint becomes especially visible in high APL scenes such as snow landscapes or bright sports broadcasts. Instead of maintaining headline-level luminance, the display gradually reduces output to stay within thermal and electrical envelopes. What the user perceives is stability rather than explosive brightness.

Thermal design also plays a decisive role. Sustained multi-thousand-nit output dramatically increases heat generation, particularly in tandem OLED and dense mini LED backlights. To prevent panel degradation and ensure long-term reliability, firmware imposes temporal limits on highlight intensity. The brighter the advertised peak, the shorter its allowable duration in many cases.
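The gap between catalog peak and sustained output can be sketched with a toy power-budget model: the panel is assumed to sustain a fixed full-screen luminance, and smaller bright areas may go higher until they hit the advertised peak. All numbers and names here are hypothetical, and real limiters also depend on time, temperature, and content.

```python
def sustained_nits(window_fraction: float,
                   peak_nits: float = 4000.0,
                   full_screen_nits: float = 600.0) -> float:
    """Estimate sustainable luminance for a bright area of a given size.

    window_fraction: share of the screen that is bright (0 < f <= 1).
    peak_nits and full_screen_nits are hypothetical spec values.
    The model assumes power scales with luminance times lit area.
    """
    if not 0.0 < window_fraction <= 1.0:
        raise ValueError("window_fraction must be in (0, 1]")
    return min(peak_nits, full_screen_nits / window_fraction)


if __name__ == "__main__":
    for fraction in (0.02, 0.10, 0.25, 0.50, 1.00):
        print(f"{fraction:>5.0%} window -> {sustained_nits(fraction):6.0f} nits")
```

In this model the headline figure is reachable only while the bright region stays small. A snow field or a bright sports broadcast fills most of the screen, so the same panel settles near its full-screen limit.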

Netflix’s Dolby Vision mastering guidelines further reinforce this ceiling effect. Even if hardware can reach extreme luminance, dynamic metadata may instruct the TV to map highlights within 1,000 to 4,000 nits depending on creative intent. The combination of regulatory energy limits and content-level constraints means the theoretical maximum often remains unused.

In practical living rooms, ambient light conditions add another layer. Japanese households, for instance, commonly use moderate indoor lighting rather than showroom-level brightness. Excessive peak luminance in such environments can cause glare and visual fatigue, reducing perceived comfort. Manufacturers therefore tune factory defaults conservatively to align with real domestic conditions.

The outcome is a subtle but important shift: brightness is treated as headroom rather than a constant output target. Energy regulation, thermal physics, and content metadata converge to ensure that extreme luminance appears only when it meaningfully enhances realism—such as specular reflections or sparks—rather than dominating entire frames.

For gadget enthusiasts scanning spec sheets, this can feel like underutilized potential. Yet from an engineering and regulatory standpoint, it represents a mature equilibrium. Displays are no longer designed to impress in a five-minute showroom demo alone; they are optimized to deliver consistent HDR performance over years of compliant, energy-conscious operation.

From Spectacle to Infrastructure: The Rise of Invisible Display Technology

In 2026, HDR is no longer a spectacle designed to impress at first glance. It has quietly transitioned into infrastructure, a foundational layer that supports visual consistency rather than dramatic effect. While peak brightness figures now reach 3,000 to 10,000 nits in flagship models, many users report that “nothing seems different.” This is not stagnation. It is maturity.

The shift can be understood by comparing physical performance gains with perceptual outcomes measured through the Just-Objectionable-Difference (JOD) model.

| Metric | 2024 Class | 2026 Class | Perceptual Impact |
|---|---|---|---|
| Peak Brightness | 1,000–1,500 nits | 3,000–10,000 nits | Marginal JOD gain |
| Rec.2020 Coverage | ~80% | 95–100% | Incremental realism |
| Environment Adaptation | Manual settings | AI auto-optimization | Perceived stability |

Psychophysical research published in 2025–2026 shows that once brightness surpasses a certain threshold, perceptual improvement plateaus. According to studies applying the JOD framework, doubling luminance in high ranges produces only fractional gains in visible differentiation. In other words, engineering progress continues, but human perception has reached diminishing returns.

This plateau has redirected innovation toward invisibility. Panasonic’s Auto AI systems, for example, integrate ambient light and color temperature sensing to dynamically adjust tone and white balance. Rather than making the image more dramatic, the system prevents environmental shifts from degrading perceived quality. The result is consistency across daytime and nighttime viewing, which users interpret as “no change.”

Sharp’s spatial recognition AI takes a similar infrastructural approach. By identifying foreground and background relationships, it enhances depth selectively instead of boosting global brightness. The HDR range is allocated intelligently within the scene structure, not as a blanket amplification. This preserves texture and realism over spectacle.

Content ecosystems reinforce this invisibility. Netflix’s Dolby Vision mastering guidelines emphasize strict adherence to creative intent, typically within 1,000–4,000 nit grading environments. Even if a television can output 10,000 nits, dynamic metadata constrains rendering to what the master specifies. As Netflix’s partner documentation explains, display devices must follow scene-level instructions embedded in Dolby Vision streams. Hardware headroom becomes insurance, not fireworks.

Operating systems add another stabilizing layer. Although the Windows 11 HDR implementation still faces inconsistencies, the broader goal of system-level tone mapping is uniformity across applications. The ambition is seamlessness, not exaggeration.

Energy regulation also contributes to this infrastructural turn. Sharp’s latest Mini LED models reportedly achieve higher peak brightness while meeting 100% of updated energy efficiency standards. This is accomplished by limiting peak luminance duration and managing average picture level. The headline number exists, but everyday luminance remains moderated for stability and compliance.

The industry has therefore crossed a psychological threshold. Early HDR astonished viewers with explosive contrast. Today’s HDR minimizes friction, prevents clipping, guards midtones, and harmonizes with ambient light. The ultimate success is when users stop thinking about HDR altogether.

From spectacle to infrastructure, invisible display technology represents a system-level convergence of hardware headroom, perceptual science, AI calibration, and content standardization. What appears unchanged is in fact continuously optimized beneath the surface, maintaining a natural visual baseline that feels effortless and, precisely because of that, ordinary.

References

- CNET: CES 2026 Showcases the Future of TVs. Learn Which Display Tech Will Make the Biggest Splash

- ZDNET: We saw dozens of TVs at CES 2026: Keep these 5 models on your radar this year

- Mashable: CES 2026: The coolest TVs are from Samsung, LG, and TCL

- Panasonic Newsroom Global: Launch of five 4K LCD VIERA models in two series (AI technology automatically adjusts to optimal picture and sound quality)

- AV Watch: Sharp's quantum dot × mini LED AQUOS with proprietary panel: 1.5x brightness and 32% lower power consumption

- Netflix Partner Help Center: Dolby Vision HDR Mastering Guidelines

- Reddit: HDR setting in Windows 11 keeps changing monitor brightness