If you thought the megapixel race defined smartphone cameras, 2026 proves that the real battle happens at the sensor level. Flagship devices now compete not just on resolution, but on physics—sensor size, pixel architecture, and dynamic range measured in stops rather than marketing slogans.

From 1-inch sensors in devices like the Xiaomi 17 Ultra to stacked transistor designs in the iPhone 17 Pro Max and Xperia 1 VII, manufacturers are pushing against the physical limits of thin smartphone bodies. At the same time, LOFIC technology is emerging as a game-changing innovation, enabling up to 17–20 stops of dynamic range in a single exposure and reshaping HDR photography.

In this article, you will discover how leading brands such as Xiaomi, Apple, Samsung, Sony, and Sharp are approaching sensor design in radically different ways. By comparing real specifications, DxOMark rankings, optical engineering choices, and semiconductor strategies, you will gain a clear understanding of where mobile imaging stands in 2026—and where it is heading next.

- Why 2026 Is a Turning Point for Smartphone Camera Sensors

- The Physics of Sensor Size: Signal-to-Noise Ratio, Pixel Pitch, and Full Well Capacity

- 1-Inch Sensors Go Mainstream: Benefits, Trade-Offs, and Design Constraints

- LOFIC Explained: How Lateral Overflow Integration Capacitors Redefine HDR

- Dynamic Range in Numbers: 17 to 20 Stops and What It Means in Real-World Shooting

- Flagship Sensor Comparison 2026: Xiaomi, Apple, Samsung, Sony, Vivo, and Sharp

- Xiaomi 17 Ultra: 1-Inch Sensor, Leica Optics, and Continuous Optical Zoom

- Apple iPhone 17 Pro Max: Stacked Sensors, A19 Pro, and Video-Centric Optimization

- Samsung Galaxy S26 Ultra: 200MP Strategy, 0.5–0.6μm Pixels, and AI Compensation

- Sony Xperia 1 VII and Sharp AQUOS R10: Pro Workflows and Spectral Accuracy

- DxOMark Rankings and Market Data: Who Leads the 2026 Camera Race?

- Supply Chain Shifts: OmniVision, Sony, and the Diversification of 1-Inch Sensors

- The Rise of In-House Sensor Development and ISP Co-Design

- From Automotive to Smartphones: How LOFIC and HDR Tech Cross Industries

- What to Look for in 2026: Choosing a Smartphone by Sensor Technology, Not Just Megapixels

- References

Why 2026 Is a Turning Point for Smartphone Camera Sensors

In 2026, smartphone camera sensors reach a structural inflection point where incremental upgrades no longer define progress, and fundamental architectural shifts do. For over a decade, the industry chased megapixels, then sensor size. Now, both strategies approach physical and economic ceilings. What makes 2026 different is not a single device launch, but the convergence of larger sensors, stacked transistor designs, and the rapid commercialization of LOFIC technology.

According to DXOMARK’s published testing methodology, image quality gains in recent years correlate more strongly with dynamic range and signal-to-noise ratio than with raw resolution. This reflects a broader industry realization: capturing more usable light matters more than counting more pixels. As smartphone bodies remain thin, manufacturers are forced to innovate within strict spatial constraints.

The physics is straightforward. Larger pixels collect more photons, increasing signal electrons relative to read and dark noise. However, expanding sensor size increases lens stack thickness and module depth. By 2026, most flagship devices cluster between 1/1.3-inch and 1-inch formats, indicating that the industry is nearing a practical size boundary.

| Device (2026) | Main Sensor Size | Notable Sensor Tech |
|---|---|---|
| Xiaomi 17 Ultra | 1.0-inch | LOFIC, Light Hunter 1050L |
| iPhone 17 Pro Max | 1/1.28-inch | 2-layer stacked transistor |
| Galaxy S26 Ultra | 1/1.3-inch | 200MP ISOCELL (0.6μm) |
| Xperia 1 VII | 1/1.35-inch | Exmor T stacked design |

This clustering around similar physical dimensions signals maturity. The competitive battlefield shifts from “how big” to “how efficiently.” Sony’s stacked Exmor T architecture separates photodiode and transistor layers, improving light capture efficiency without increasing footprint. Samsung, as reported by TechInsights, pushes pixel miniaturization down to 0.5μm in certain sensors, relying heavily on computational reconstruction to compensate.

Yet the most disruptive element in 2026 is LOFIC, or Lateral Overflow Integration Capacitor. Long established in automotive sensors, where it helps manage extreme-contrast scenes and LED flicker, LOFIC adds a lateral capacitor within each pixel to temporarily store overflow charge. Instead of clipping highlights when a photodiode saturates, the sensor redirects and preserves the excess electrons.

LOFIC fundamentally expands Full Well Capacity without enlarging pixel size, enabling 100–120dB of dynamic range in a single exposure.

This is not a marginal improvement. RedShark News reports that upcoming mobile sensors promise up to 17–20 stops of dynamic range, approaching professional cinema camera territory. The practical implication is profound: fewer multi-frame HDR composites and significantly reduced ghosting in motion scenes. For users shooting concerts, night streets, or backlit portraits, highlight retention and shadow detail improve simultaneously.

Market data reinforces this shift. As of January 2026, DXOMARK rankings show top-performing smartphones overwhelmingly adopting large sensors combined with advanced dynamic range solutions. The Huawei Pura 80 Ultra leads with a score of 175, integrating a large-format sensor and a variable aperture. Vivo and Oppo follow closely with LOFIC-equipped models. Hardware investment, not just AI tuning, drives these results.

Another reason 2026 becomes pivotal is supply chain diversification. Historically, Sony dominated premium 1-inch-class sensors. Now OmniVision and other players enter aggressively. The Xiaomi 17 Ultra’s adoption of an OmniVision 1-inch LOFIC sensor signals a structural change: flagship imaging no longer depends on a single supplier. Increased competition accelerates innovation cycles and may reduce component costs in the medium term.

At the same time, vertical integration intensifies. Reports indicate Apple is developing its own LOFIC-based prototype targeting 20 stops of dynamic range, potentially reducing long-term reliance on third-party suppliers. Google and Samsung similarly invest in sensor-ISP co-optimization. In 2026, the sensor is no longer a modular commodity but a strategically customized silicon asset.

Crucially, computational photography does not disappear; it becomes symbiotic. AI-driven segmentation, remosaicing, and zoom enhancement still matter. However, as industry commentary from GSMArena notes, computational gains plateau when input data lacks headroom. LOFIC and stacked designs increase that headroom. Better physics feeds better algorithms.

We also see the influence of automotive imaging. High dynamic range capture under flickering LED lighting—once a car industry problem—now improves smartphone video in urban night environments. This cross-industry technology transfer highlights how 2026 represents not just incremental refinement, but convergence of sectors.

In strategic terms, 2026 marks the end of the “megapixel war” as a marketing centerpiece. Although 200MP sensors remain visible, resolution alone no longer dominates keynote presentations. Instead, manufacturers emphasize dynamic range, highlight roll-off, real-time HDR video, and consistent cross-lens color science. The narrative changes from quantity to quality.

For enthusiasts and power users, this shift means purchasing decisions must account for sensor architecture rather than headline numbers. A 1-inch sensor with LOFIC behaves differently from a smaller high-resolution sensor relying purely on multi-frame fusion. Real-world performance—especially in challenging lighting—diverges more clearly than spec sheets suggest.

Ultimately, 2026 stands as a turning point because the industry collectively acknowledges its physical limits and responds with architectural reinvention. Sensor size approaches its practical maximum within smartphone form factors. In response, manufacturers redesign pixel structures, expand full well capacity, and integrate capacitor-based overflow management. The evolution moves from surface-level metrics to deep silicon engineering.

That structural transition reshapes competition, supply chains, and user expectations simultaneously. When physics, semiconductor architecture, and computational imaging converge at once, the result is not just a better camera phone—it is a new baseline for what mobile imaging can achieve.

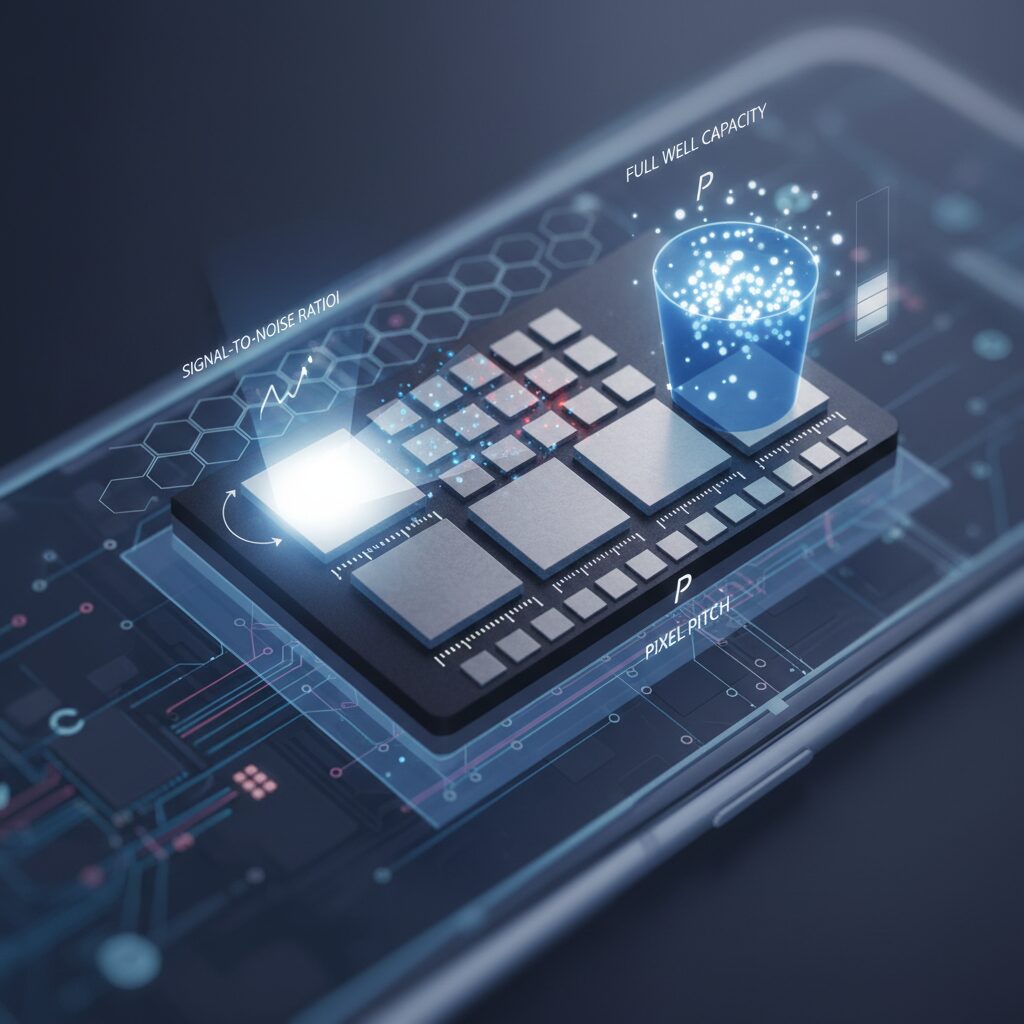

The Physics of Sensor Size: Signal-to-Noise Ratio, Pixel Pitch, and Full Well Capacity

At the heart of every camera sensor lies a simple physical reality: image quality is governed by how many photons each pixel can collect and how cleanly that signal can be read out. In smartphones, where space is brutally limited, understanding signal-to-noise ratio (SNR), pixel pitch, and full well capacity (FWC) is essential to understanding why sensor size still matters in 2026.

Signal-to-noise ratio can be simplified as the ratio between captured signal electrons and total noise, including dark current and read noise. As described in imaging literature and reflected in DXOMARK’s sensor testing methodology, increasing the number of collected photons directly improves SNR. Larger sensors enable larger pixels, which in turn increase the number of signal electrons before noise dominates.
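As a minimal sketch of that relationship, the snippet below assumes a common textbook noise model: Poisson shot noise on the signal and on dark current, plus read noise, combined in quadrature. The electron counts and the 2-electron read noise are illustrative assumptions, not measurements of any sensor named in this article.

```python
import math


def snr(signal_e: float, dark_e: float = 0.0, read_noise_e: float = 2.0) -> float:
    """Per-pixel SNR under a simple noise model (an assumption, not a vendor model).

    signal_e:     photo-generated signal electrons
    dark_e:       dark-current electrons accumulated during the exposure
    read_noise_e: RMS read noise in electrons
    Shot noise is Poisson, so its variance equals the mean electron count.
    """
    noise = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return signal_e / noise


# Hypothetical dim-light budget: a pixel with 4x the area collects 4x the electrons.
small = snr(signal_e=100.0)
large = snr(signal_e=400.0)
print(f"small pixel SNR: {small:.1f}, large pixel SNR: {large:.1f}")
```

Quadrupling the collected signal roughly doubles SNR here, which is the square-root behavior behind the rule of thumb that bigger pixels look cleaner.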

Pixel pitch, typically expressed in micrometers, determines the physical area available to capture light. A 1.0µm pixel has nearly three times the light-collecting area of a 0.6µm pixel. This difference is not cosmetic; it fundamentally alters noise behavior in dim environments.

| Pixel Pitch | Relative Light Area | Implication |
|---|---|---|
| 0.5µm | 1× (baseline) | Higher reliance on pixel binning and AI noise reduction |
| 0.6–0.7µm | ~1.4–2× | Improved balance of resolution and sensitivity |
| 1.0µm | ~4× | Stronger native low-light performance |
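The ratios in the table follow from squaring the pitch ratio. A small sketch, assuming square pixels and ignoring fill factor and microlens gains:

```python
def relative_area(pitch_um: float, baseline_um: float = 0.5) -> float:
    """Light-collecting area of a square pixel relative to a baseline pitch.

    Assumes square pixels and ignores fill factor and microlens effects,
    so area scales with the square of the pitch.
    """
    return (pitch_um / baseline_um) ** 2


for pitch in (0.5, 0.6, 0.7, 1.0):
    print(f"{pitch:.1f} um -> {relative_area(pitch):.2f}x")

# The "nearly three times" comparison between 1.0 um and 0.6 um pixels:
print(f"1.0 um vs 0.6 um -> {relative_area(1.0, baseline_um=0.6):.2f}x")
```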

Samsung’s achievement of 0.5µm-class pixels, as reported by TechInsights, demonstrates how far miniaturization has progressed. However, shrinking pixel pitch inevitably reduces per-pixel photon capacity, increasing the importance of computational photography to recover detail.

This leads directly to full well capacity, the maximum number of electrons a pixel can store before saturating. FWC determines highlight headroom and dynamic range. The simplified relationship often used in sensor physics defines dynamic range as the ratio between FWC and read noise, usually expressed in decibels or stops.
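In formula form, DR_dB = 20 * log10(FWC / read_noise) and DR_stops = log2(FWC / read_noise), with both quantities in electrons. The sketch below evaluates both; the 6,000-electron well and 1.5-electron read noise are hypothetical values chosen only for illustration, and dark current is ignored.

```python
import math


def dynamic_range(fwc_e: float, read_noise_e: float) -> tuple[float, float]:
    """Engineering dynamic range from full well capacity and read noise.

    Returns (decibels, stops). Uses the simplified ratio FWC / read noise,
    ignoring dark current and other noise sources.
    """
    ratio = fwc_e / read_noise_e
    return 20 * math.log10(ratio), math.log2(ratio)


# Hypothetical pixel values for illustration only.
db, stops = dynamic_range(fwc_e=6000, read_noise_e=1.5)
print(f"{db:.1f} dB, {stops:.1f} stops")
```

Because each stop is a factor of two, one stop corresponds to about 6.02 dB, which is how the 100–120dB figures cited in this article map onto roughly 17 to 20 stops.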

When FWC increases, the sensor can hold more charge before clipping, preserving highlight detail without sacrificing shadow information. According to RedShark News, next-generation mobile sensors targeting 17 to 20 stops of dynamic range rely on structural innovations that effectively expand usable well capacity.

In practical terms, a larger sensor with larger pixels does not just reduce noise; it also delays saturation under bright light. This dual advantage explains why 1-inch class sensors consistently outperform smaller formats in extreme contrast scenes, even before software processing is applied.

Ultimately, sensor physics imposes non-negotiable constraints. You can enhance algorithms, stack transistors, or refine read circuits, but photon statistics remain governed by area and capacity. In 2026’s flagship devices, the competitive edge increasingly depends on how efficiently each square micrometer converts light into stable, high-capacity charge storage.

1-Inch Sensors Go Mainstream: Benefits, Trade-Offs, and Design Constraints

In 2026, 1-inch image sensors are no longer experimental showpieces reserved for niche models. They are becoming mainstream in flagship smartphones, fundamentally reshaping expectations for mobile photography. The shift reflects a broader industry consensus: physical sensor size once again matters more than headline megapixels.

The physics is straightforward. A larger sensor collects more photons per exposure, improving signal-to-noise ratio and tonal depth. According to DXOMARK’s sensor testing methodology, low-light score and dynamic range are strongly correlated with sensor surface area and full well capacity. This is why devices equipped with 1-inch-class sensors consistently dominate high-contrast and night-scene evaluations.

| Sensor Class | Typical Use in 2026 | Key Advantage | Main Constraint |
|---|---|---|---|
| 1/1.3-inch | Mainstream flagship | Balanced size/performance | Limited extreme DR headroom |
| 1-inch | Ultra-tier models | Higher SNR, richer tonal gradation | Module thickness and lens size |

The benefits are tangible. A 1-inch sensor paired with technologies like LOFIC can expand effective dynamic range into the 100–120dB territory, roughly 17–20 stops, as reported by RedShark News in its coverage of next-generation mobile sensors. In practical terms, this means retaining highlight detail in backlit scenes while preserving shadow texture without aggressive multi-frame stacking.

However, the move to 1-inch is not without trade-offs. To keep the same field of view, a larger sensor needs a proportionally longer focal length, and the lens must also cover a larger image circle. That directly increases camera module height, forcing manufacturers to choose between thicker devices or larger camera bumps. As Android Authority has noted, industrial design constraints remain the biggest obstacle to universal 1-inch adoption.
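A rough sketch of why the module grows: for a fixed field of view, the real focal length scales with the sensor diagonal. The code assumes the common rule of thumb that a "1/N-inch" optical format has a diagonal of about 16 mm / N, and a hypothetical 23 mm-equivalent main-camera view; actual sensors and lenses deviate from both.

```python
import math

FULL_FRAME_DIAG_MM = math.hypot(36.0, 24.0)  # about 43.27 mm


def approx_diagonal_mm(inch_type_denominator: float) -> float:
    """Rule-of-thumb diagonal for a '1/N-inch' optical format.

    Assumes about 16 mm of diagonal per 'inch' of optical format (a 1-inch
    type sensor is roughly 16 mm diagonal); real parts vary slightly.
    """
    return 16.0 / inch_type_denominator


def real_focal_length_mm(equiv_focal_mm: float, diag_mm: float) -> float:
    """Focal length giving the same field of view as equiv_focal_mm on full frame."""
    return equiv_focal_mm * diag_mm / FULL_FRAME_DIAG_MM


for label, n in (("1/1.3-inch", 1.3), ("1-inch", 1.0)):
    f = real_focal_length_mm(23.0, approx_diagonal_mm(n))
    print(f"{label}: ~{f:.1f} mm real focal length for a 23 mm-equivalent view")
```

Under these assumptions the 1-inch module needs a lens about 1.3 times longer than the 1/1.3-inch one, and that extra length shows up as thickness or camera-bump height.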

Thermal and power considerations also intensify. 1-inch sensors are typically paired with 50MP or higher resolutions, and it is pixel count, not sensor area, that determines how much data each frame produces. Moving that data requires stronger image signal processors and more advanced memory pipelines, increasing power draw during 4K or 8K video capture. Without careful optimization, sustained performance can throttle.
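To put the data volume in perspective, here is a back-of-the-envelope sketch. It assumes the full pixel array is read every frame at a hypothetical 12 bits per pixel with no binning, cropping, or compression; real video modes usually bin or crop, so these are upper-bound illustrations rather than figures for any specific phone.

```python
def raw_readout_gbps(megapixels: float, bits_per_pixel: int, fps: float) -> float:
    """Raw sensor output bandwidth in gigabits per second.

    Assumes the full pixel array is read every frame with no binning,
    cropping, or compression.
    """
    return megapixels * 1e6 * bits_per_pixel * fps / 1e9


# Hypothetical scenarios for illustration.
print(raw_readout_gbps(50, 12, 30))  # full 50MP array at 30 fps
print(raw_readout_gbps(50, 12, 60))  # full 50MP array at 60 fps
```

Bandwidth scales linearly with both resolution and frame rate, which is why sustained high-resolution capture stresses the ISP, memory, and thermal budget together.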

Another subtle constraint is lens design. To fully resolve a 1-inch sensor, manufacturers must deploy higher-quality optics with tighter tolerances. Otherwise, edge softness and field curvature negate the theoretical advantage of the larger silicon. This partially explains why 1-inch sensors are often paired with co-engineered optics in premium “Ultra” devices.

Despite these challenges, supply diversification is accelerating adoption. With companies like OmniVision and SmartSens entering the 1-inch segment alongside Sony, competition is reducing exclusivity. As reported by GSMArena, wider LOFIC integration beginning in 2026 further enhances the value proposition of large sensors by improving single-exposure HDR.

For performance-focused users, the appeal is obvious. A 1-inch sensor delivers cleaner night images, smoother tonal roll-off, and more natural subject separation without relying excessively on computational blur. Yet the mainstream future depends on solving packaging efficiency. Until lens thickness, heat dissipation, and cost structure are optimized, 1-inch sensors will remain concentrated in high-end flagships rather than universal standards.

Even so, 2026 marks a turning point. What was once a bold marketing statement is steadily becoming an expected benchmark in premium mobile imaging.

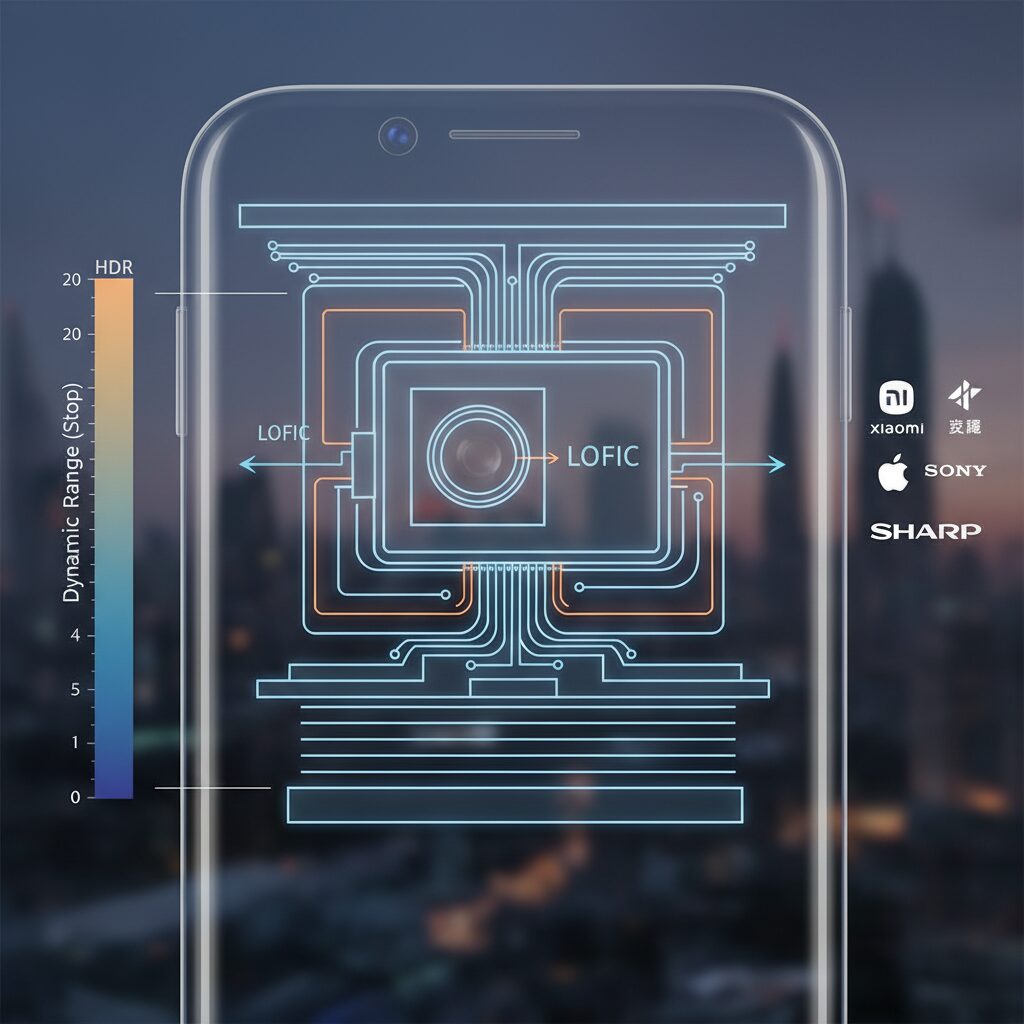

LOFIC Explained: How Lateral Overflow Integration Capacitors Redefine HDR

LOFIC, short for Lateral Overflow Integration Capacitor, is emerging as one of the most transformative sensor technologies in 2026. Instead of relying on multiple exposures to simulate high dynamic range, LOFIC fundamentally changes how a pixel handles light at the hardware level.

In a conventional CMOS sensor, once a photodiode reaches its full well capacity, excess charge is clipped, resulting in blown highlights. LOFIC adds a lateral capacitor alongside the photodiode in each pixel that temporarily stores overflow electrons, effectively expanding the pixel’s charge-handling capability in a single exposure.
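The overflow mechanism can be illustrated with a toy model. This is a simplified sketch, not any vendor’s pixel design: the 6,000-electron photodiode well and 60,000-electron capacitor are made-up numbers, and real LOFIC pixels also differ in how and when the high-gain and low-gain signals are sampled and merged.

```python
from dataclasses import dataclass


@dataclass
class LoficPixel:
    """Toy model of one LOFIC pixel (illustrative capacities, not real specs).

    pd_fwc_e:  electrons the photodiode holds before charge overflows
    cap_fwc_e: extra electrons the lateral overflow capacitor can hold
    """

    pd_fwc_e: float = 6_000.0
    cap_fwc_e: float = 60_000.0

    @property
    def effective_fwc_e(self) -> float:
        """Total charge the pixel can record in one exposure."""
        return self.pd_fwc_e + self.cap_fwc_e

    def expose(self, electrons: float) -> tuple[float, float]:
        """Split generated charge between photodiode and overflow capacitor."""
        in_pd = min(electrons, self.pd_fwc_e)
        overflow = max(electrons - self.pd_fwc_e, 0.0)
        in_cap = min(overflow, self.cap_fwc_e)  # charge beyond this still clips
        return in_pd, in_cap

    def read(self, electrons: float) -> tuple[float, str]:
        """Reconstruct the signal from a single exposure and report the path used."""
        in_pd, in_cap = self.expose(electrons)
        if in_cap == 0.0:
            # No overflow: read the photodiode alone on the low-noise, high-gain path.
            return in_pd, "high-gain"
        # Overflow occurred: combine photodiode and capacitor charge on the low-gain path.
        return in_pd + in_cap, "low-gain"


pixel = LoficPixel()
for e in (1_000.0, 30_000.0, 100_000.0):
    print(e, pixel.read(e))
```

Without the capacitor, this hypothetical pixel would clip at 6,000 electrons; with it, the single-exposure ceiling rises to 66,000, an 11x increase, or about 3.5 extra stops if read noise stayed constant. In practice the low-gain path is noisier, so the net gain is smaller.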

LOFIC increases Full Well Capacity (FWC) at the pixel level, enabling dramatically wider dynamic range without multi-frame HDR artifacts.

This hardware-based approach directly addresses the core weakness of traditional HDR. Multi-frame HDR blends short and long exposures, but moving subjects often produce ghosting and edge artifacts. By contrast, LOFIC captures highlight and shadow information simultaneously, eliminating the need for temporal alignment.

According to industry reports covered by GSMArena and RedShark News, next-generation LOFIC sensors are targeting dynamic ranges between 100dB and 120dB, equivalent to roughly 17 to 20 stops. That territory has traditionally been reserved for professional cinema cameras.

| Technology | Dynamic Range Approach | Motion Artifacts |
|---|---|---|
| Multi-frame HDR | Composite of multiple exposures | Possible ghosting |
| LOFIC (Single Exposure) | Expanded in-pixel charge storage | Minimal |

The physics behind this matters. Dynamic range can be approximated as the ratio between full well capacity and read noise. By multiplying FWC several times over, as seen in sensors like OmniVision’s recent 1-inch class designs, the signal-to-noise ratio in highlights improves without sacrificing shadow detail.

In practical shooting scenarios, this means sunsets retain color gradation around the sun while preserving foreground texture. High-contrast indoor scenes with bright windows no longer require aggressive tone mapping that flattens midtones.

Sony Semiconductor Solutions has also indicated that maintaining high dynamic range during zoom operations is a development priority, suggesting that LOFIC integration is not just about still photography but also about consistent HDR in video capture.

Another crucial implication is power efficiency. Because LOFIC reduces dependence on multi-frame stacking, image signal processors perform fewer alignment and fusion operations. This can lower computational load during 4K or 8K HDR recording, an advantage increasingly relevant for thermal management in slim devices.

LOFIC represents a shift from computational compensation to physical capability. Instead of fixing limitations after capture, it expands the capture envelope itself. For gadget enthusiasts tracking sensor evolution, this marks a genuine architectural leap rather than incremental tuning.

Dynamic Range in Numbers: 17 to 20 Stops and What It Means in Real-World Shooting

When you hear that a smartphone sensor delivers 17 to 20 stops of dynamic range, it may sound like marketing jargon. However, in imaging science, a “stop” has a precise meaning: each stop represents a doubling of light. In other words, a 20-stop sensor can theoretically capture a brightness ratio of 2^20, roughly 1,048,576:1, between the darkest distinguishable shadow and the brightest unclipped highlight.

Dynamic range in stops is the base-2 logarithm of the ratio between full well capacity and the noise floor. As explained in sensor testing methodologies such as those referenced by DXOMARK, the wider this ratio, the more tonal information a sensor can retain before shadows turn to noise or highlights clip to pure white.

| Dynamic Range | Light Ratio (Approx.) | Real-World Implication |
|---|---|---|
| 12 stops | 4,096:1 | Typical older smartphone HDR |
| 17 stops | 131,072:1 | High-end single-exposure mobile sensor |
| 20 stops | 1,048,576:1 | Approaching cinema camera territory |

The jump from 12 to 17 stops is not incremental. It represents a more than 30-fold increase in measurable contrast range. Moving from 17 to 20 stops expands that range by another factor of eight. This exponential growth is why even a few additional stops dramatically change what you can capture in a single frame.
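The arithmetic behind these jumps is easy to check. The sketch below converts stops to contrast ratios and to decibels using the 20 * log10 convention common in sensor specifications; it reproduces the table values and the 32-fold and eightfold steps.

```python
import math


def contrast_ratio(stops: float) -> float:
    """Each stop doubles the light, so n stops span a 2**n : 1 ratio."""
    return 2.0 ** stops


def stops_to_db(stops: float) -> float:
    """Convert stops to the decibel convention used for sensor DR (20 * log10)."""
    return 20 * math.log10(contrast_ratio(stops))


for s in (12, 17, 20):
    print(f"{s} stops = {contrast_ratio(s):,.0f}:1, about {stops_to_db(s):.0f} dB")

print(contrast_ratio(17) / contrast_ratio(12))  # 12 -> 17 stops
print(contrast_ratio(20) / contrast_ratio(17))  # 17 -> 20 stops
```

The decibel column also shows why the 100–120dB range quoted for LOFIC sensors lines up with 17 to 20 stops.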

So what does 17 to 20 stops mean in real-world shooting? Imagine photographing a sunset cityscape. The sky near the sun may be thousands of times brighter than the shaded streets below. With limited dynamic range, you must choose: preserve the sky and lose shadow detail, or expose for buildings and blow out the clouds.

With a 17-stop sensor built on technologies such as LOFIC, each pixel can store overflow charge instead of immediately saturating. According to industry reports from RedShark News and Sony Semiconductor Solutions, this allows highlight retention without relying solely on multi-frame HDR stacking. You can preserve texture in bright clouds while still seeing detail in window frames and asphalt in one exposure.

At 20 stops, the practical benefit becomes even clearer in extreme contrast scenes. Shooting indoors against a bright window is a classic torture test. Lower-range sensors often produce halo artifacts or ghosting when computational HDR merges multiple frames. A high-stop single-exposure approach minimizes motion artifacts, which is crucial for moving subjects like people turning their heads or cars passing by.

Video is another area where these numbers matter. Multi-frame HDR works well for stills, but in video it can introduce flicker and rolling inconsistencies. A sensor capable of maintaining 17 or more stops natively provides smoother highlight roll-off and more gradation in shadows frame after frame. This results in footage that looks less “processed” and more cinematic.

It is important to note that real-world usable dynamic range depends on processing pipelines, tone mapping, and display capabilities. A phone may capture 20 stops at the sensor level, but what you see on a 1,000 to 3,000 nit display is compressed into a narrower output range. Even so, having more captured data gives the ISP and AI algorithms far greater flexibility to map tones naturally.
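To illustrate that compression step, the sketch below applies the basic global Reinhard tone curve, L / (1 + L), to scene luminances spanning 20 stops. This is a well-known textbook operator chosen only as an example; it is not the curve any phone maker named here uses, and real ISPs typically apply local, scene-adaptive tone mapping.

```python
def reinhard(luminance: float, key: float = 1.0) -> float:
    """Basic global Reinhard tone curve: maps [0, inf) into [0, 1).

    `key` scales scene luminance before compression (a simplifying choice
    for this sketch).
    """
    scaled = luminance * key
    return scaled / (1.0 + scaled)


# Relative scene luminances spanning 20 stops (2**-10 .. 2**10), in 5-stop steps.
for stop in range(-10, 11, 5):
    scene = 2.0 ** stop
    print(f"{stop:+d} stops -> display {reinhard(scene):.4f}")
```

Shadows pass through almost linearly while highlights are squeezed asymptotically toward the display maximum instead of clipping, which is the kind of flexibility that extra captured stops give the tone-mapping stage.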

For enthusiast shooters, 17 to 20 stops means fewer compromises. You can backlight portraits, shoot night streets with bright signage, or capture stage performances with spotlights without fearing irrecoverable highlights. These numbers are not abstract. They directly translate into freedom: more margin for exposure errors, richer gradation, and images that more closely resemble how your eyes perceive high-contrast scenes.

Flagship Sensor Comparison 2026: Xiaomi, Apple, Samsung, Sony, Vivo, and Sharp

In 2026, flagship sensor competition is no longer defined by megapixels alone but by how each brand balances physical size, pixel architecture, and next-generation dynamic range technologies. The shift from simple resolution races to structural innovation is clearly visible across Xiaomi, Apple, Samsung, Sony, Vivo, and Sharp.

According to DXOMARK’s sensor testing protocol, dynamic range and texture retention under extreme contrast now weigh heavily in real-world scoring. That evaluation framework explains why manufacturers are investing in larger sensors, stacked transistor designs, and LOFIC-based HDR rather than merely increasing pixel counts.

| Brand / Model | Main Sensor Size | Resolution | Key Technology |
|---|---|---|---|
| Xiaomi 17 Ultra | 1.0-inch | 50MP | LOFIC, Light Hunter 1050L (OmniVision) |
| iPhone 17 Pro Max | 1/1.28-inch | 48MP | 2-layer stacked transistor (Exmor T) |
| Galaxy S26 Ultra | 1/1.3-inch | 200MP | ISOCELL HP series, 0.5–0.6μm pixels |
| Xperia 1 VII | 1/1.35-inch | 48MP | Exmor T for mobile |
| Vivo X300 Ultra | 1/1.12-inch | 200MP | Sony LYT-901 |
| AQUOS R10 | 1/1.55-inch | 50.3MP | 14-channel spectral sensor |

Xiaomi currently pushes the physical limit with a true 1-inch type sensor in the 17 Ultra. By integrating OmniVision’s LOFIC-based Light Hunter 1050L, it dramatically increases full well capacity. Industry reports indicate up to 6.3× improvement over the previous generation, directly benefiting highlight preservation in high-contrast scenes.
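Taking the reported 6.3× full-well figure at face value, the dynamic range gain in stops is log2 of the FWC multiplier divided by any change in the noise floor. The sketch below shows the optimistic case with unchanged read noise and a hypothetical case where the noise floor rises by 1.5×; the second number is an assumption for illustration, not a published specification.

```python
import math


def extra_stops(fwc_multiplier: float, read_noise_multiplier: float = 1.0) -> float:
    """Dynamic range gained (in stops) when FWC and read noise are scaled.

    DR_stops = log2(FWC / read_noise), so scaling both terms adds
    log2(fwc_multiplier / read_noise_multiplier) stops.
    """
    return math.log2(fwc_multiplier / read_noise_multiplier)


print(f"{extra_stops(6.3):.2f}")       # read noise unchanged (optimistic)
print(f"{extra_stops(6.3, 1.5):.2f}")  # hypothetical 1.5x higher noise floor
```

A 6.3× larger well is therefore worth at most about 2.7 stops on its own; the rest of any claimed headline range has to come from the noise floor and readout design.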

Apple takes a different path. The iPhone 17 Pro Max uses a 1/1.28-inch 48MP sensor with a two-layer transistor pixel structure, branded under Sony’s Exmor T architecture. Rather than maximizing size, Apple focuses on readout efficiency and consistent color science across lenses, optimizing the sensor-to-ISP pipeline with the A19 Pro.

Samsung remains committed to ultra-high resolution. The Galaxy S26 Ultra continues with 200MP, leveraging ISOCELL pixel miniaturization down to 0.5–0.6μm. As TechInsights notes, this milestone enables extreme detail capture, but it also increases reliance on pixel binning and AI remosaic to maintain low-light performance.

Sony’s Xperia 1 VII demonstrates how stacked transistor design compensates for slightly smaller sensor dimensions. The 1/1.35-inch Exmor T for mobile separates photodiodes and transistors into distinct layers, improving light efficiency per pixel. This architectural refinement narrows the gap with physically larger sensors.

Vivo’s X300 Ultra positions itself between size and resolution extremes. Its 1/1.12-inch 200MP Sony LYT-901 sensor combines high pixel density with a comparatively large optical format, aiming to deliver both detailed zoom crops and strong base dynamic range.

Sharp’s AQUOS R10 offers a distinctive approach. Instead of pursuing the largest sensor, it integrates a 14-channel spectral sensor to enhance color accuracy. This multi-channel measurement supports faithful reproduction under mixed lighting, aligning with Sharp’s emphasis on natural tonal rendering.

Across these flagships, one pattern becomes clear: sensor innovation in 2026 is defined by architecture, not just area. LOFIC expands dynamic range at the hardware level, stacked designs improve photon efficiency, and ultra-high resolution sensors depend increasingly on computational reconstruction.

For enthusiasts comparing these brands, the real differentiator lies in how each company interprets sensor physics. Xiaomi maximizes surface area and charge capacity. Apple optimizes integration and processing harmony. Samsung scales resolution aggressively. Sony refines layered semiconductor design. Vivo balances density and format size. Sharp prioritizes spectral fidelity. The competition is no longer about who has the biggest number, but who engineers the smartest pixel.

Xiaomi 17 Ultra: 1-Inch Sensor, Leica Optics, and Continuous Optical Zoom

Xiaomi 17 Ultra pushes mobile imaging into true camera territory by combining a 1-inch main sensor, Leica co-engineered optics, and a mechanically driven continuous optical zoom system. Rather than relying solely on computational tricks, it builds its imaging philosophy on physical light capture and precision optics.

The main camera uses a 1.0-inch 50MP sensor branded as Light Hunter 1050L, supplied by OmniVision. According to industry coverage and Xiaomi’s official announcement, this sensor integrates LOFIC technology, dramatically increasing full well capacity and expanding dynamic range in a single exposure.

| Component | Specification | Key Advantage |
|---|---|---|
| Main Sensor | 1.0-inch, 50MP (Light Hunter 1050L) | Higher photon capture, improved SNR |
| HDR Tech | LOFIC | Expanded dynamic range without ghosting |
| Telephoto | 200MP Periscope | Continuous optical zoom (75–100mm) |

A 1-inch sensor significantly increases the light-receiving area compared to the 1/1.3-inch class common in other flagships. As DXOMARK’s sensor testing methodology explains, larger photosites improve signal-to-noise ratio by capturing more photons per exposure. In practical terms, this means cleaner shadows, smoother tonal transitions, and more natural highlight roll-off, especially in high-contrast scenes.

What makes Xiaomi 17 Ultra particularly compelling is how Leica tuning complements the hardware. Leica’s color science prioritizes micro-contrast and realistic tonal gradation rather than aggressive saturation. Reviews from Notebookcheck highlight that the device preserves texture in low light while maintaining controlled highlights, a result that aligns with the extended full well capacity enabled by LOFIC.

The telephoto system represents another leap. Instead of switching between fixed focal lengths, the 200MP periscope module delivers continuous optical zoom from 75mm to 100mm. It does this by moving lens groups inside the module, the same principle used in the zoom lenses of dedicated cameras.
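To see what that range means for framing, the sketch below computes the diagonal angle of view across it. It assumes the quoted 75mm and 100mm figures are 35mm-equivalent focal lengths, which is the usual convention in smartphone specifications but is not stated explicitly here.

```python
import math

FULL_FRAME_DIAG_MM = math.hypot(36.0, 24.0)  # about 43.27 mm


def diagonal_fov_deg(equiv_focal_mm: float) -> float:
    """Diagonal angle of view for a 35mm-equivalent focal length."""
    return math.degrees(2 * math.atan(FULL_FRAME_DIAG_MM / (2 * equiv_focal_mm)))


for f in (75, 85, 100):
    print(f"{f} mm -> {diagonal_fov_deg(f):.1f} deg diagonal")
```

Under that assumption, the view narrows smoothly from about 32 degrees to about 24 degrees, with every intermediate framing available optically rather than by cropping.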

This design avoids the abrupt framing jumps and hybrid interpolation artifacts typical of stepped zoom systems. Mid-telephoto portraits benefit the most, with consistent sharpness across the zoom range and compression characteristics that feel optically authentic rather than digitally simulated.

For enthusiasts, the Leica Edition adds a physical master zoom ring, reinforcing tactile control. Adjusting focal length and exposure through hardware input changes how you shoot, encouraging deliberate framing instead of tap-and-hope capture.

In 2026’s competitive landscape, many brands pursue higher megapixel counts or AI enhancement. Xiaomi 17 Ultra instead doubles down on physics: sensor size, optical precision, and expanded dynamic range at the pixel level. For users who prioritize tonal depth, optical authenticity, and mechanical zoom continuity, this configuration represents one of the most technically ambitious implementations currently available.

Apple iPhone 17 Pro Max: Stacked Sensors, A19 Pro, and Video-Centric Optimization

The iPhone 17 Pro Max approaches mobile imaging from a different angle than the 1-inch sensor race. Instead of chasing sheer sensor size, it refines a 1/1.28-inch 48MP main sensor built on Sony’s Exmor T stacked architecture, prioritizing readout speed, noise control, and video consistency.

According to Apple’s official technical specifications, all rear cameras adopt 48MP sensors, enabling uniform color science and detail rendering across focal lengths. This design philosophy minimizes inter-lens disparity, which is critical for professional workflows that switch perspectives mid-shoot.

The core idea is not maximum hardware scale, but maximum integration between stacked sensor, ISP, and Neural Engine.

The stacked, two-layer transistor pixel structure separates photodiodes and processing circuitry onto different layers. This increases light efficiency and reduces read noise, a factor that DXOMARK identifies as essential for improving signal-to-noise ratio in compact sensors.

In practical terms, faster sensor readout reduces rolling shutter distortion during 4K and 8K capture. This is especially relevant for handheld creators shooting action scenes, where geometric distortion can break immersion.
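The link between readout speed and distortion is simple arithmetic: rows are read one after another, so a horizontally moving subject shifts by its speed multiplied by the frame readout time between the top and bottom of the image. The readout times and subject speed below are hypothetical values for illustration, not measured iPhone figures.

```python
def rolling_shutter_skew_px(readout_ms: float, speed_px_per_s: float) -> float:
    """Horizontal skew between the first and last sensor rows, in pixels.

    Rows are read sequentially, so an object moving horizontally shifts by
    speed * readout_time between the top and bottom of the frame.
    """
    return speed_px_per_s * readout_ms / 1000.0


# Hypothetical: a subject crossing a 3840-px-wide 4K frame in one second.
for readout in (20.0, 10.0, 5.0):
    print(f"{readout:.0f} ms readout -> {rolling_shutter_skew_px(readout, 3840):.1f} px skew")
```

Halving the readout time halves the skew, which is why faster stacked-sensor readout translates directly into straighter verticals in fast pans.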

| Component | Specification | Impact on Video |
|---|---|---|
| Main Sensor | 1/1.28-inch, 48MP, stacked (Exmor T) | Higher light efficiency, reduced noise |
| Chipset | A19 Pro with 16-core Neural Engine | Real-time segmentation and tone mapping |
| Future Dynamic Range Target | Up to 20 stops (LOFIC prototype research, not in this model) | Potential cinema-grade highlight retention |

The A19 Pro plays a decisive role in video-centric optimization. Its 16-core Neural Engine processes segmentation data in real time, separating skin tones, sky gradients, and foreground subjects frame by frame. Apple emphasizes that this happens at the raw data level before compression, preserving tonal subtlety.

Industry coverage from RedShark News notes that Apple is developing a LOFIC-based sensor prototype targeting up to 20 stops of dynamic range. While not yet mainstream, this signals a long-term ambition to rival dedicated cinema cameras in highlight and shadow retention.

Another under-discussed advantage lies in ecosystem stability. Consistent 48MP resolution across lenses enables seamless ProRes workflows, with fewer color shifts when cutting between ultra-wide, wide, and telephoto footage.

For creators who prioritize motion fidelity over extreme sensor size, the iPhone 17 Pro Max positions itself as a computational video platform rather than a hardware brute-force device.

Security also intersects with imaging. The A19 Pro integrates Memory Integrity Enforcement, a hardware-assisted defense against the memory-corruption exploits that advanced spyware relies on. It does not authenticate footage by itself, but for journalists and documentary filmmakers, a device that is harder to compromise lowers the risk that captured material is accessed or tampered with.

In a market increasingly defined by 1-inch sensors and LOFIC breakthroughs, the iPhone 17 Pro Max demonstrates that stacked sensor efficiency, silicon-level AI acceleration, and tightly controlled color science can collectively redefine what “Pro” video means on a smartphone.

Samsung Galaxy S26 Ultra: 200MP Strategy, 0.5–0.6μm Pixels, and AI Compensation

Samsung continues to double down on its 200MP philosophy with the Galaxy S26 Ultra, even as rivals pivot toward larger 1-inch sensors and LOFIC-based dynamic range expansion. Instead of increasing sensor size, Samsung refines pixel miniaturization and computational recovery. The core idea is clear: maximize spatial resolution first, then restore light efficiency through AI.

The S26 Ultra is built around an improved sensor from the ISOCELL HP2 lineage in the 1/1.3-inch class, while Samsung’s broader roadmap includes 0.5μm-class pixels, a milestone confirmed by TechInsights. A 0.5μm pitch is one of the smallest ever commercialized in mobile imaging, pushing semiconductor fabrication to its limits.

| Model / Sensor | Sensor Size | Pixel Pitch | Approach |
|---|---|---|---|
| Galaxy S26 Ultra | 1/1.3-inch | ~0.6μm class | High MP + AI remosaic |
| ISOCELL HP5 (reference) | 1/1.56-inch | 0.5μm | Ultra-dense integration |

From a physics standpoint, shrinking pixel pitch reduces both the light-collecting area and the full well capacity of each pixel: each pixel captures fewer photons and saturates sooner. As DXOMARK explains in its sensor testing methodology, signal-to-noise ratio depends directly on photon count. Smaller pixels therefore collect less light individually, which traditionally increases noise in low-light conditions.
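
To make the photon-count argument concrete, the sketch below assumes an ideal shot-noise-limited pixel (no read noise, dark current, or quantum-efficiency losses) and a hypothetical uniform exposure of 100 photons per square micrometre. Under those assumptions SNR equals the square root of the photon count, so it scales linearly with pixel pitch. The helper names are illustrative only.

```python
import math


def photons_per_pixel(pitch_um: float, photons_per_um2: float) -> float:
    """Photons collected by a square pixel of the given pitch (ideal 100% fill factor)."""
    return photons_per_um2 * pitch_um ** 2


def shot_noise_snr(photons: float) -> float:
    """Shot-noise-limited SNR: signal N divided by noise sqrt(N), i.e. sqrt(N)."""
    return math.sqrt(photons) if photons > 0 else 0.0


# Hypothetical scene exposure: 100 photons per square micrometre.
FLUX = 100.0
for pitch in (0.6, 1.0, 1.6):
    n = photons_per_pixel(pitch, FLUX)
    print(f"{pitch:.1f} um pixel: {n:6.1f} photons, SNR {shot_noise_snr(n):5.2f}")
```

Under these assumptions a 0.6μm pixel reaches an SNR of about 6 where a 1.6μm pixel reaches about 16; real sensors add read noise, which makes small pixels look worse still in dim scenes.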

Samsung’s answer is aggressive pixel binning and AI remosaic processing. In typical shooting modes, multiple adjacent pixels are merged to simulate a larger effective pixel, improving light sensitivity. When lighting is sufficient, the system reconstructs a full 200MP image using deep-learning-based detail inference. This dual-mode strategy allows flexibility between ultra-high detail and improved low-light performance.
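
A minimal sketch of binning, assuming 4x4 (16-to-1) summing of 0.6μm pixels and ignoring read noise and the color filter layout. Samsung’s actual binning and remosaic pipeline is not public, so `bin_pixels` and `binned_snr_gain` are hypothetical helpers, not a Samsung API. Summing 16 shot-noise-limited pixels multiplies the signal by 16 and the noise by 4, a 4x SNR gain.

```python
import math


def bin_pixels(frame, factor):
    """Sum non-overlapping factor x factor blocks of a 2D list of photon counts."""
    rows, cols = len(frame), len(frame[0])
    if rows % factor or cols % factor:
        raise ValueError("frame dimensions must be divisible by the binning factor")
    return [
        [
            sum(frame[r + i][c + j] for i in range(factor) for j in range(factor))
            for c in range(0, cols, factor)
        ]
        for r in range(0, rows, factor)
    ]


def binned_snr_gain(factor):
    """Shot-noise SNR gain from summing factor**2 pixels: sqrt(factor**2) = factor."""
    return math.sqrt(factor * factor)


# Assumed 4x4 binning: 200MP -> 12.5MP output, 0.6um pitch -> 2.4um effective pixel.
print(200 / 4 ** 2, 0.6 * 4, binned_snr_gain(4))
frame = [[36] * 4 for _ in range(4)]  # uniform 36 photons per 0.6um pixel
print(bin_pixels(frame, 4))           # one binned pixel holding 16 * 36 photons
```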

The trade-off becomes visible when comparing market trends. As of early 2026, DXOMARK rankings show that many top-performing devices rely on larger sensors and extended dynamic range technologies. Samsung instead prioritizes in-sensor zoom and cropping freedom. A 200MP frame leaves enough pixels after cropping to support high-quality crop zoom that lower-resolution sensors cannot match, especially in daylight.
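
The cropping arithmetic is simple: a centre crop at linear zoom factor z keeps 1/z² of the pixels. The sketch compares a 200MP sensor with a hypothetical 50MP one; pixel count is only an upper bound on resolved detail, since lens sharpness and remosaic quality also limit what a crop can show.

```python
def cropped_megapixels(full_mp: float, zoom: float) -> float:
    """Pixels left after a centre crop: linear zoom z keeps 1/z**2 of the frame."""
    if zoom < 1:
        raise ValueError("zoom must be >= 1")
    return full_mp / zoom ** 2


for sensor_mp in (200, 50):
    row = ", ".join(f"{z}x: {cropped_megapixels(sensor_mp, z):.1f}MP" for z in (1, 2, 4))
    print(f"{sensor_mp}MP sensor -> {row}")
```

At 4x, the 200MP frame still yields 12.5MP, while the 50MP frame drops to roughly 3MP.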

However, enthusiast communities have raised concerns about certain secondary sensors reportedly shrinking in physical size compared to previous generations. While such critiques focus on hardware regression, Samsung’s public emphasis remains on computational photography as the equalizer.

In practical use, this means sharper textures in architecture shots, greater cropping latitude for wildlife or sports, and more flexible reframing in post-processing. The camera system treats resolution as data density, feeding Samsung’s ISP and AI models with richer information for noise reduction and sharpening.

Ultimately, the Galaxy S26 Ultra represents a different interpretation of flagship imaging in 2026. While competitors pursue larger photodiodes and LOFIC-driven dynamic range gains, Samsung advances semiconductor scaling and neural processing synergy. It is a bet that algorithmic compensation can offset the physical compromises of 0.5–0.6μm pixels, and that users will value versatility and zoom freedom as much as raw sensor size.

Sony Xperia 1 VII and Sharp AQUOS R10: Pro Workflows and Spectral Accuracy

For creators who demand predictable color science and reliable grading latitude, Sony Xperia 1 VII and Sharp AQUOS R10 present two distinct yet professional approaches. Rather than competing on sheer sensor size, both devices focus on workflow integrity and spectral fidelity, aligning closely with broadcast and photographic standards.

Xperia 1 VII emphasizes pipeline consistency from capture to post-production, while AQUOS R10 prioritizes faithful color reproduction at the point of capture. This philosophical difference shapes how each device integrates into pro workflows.

| Model | Main Sensor | Core Imaging Strength | Workflow Focus |
|---|---|---|---|
| Xperia 1 VII | 1/1.35″ 48MP Exmor T (2-layer) | Stacked transistor architecture | Manual control & video tools |
| AQUOS R10 | 1/1.55″ 50.3MP | 14-channel spectral sensor | Color accuracy & display validation |

Sony’s Exmor T for mobile sensor uses a two-layer transistor pixel structure, separating photodiodes and transistors to improve light collection efficiency. According to Sony Semiconductor Solutions, this stacked design enhances signal conversion while suppressing noise in high-contrast scenes. In practice, this means cleaner shadow recovery when grading log-style footage, particularly in mixed lighting.

The Xperia 1 VII also inherits operational logic from Sony’s Alpha mirrorless ecosystem. Dedicated manual exposure control, real-time eye AF, and extended optical zoom coverage from 85mm to 170mm allow creators to treat the phone as a compact production tool. The AI Camerawork and Auto Framing features further support solo video creators by maintaining subject framing without external gimbals.

This is not merely computational photography; it is workflow-aware engineering. Color profiles remain consistent across focal lengths, reducing correction time in post. For editors working in Rec.709 or HDR timelines, predictable tonal roll-off is critical.

Sharp’s AQUOS R10 takes a different route with its 14-channel spectral sensor. Instead of relying solely on RGB data, the additional channels analyze finer wavelength variations. As Sharp states in its official release, this enables reproduction closer to “what the human eye perceives.” In controlled comparisons, this translates into more stable white balance under complex LED or mixed fluorescent environments.
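
Sharp has not published how the 14-channel readings feed its pipeline. The sketch below only illustrates the general principle, assuming the spectral sensor yields a more reliable estimate of the scene illuminant expressed as camera RGB. Von Kries-style diagonal gains, a standard white-balance technique rather than Sharp’s disclosed method, then map that illuminant to neutral. The illuminant values are hypothetical.

```python
def wb_gains(illuminant_rgb):
    """Diagonal (von Kries-style) gains that map the estimated illuminant to neutral grey.

    Gains are normalised to the green channel, a common convention in camera pipelines.
    """
    r, g, b = illuminant_rgb
    if min(r, g, b) <= 0:
        raise ValueError("illuminant components must be positive")
    return (g / r, 1.0, g / b)


def apply_gains(pixel_rgb, gains):
    """Scale each channel of a linear RGB pixel by its white-balance gain."""
    return tuple(p * k for p, k in zip(pixel_rgb, gains))


# Hypothetical illuminant estimate for a warm LED (more red, less blue).
warm_led = (1.25, 1.0, 0.8)
gains = wb_gains(warm_led)
print(gains)
print(apply_gains(warm_led, gains))  # a white surface under this light maps to neutral
```

The better the illuminant estimate, the closer neutral surfaces land to grey, which is why extra spectral channels can stabilize white balance under mixed LED or fluorescent light.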

The synergy with the Pro IGZO OLED display, reaching up to 3000 nits peak brightness, strengthens on-device verification. High dynamic range images can be evaluated immediately with minimal perceptual mismatch. For photographers delivering fast-turnaround content, this reduces reliance on external reference monitors.

Xperia 1 VII optimizes capture flexibility and grading latitude, while AQUOS R10 optimizes spectral accuracy and on-device color validation.

From a professional standpoint, the distinction matters. If your priority is controlled video production and seamless integration into established editing pipelines, Xperia’s Alpha-derived philosophy offers familiarity and depth. If your priority is faithful color capture in unpredictable lighting with minimal correction, AQUOS R10’s spectral approach provides measurable advantages.

Both devices demonstrate that in 2026, pro imaging is no longer defined solely by sensor size. It is defined by how intelligently the sensor data flows through the entire creative process.

DxOMark Rankings and Market Data: Who Leads the 2026 Camera Race?

In 2026, the global camera race is no longer defined by megapixels alone but by measurable imaging performance. DxOMark’s smartphone ranking, updated in January 2026, provides one of the most widely cited third‑party benchmarks, evaluating exposure, dynamic range, texture, noise, autofocus, and video under a standardized protocol. According to DxOMark, the gap between the top 10 devices has narrowed, yet the technological direction of the leaders is remarkably consistent.

The top tier is dominated by large sensors (1/1.3-inch or bigger) combined with advanced HDR architectures such as LOFIC or stacked designs. This signals that hardware innovation, not just AI tuning, is driving measurable gains in lab and real‑world testing.

| Rank (Jan 2026) | Device | Camera Score | Key Hardware Trait |
|---|---|---|---|
| 1 | Huawei Pura 80 Ultra | 175 | 1-inch class sensor + variable aperture |
| 2 | Vivo X300 Pro | 171 | LYT-828 with LOFIC |
| 3 | Oppo Find X8 Ultra | 168 | Dual periscope telephoto |
| 4 | iPhone 17 Pro | 168 | Advanced video processing (A19 Pro) |

Huawei’s 175-point score places it clearly at the top, leveraging a 1-inch class sensor and variable aperture to balance depth of field and highlight control. Vivo follows closely, and notably integrates a LOFIC-equipped Sony LYT sensor, reinforcing the importance of expanded full well capacity for single-exposure HDR performance.

Apple’s iPhone 17 Pro, tied with the Oppo Find X8 Ultra at 168 points, demonstrates a different strategy. Rather than relying solely on sensor size, Apple maximizes consistency across lenses and prioritizes video stability and color science. Apple’s official technical documentation highlights the A19 Pro chip’s real-time semantic rendering, and this processing is a likely contributor to the phone’s strong video sub-scores in DxOMark’s protocol.

Beyond rankings, market data indicates a broader structural shift. Coverage from GSMArena, together with announcements from Sony Semiconductor Solutions, indicates that LOFIC adoption is accelerating in 2026, particularly in 1/1.3-inch and larger sensors. This aligns with the scoring pattern in DxOMark’s top 10, where devices lacking next-generation HDR architectures struggle to break into the 170-point range.

Another notable data point is the persistence of older flagships such as Huawei Pura 70 Ultra within the top 10. This suggests that foundational sensor architecture can sustain competitiveness across product cycles, even as AI tuning evolves.

For performance-focused buyers, the ranking reveals a clear pattern: the leaders are no longer experimenting—they are converging. The 2026 camera race is being won by devices that push physical photon capture limits first, and computational refinement second. DxOMark’s data does not merely rank phones; it reflects a hardware-first renaissance in mobile imaging.

Supply Chain Shifts: OmniVision, Sony, and the Diversification of 1-Inch Sensors

The battle over 1-inch smartphone sensors is no longer just about image quality. It is increasingly about supply chain power, cost control, and long-term strategic independence. In 2026, the dominance once enjoyed by a single supplier is visibly eroding.

For years, Sony Semiconductor Solutions effectively defined the high-end mobile sensor category. Its stacked CMOS designs and Exmor branding became synonymous with premium imaging. However, as Android Authority and GSMArena report, competitors such as OmniVision and China-based SmartSens have accelerated their push into the 1-inch segment.

The adoption of OmniVision’s 1-inch sensor in Xiaomi 17 Ultra marks a structural shift in procurement strategy, not just a technical milestone. By integrating the Light Hunter 1050L with LOFIC HDR, Xiaomi demonstrated that flagship-grade dynamic range is no longer tied exclusively to Sony supply.

| Vendor | 1-Inch Strategy (2026) | Notable Adoption |
|---|---|---|
| Sony | Stacked CMOS, Exmor T lineage, upcoming LOFIC variants | Premium global flagships |
| OmniVision | LOFIC-enabled 1-inch (OV50X class) | Xiaomi 17 Ultra |
| SmartSens | Emerging large-format mobile sensors | Selective Chinese OEM projects |

According to DXOMARK’s 2026 rankings, most top-performing devices now use sensors at or above the 1/1.3-inch class. Larger dies consume more wafer area per sensor and demand more advanced packaging and tighter yield control. As volumes rise, OEMs are reluctant to depend on a single fabrication ecosystem.

OmniVision’s re-entry into the ultra-premium tier is particularly significant. The company previously competed aggressively in mid-range segments, but LOFIC integration and expanded full well capacity reposition it as a credible alternative in the highest performance bracket. Reports indicate that the Light Hunter implementation achieves multiple-fold FWC expansion over prior generations, directly addressing dynamic range constraints.

Diversification limits exposure to a single supplier’s pricing and strengthens smartphone brands’ negotiating leverage. When only one supplier can deliver a 1-inch stacked sensor at scale, pricing power naturally concentrates. The moment two or three viable suppliers exist, long-term contracts, co-development agreements, and volume guarantees become far more balanced.

Sony is not retreating. Public statements from Sony Semiconductor Solutions highlight continued investment in stacked pixel architectures and high dynamic range solutions optimized for both stills and video. The company’s roadmap reportedly includes LOFIC-class technologies tailored for mobile, emphasizing energy efficiency during high-frame-rate capture.

Energy efficiency is a crucial differentiator. Larger sensors consume more power due to increased readout complexity and higher data throughput. Vendors that can combine wide dynamic range with lower thermal output will gain an advantage, particularly as 8K video and AI-enhanced real-time processing become mainstream.
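
Raw throughput scales directly with resolution, bit depth, and frame rate, which is why readout power climbs with 8K capture. The back-of-envelope sketch assumes an uncompressed 12-bit readout at exactly the output resolution; real sensors often read extra rows and columns, use other bit depths, or bin on-chip, so treat the figures as illustrative.

```python
def raw_readout_gbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed sensor-to-ISP data rate in gigabits per second."""
    return width * height * bits_per_pixel * fps / 1e9


# Assumed 12-bit raw readout at the output resolution, 30 frames per second.
for name, (w, h) in {"4K UHD": (3840, 2160), "8K UHD": (7680, 4320)}.items():
    print(f"{name} @30fps: {raw_readout_gbps(w, h, 12, 30):.2f} Gbit/s")
```

Under these assumptions 4K30 needs about 3 Gbit/s and 8K30 about 12 Gbit/s, a fourfold jump that the sensor interface and ISP must absorb, largely as heat.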

Another layer of competition lies in vertical integration. Apple’s reported prototype LOFIC sensor targeting up to 20 stops of dynamic range signals a longer-term ambition to reduce dependency on external suppliers. Even if mass production remains years away, the strategic message is clear: sensor technology is becoming core intellectual property rather than a replaceable component.

This shift transforms image sensors from commodity hardware into strategic assets. As geopolitical tensions and semiconductor export controls continue to shape global trade, diversified sourcing also mitigates political and logistical risks. Chinese OEMs, in particular, have strong incentives to cultivate domestic or non-Japanese alternatives.

From a manufacturing standpoint, producing 1-inch sensors at smartphone volumes is non-trivial. Yield rates decline as die size increases, and advanced stacked architectures require complex wafer bonding processes. Any supplier capable of delivering consistent yield at scale gains immediate credibility with global OEMs.
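
The yield penalty of large dies can be sketched with two textbook approximations: the gross dies-per-wafer formula and a Poisson defect model, Y = exp(-A·D0). The die areas (image area plus periphery) and the defect density below are hypothetical, and the model assumes a defect in any bonded layer of a stacked sensor scraps the die. Actual fab yields are proprietary.

```python
import math

WAFER_DIAMETER_MM = 300.0


def dies_per_wafer(die_area_mm2: float, wafer_d_mm: float = WAFER_DIAMETER_MM) -> int:
    """Classic gross-die approximation: wafer area / die area minus an edge-loss term."""
    gross = (math.pi * (wafer_d_mm / 2) ** 2 / die_area_mm2
             - math.pi * wafer_d_mm / math.sqrt(2 * die_area_mm2))
    return max(0, int(gross))


def poisson_yield(die_area_mm2: float, defects_per_cm2: float, layers: int = 1) -> float:
    """Poisson yield exp(-A*D0) per layer; every bonded layer must be defect-free."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2) ** layers


# Hypothetical die areas and defect density for a two-layer stacked sensor.
for label, area in (("1/1.3-inch class", 93.5), ("1-inch class", 165.0)):
    gross = dies_per_wafer(area)
    y = poisson_yield(area, defects_per_cm2=0.1, layers=2)
    print(f"{label}: {gross} gross dies, yield {y:.1%}, ~{gross * y:.0f} good dies")
```

Because larger dies lose out on both counts (fewer candidates per wafer and a higher chance each one catches a defect), the number of good 1-inch dies per wafer falls faster than the area ratio alone would suggest.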

The broader implication for enthusiasts is subtle but important. More suppliers competing in the 1-inch category means faster iteration cycles, aggressive innovation in HDR circuitry, and potentially lower costs over time. What was once reserved for ultra-premium “Ultra” models could gradually filter into more accessible Pro-tier devices.

In 2026, supply chain diversification is not merely a background industry story. It is actively reshaping the pace of imaging innovation, the balance of power between sensor makers and smartphone brands, and the economic feasibility of bringing cinema-grade dynamic range to mass-market devices.

The Rise of In-House Sensor Development and ISP Co-Design

As sensor hardware approaches physical limits, leading smartphone makers are shifting their competitive focus inward. Instead of relying solely on off-the-shelf components, companies are accelerating in-house sensor development tightly integrated with their own ISP and AI pipelines. This co-design strategy is rapidly becoming the defining battlefield of 2026.

The logic is simple but powerful. When a company controls both the silicon that captures photons and the processor that interprets them, it can optimize the entire imaging chain at the pixel level. According to industry coverage by GSMArena and RedShark News, Apple, Samsung, and Google are all deepening investments in custom sensor architectures aligned with their proprietary image processing engines.

Apple provides one of the clearest examples. Reports indicate that the company is developing its own LOFIC-based sensor prototype targeting up to 20 stops of dynamic range. Rather than simply matching Sony’s Exmor lineup, an in-house sensor would let Apple co-design capture hardware with its own silicon, such as the A19 Pro chip’s Neural Engine, so that real-time segmentation, tone mapping, and highlight recovery are tuned directly to the sensor’s charge behavior.

This vertical integration reduces dependency on external vendors and allows Apple to tailor pixel circuitry, readout speed, and noise characteristics to its computational photography pipeline. Wikipedia and Apple’s technical documentation note that the A19 Pro emphasizes secure, high-speed image processing, suggesting that future custom sensors will likely align with these hardware-level capabilities.

Samsung is taking a parallel but distinct approach. Through ISOCELL development, it already controls both sensor fabrication and image processing in Galaxy devices. TechInsights reports Samsung achieving a 0.5μm pixel milestone, demonstrating its willingness to push miniaturization while relying on advanced ISP algorithms to compensate for reduced photon capture.

Rather than enlarging sensors indefinitely, Samsung appears to prioritize computational enhancement tightly tuned to its Exynos and Snapdragon ISP pipelines. This approach reflects a belief that AI-driven remosaicing and multi-frame synthesis can offset hardware constraints when sensor and processor are engineered together.

| Company | Sensor Strategy | ISP/AI Integration Focus |
|---|---|---|
| Apple | Custom LOFIC prototype (20 stops target) | A19 Pro Neural Engine co-optimization |
| Samsung | ISOCELL in-house fabrication (0.5μm pixel) | AI remosaic + ISP tuning |
| Google | Customized sensor tuning for Pixel | Tensor ISP + Video Boost AI pipeline |

Google, while not a sensor manufacturer in the traditional sense, exemplifies another dimension of co-design. Pixel devices increasingly rely on customized sensor tuning aligned with the Tensor chipset’s ISP. DXOMARK rankings show Google maintaining competitive imaging performance despite not always using the largest sensors, underscoring the effectiveness of hardware–software synergy.

What makes in-house development so strategically important in 2026 is the emergence of technologies like LOFIC. As reported by GSMArena, LOFIC adoption is expanding rapidly, but the real advantage lies in how each brand implements it. The capacitor structure, overflow handling, and readout sequencing must be harmonized with HDR algorithms to avoid artifacts and latency.

If a manufacturer depends entirely on a third-party sensor, optimization windows are narrower. In contrast, in-house teams can redesign pixel architecture to match ISP noise reduction curves, tone mapping thresholds, and AI-based subject recognition. This level of synchronization directly affects highlight roll-off, shadow detail retention, and motion artifact suppression.

Another benefit of co-design is power efficiency. Sony Semiconductor Solutions has emphasized maintaining high dynamic range during zoom with controlled power consumption. When sensor readout patterns are engineered alongside ISP scheduling, energy use during 4K or 8K video capture can be significantly stabilized. This matters as thermal constraints increasingly limit sustained performance.

From a market perspective, this shift signals a structural transformation. The 1-inch sensor race may grab headlines, but the deeper competition now unfolds at the semiconductor architecture level. According to industry reporting in early 2026, multiple brands are exploring pixel-level customization rather than merely scaling physical dimensions.

For gadget enthusiasts, this means spec sheets will become less transparent indicators of real-world performance. Two phones with identical sensor sizes may produce radically different results depending on how deeply the ISP, AI engine, and sensor circuitry are intertwined. The era of modular component comparison is giving way to vertically integrated imaging ecosystems.

Ultimately, in-house sensor development and ISP co-design represent the next frontier of differentiation. As physical enlargement reaches practical limits, companies that master end-to-end silicon control will define the visual signature of the smartphone camera in the years ahead.

From Automotive to Smartphones: How LOFIC and HDR Tech Cross Industries

LOFIC and advanced HDR technologies did not originate in smartphones. They were first refined in automotive imaging, where cameras must reliably capture scenes that combine dark tunnels, bright headlights, and rapidly changing lighting conditions. In autonomous driving and ADAS systems, a sensor failure caused by highlight clipping or flicker can directly impact safety, which is why extremely wide dynamic range became a non‑negotiable requirement.

According to Sony Semiconductor Solutions and industry analyses cited by GSMArena, early LOFIC implementations were designed to suppress LED flicker and preserve detail under high-contrast conditions. By expanding the Full Well Capacity at the pixel level, these sensors achieved stable HDR in a single exposure—something multi-frame smartphone HDR historically struggled with when subjects were moving.
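
Why single-exposure HDR helps with flicker can be sketched with a simple timing model. LEDs are commonly driven by pulse-width modulation, so a very short exposure, such as the short frame in a multi-frame HDR burst, can land entirely in the off-phase. The sketch assumes a hypothetical 100 Hz, 10% duty-cycle LED and a uniformly random exposure start; the extra charge headroom LOFIC provides is what lets a sensor use longer exposures without clipping the LED itself. `miss_probability` is an illustrative helper.

```python
def miss_probability(exposure_s: float, pwm_hz: float, duty: float) -> float:
    """Chance that one exposure falls entirely inside an LED's PWM off-phase.

    Assumes the exposure start is uniformly random relative to the PWM cycle.
    """
    period = 1.0 / pwm_hz
    off_time = period * (1.0 - duty)
    # The window misses every pulse only if it starts early enough in the off-phase
    # to finish before the next on-phase begins.
    return max(0.0, off_time - exposure_s) / period


# Hypothetical 100 Hz PWM LED sign at 10% duty cycle.
for exposure in (1 / 8000, 1 / 1000, 1 / 100):
    p = miss_probability(exposure, pwm_hz=100, duty=0.1)
    print(f"1/{round(1 / exposure)} s exposure: {p:.1%} chance of missing the pulse")
```

In this model, an exposure at least as long as the off-phase can never miss a pulse, which is why high full well capacity and flicker mitigation go hand in hand.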

The migration of LOFIC from cars to phones represents a rare case where safety-critical imaging standards reshape consumer photography.

The technical demands of each industry differ, but the core objective remains identical: maximize usable dynamic range without introducing artifacts. Automotive systems prioritize reliability and flicker suppression, while smartphones emphasize texture retention, natural color gradation, and real-time processing efficiency.

| Industry | Primary HDR Challenge | LOFIC Benefit |
|---|---|---|
| Automotive | LED flicker, extreme contrast, motion | Stable single-exposure HDR, flicker mitigation |
| Smartphone | Ghosting, highlight clipping, low-light noise | Higher FWC, reduced artifacts, improved texture |

RedShark News reports that next-generation mobile sensors are targeting 17 to 20 stops of dynamic range, approaching cinema-grade territory. This ambition mirrors automotive requirements, where detecting objects in shadow while preserving bright signage is critical. The smartphone industry is effectively inheriting performance benchmarks once reserved for vehicles and professional cameras.
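
Engineering dynamic range in stops is commonly computed as the base-2 logarithm of the ratio between the largest signal a pixel can hold (full well capacity) and its noise floor (read noise). The sketch uses hypothetical values of 6,000 e- full well and 1.5 e- read noise, not figures from any announced sensor, to show why 17 to 20 stops call for the full-well expansion LOFIC provides.

```python
import math


def dynamic_range_stops(full_well_e: float, read_noise_e: float) -> float:
    """Engineering dynamic range: log2 of max signal over noise floor (in electrons)."""
    return math.log2(full_well_e / read_noise_e)


def full_well_needed(stops: float, read_noise_e: float) -> float:
    """Full well (electrons) required to reach a target dynamic range at a given read noise."""
    return read_noise_e * 2 ** stops


# Hypothetical small-pixel values: 6,000 e- full well, 1.5 e- read noise.
print(f"{dynamic_range_stops(6000, 1.5):.1f} stops")
for target in (17, 20):
    print(f"{target} stops needs ~{full_well_needed(target, 1.5):,.0f} e- at 1.5 e- read noise")
```

At this read noise, about 12 stops come from the photodiode alone, while 17 stops would need roughly 197,000 e- of capacity; each additional stop doubles the required charge headroom.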

Another important crossover lies in power efficiency. Automotive cameras must operate continuously with thermal stability. When Sony and other manufacturers adapt these architectures for mobile use, the result is not only higher HDR performance but also optimized energy consumption during video recording—an increasingly important metric for flagship smartphones.

This cross-industry technology flow signals a broader paradigm shift: innovation no longer moves only from consumer electronics upward. Instead, safety-driven automotive R&D feeds directly into the pocket devices used for night photography, backlit portraits, and high-contrast urban scenes. For gadget enthusiasts, understanding this lineage adds a new layer of appreciation to what appears, on the surface, to be “just” better smartphone HDR.

What to Look for in 2026: Choosing a Smartphone by Sensor Technology, Not Just Megapixels

In 2026, choosing a smartphone camera by megapixels alone is no longer enough. The real difference in image quality now lies in sensor architecture, full well capacity, and dynamic range design. If you truly care about photography, you need to look beneath the headline numbers.

According to DXOMARK’s sensor testing protocol, signal-to-noise ratio and dynamic range are strongly influenced by how many photons each pixel can physically capture. That depends not just on resolution, but on sensor size, pixel pitch, and charge management technology.

In 2026, sensor size and charge handling matter more than raw megapixel count.

Here is how major 2026 flagship approaches differ at the sensor level:

| Device | Main Sensor Size | Key Sensor Tech |
|---|---|---|
| Xiaomi 17 Ultra | 1.0-inch | LOFIC, expanded FWC |
| iPhone 17 Pro Max | 1/1.28-inch | Stacked transistor (Exmor T) |
| Galaxy S26 Ultra | 1/1.3-inch | 200MP ISOCELL HP series |

A 1-inch sensor physically captures more light, which improves low-light clarity and tonal depth. However, a larger sensor needs a longer focal length for the same field of view, which increases module thickness. This is why some brands adopt stacked structures like Sony’s Exmor T, separating photodiodes and transistors into layers to improve light efficiency without dramatically increasing footprint.

The most important keyword in 2026 is LOFIC. Originally developed for automotive sensors, LOFIC adds lateral overflow capacitors to temporarily store excess charge when highlights would otherwise clip. Reports from RedShark News note that next-generation implementations target up to 17–20 stops of dynamic range, approaching cinema-grade territory.
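
A simplified sketch of how a LOFIC pixel’s two readouts can be merged into one linear HDR value. It assumes a 12-bit high-conversion-gain (HCG) read of the photodiode, a low-conversion-gain (LCG) read of photodiode plus overflow capacitor, a hypothetical 16x gain ratio, and a linear cross-fade near saturation. Commercial sensors implement this merge in proprietary on-chip or ISP logic; `merge_lofic` is an illustrative name.

```python
def merge_lofic(hcg_dn: float, lcg_dn: float, gain_ratio: float,
                hcg_max_dn: float = 4095.0, knee: float = 0.9) -> float:
    """Merge a high-gain and a low-gain (overflow) read into one value on the HCG scale.

    Below the knee the low-noise HCG sample is used; once HCG saturates, the LCG sample
    is scaled up by gain_ratio; in between, the two are cross-faded to avoid a seam.
    """
    lcg_scaled = lcg_dn * gain_ratio   # bring the low-gain read onto the high-gain scale
    start = knee * hcg_max_dn          # begin blending shortly before HCG clips
    if hcg_dn <= start:
        return hcg_dn                  # shadows and midtones: low-noise read only
    if hcg_dn >= hcg_max_dn:
        return lcg_scaled              # HCG clipped: only the overflow path is valid
    w = (hcg_dn - start) / (hcg_max_dn - start)  # 0 at the knee, 1 at saturation
    return (1.0 - w) * hcg_dn + w * lcg_scaled


# Hypothetical 12-bit reads with a 16x conversion-gain ratio.
print(merge_lofic(100, 6.25, 16))   # prints 100 (shadow passes through)
print(merge_lofic(4095, 2000, 16))  # prints 32000.0 (highlight beyond HCG range)
```

With a 16x ratio, the merged signal extends four stops (log2 16) above the HCG clipping point while shadows keep the low-noise high-gain read, all from a single exposure.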

This changes how you should evaluate HDR performance. Traditional multi-frame HDR can introduce ghosting in moving subjects. A single-exposure LOFIC design reduces motion artifacts because highlight and shadow data are captured simultaneously at the pixel level.

Meanwhile, ultra-high-resolution sensors such as Samsung’s 200MP designs rely on pixel binning and computational remosaic processing. TechInsights highlights Samsung’s achievement of 0.5μm-class pixels, enabling extreme resolution in compact formats. The trade-off is smaller individual photodiodes, which depend heavily on AI processing to compensate for reduced native light capture.

So what should you actually check when comparing phones?

First, confirm the sensor’s physical size. A jump from 1/1.56-inch to 1/1.3-inch or 1-inch is not marketing fluff; it directly impacts photon collection. Second, look for advanced charge management like LOFIC or stacked transistor architectures. Third, consider whether the brand emphasizes computational enhancement or optical fundamentals.
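
The size check can be turned into stops with a short calculation. The sketch assumes the common convention that a "1-inch type" sensor has a diagonal of roughly 16 mm, that smaller formats scale proportionally, and that both sensors share an aspect ratio; it compares total light at equal f-number, shutter speed, and field of view, which real phone lenses do not always match.

```python
import math

MM_PER_INCH_TYPE = 16.0  # convention: a "1-inch type" sensor has a diagonal of roughly 16 mm

FORMATS = {'1/1.56"': 1 / 1.56, '1/1.3"': 1 / 1.3, '1"': 1.0}


def diagonal_mm(optical_format_inch: float) -> float:
    """Approximate sensor diagonal from an optical-format label (e.g. 1/1.3 -> ~12.3 mm)."""
    return MM_PER_INCH_TYPE * optical_format_inch


def light_gain_stops(small_format: float, large_format: float) -> float:
    """Extra total light, in stops, at equal f-number, shutter speed, and field of view.

    Area scales with the diagonal squared when both sensors share an aspect ratio.
    """
    area_ratio = (diagonal_mm(large_format) / diagonal_mm(small_format)) ** 2
    return math.log2(area_ratio)


for small, large in (('1/1.56"', '1/1.3"'), ('1/1.3"', '1"'), ('1/1.56"', '1"')):
    gain = light_gain_stops(FORMATS[small], FORMATS[large])
    print(f"{small} ({diagonal_mm(FORMATS[small]):.1f} mm) -> {large} "
          f"({diagonal_mm(FORMATS[large]):.1f} mm): +{gain:.2f} stops")
```

Under these assumptions, stepping from 1/1.56-inch to 1-inch gains about 1.3 stops, while 1/1.3-inch to 1-inch gains roughly three-quarters of a stop.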

Megapixels tell you how much detail can be recorded. Sensor technology tells you how much light and tonal nuance can be preserved.

In 2026, the smartest buyers read spec sheets like engineers, not like billboard slogans. When you evaluate smartphones through the lens of sensor physics and dynamic range design, you choose a device that performs consistently in real-world scenes—not just in bright showroom lighting.

References

- Android Authority: Why 2026 smartphones won’t have this game-changing camera tech

- DXOMARK: Smartphone Ranking

- GSMArena: The image sensor competition is on: LOFIC sensors will see wider adoption starting in 2026

- RedShark News: New smartphone image sensors from Apple and Sony promise 20 and 17 stops

- NotebookCheck: Xiaomi 17 Ultra review – Best overall package in the smartphone sector meets Leica APO zoom

- Apple: iPhone 17 Pro and 17 Pro Max – Technical Specifications

- Sony Semiconductor Solutions: Sony Semiconductor Solutions to Release Advanced CMOS Sensor for Mobile Applications

- TechInsights: Samsung Achieves 0.5μm Pixel Milestone for Image Sensors