Have you ever captured a stunning night skyline, only to find green ghosts and hazy streaks ruining your shot? In 2026, lens flare is no longer a minor annoyance but one of the biggest technical battlegrounds in smartphone photography.

As sensors grow larger and apertures become brighter, internal reflections inevitably increase. Flagship devices like the Samsung Galaxy S26 Ultra, iPhone 17 Pro, and Sony Xperia 1 VII are now competing not just on megapixels, but on how precisely they control light at the physical and computational levels.

In this article, you will discover how nano-coatings, Zeiss T* optics, moth-eye nanostructures, metalenses, and diffusion-based AI models are transforming mobile imaging. If you care about night photography, cinematic video, or simply the cleanest possible image quality, this deep dive will help you understand what truly matters in 2026.

- Why Lens Flare Became the Defining Challenge of Smartphone Cameras in 2026

- Samsung Galaxy S26 Ultra: Nano-Coating, Spectral Control, and the Black Matrix Structure

- iPhone 17 Pro: Atomic Layer Coating, 200mm Zoom, and the Physics of Multi-Lens Reflection

- Sony Xperia 1 VII: Zeiss T* Coating, Larger Ultra-Wide Sensors, and Optical Simulation

- The Physics of Reflection: Why More Aperture and More Elements Increase Flare Risk

- Moth-Eye Nanostructures: Reducing Surface Reflection from 4.4% to 0.23%

- Metalens Technology and Samsung’s Polar ID: Flattening Optics to Minimize Internal Reflections

- Diffusion Models for Single Image Flare Removal: LightsOut and Difflare Explained

- Night Photography Breakthroughs: Optical Center Symmetry, MFDNet, and the FlareX Dataset

- Third-Party Optical Filters and Ultra-Low Reflection Coatings in the Japanese Market

- Expert Insights: The Physical Limits of Curved Lenses and the Risks of Over-Aggressive AI

- How to Choose a 2026 Flagship for Cleaner Night Photos and Professional Video

- References

Why Lens Flare Became the Defining Challenge of Smartphone Cameras in 2026

In 2026, smartphone camera innovation is no longer defined by megapixels or extreme zoom ratios. It is defined by how well manufacturers control light. As sensors grow larger and lenses become brighter within ultra-thin bodies, unwanted internal reflections—lens flare and ghosting—have emerged as the most visible limitation of mobile imaging.

The paradox is clear: the more light smartphones capture, the harder it becomes to keep that light pure. Wider apertures improve low-light performance, yet they also increase the probability of internal reflections between lens elements, cover glass, and sensor surfaces.

According to long-established optical principles, every air-to-glass boundary reflects a portion of incoming light. When lens stacks become more complex, as in periscope-style telephoto systems reaching up to 200mm equivalent, those reflective interfaces multiply. Because a ghost can form whenever light bounces between any two surfaces, the number of possible two-bounce ghost paths grows roughly with the square of the surface count rather than linearly.

| Trend (2026) | Benefit | Flare Impact |
|---|---|---|
| Larger sensors | Higher dynamic range | Greater sensitivity to stray light |
| Brighter apertures | Improved night shots | Increased internal scattering |
| Long periscope zoom | True optical reach | More reflective surfaces |

Community discussions around recent flagship devices show that even with advanced coatings, users still report green dot ghosts and contrast loss under strong point light sources. These are not isolated defects but manifestations of physical constraints inside millimeter-thin modules.

Experts frequently emphasize that curved lenses inherently bend and reflect light. As highlighted in professional discussions cited by Apple user communities and optical engineers, eliminating flare entirely would require removing refraction itself—an impossibility under current optical physics.

At the same time, user expectations have shifted dramatically. Social platforms prioritize night cityscapes, concert footage, and neon-lit portraits. In such environments, flare is not a rare edge case but a daily shooting condition. What was once a niche optical artifact has become a mainstream quality benchmark.

Manufacturers now compete on nano-coatings, spectral tuning, structural light shielding, and computational correction because incremental sensor gains no longer impress advanced users. The differentiator is optical integrity under extreme lighting.

This shift marks a deeper transformation in mobile photography. The industry has moved from capturing more light to mastering light behavior itself. And in that transition, lens flare has become the defining challenge that separates engineering ambition from optical excellence.

Samsung Galaxy S26 Ultra: Nano-Coating, Spectral Control, and the Black Matrix Structure

Samsung approaches the Galaxy S26 Ultra with a clear premise: when aperture brightness increases, optical discipline must increase as well.

The extremely bright main lens captures more light for low‑light scenes, but as Android Police notes, brighter optics inherently raise the risk of internal reflections and flare.

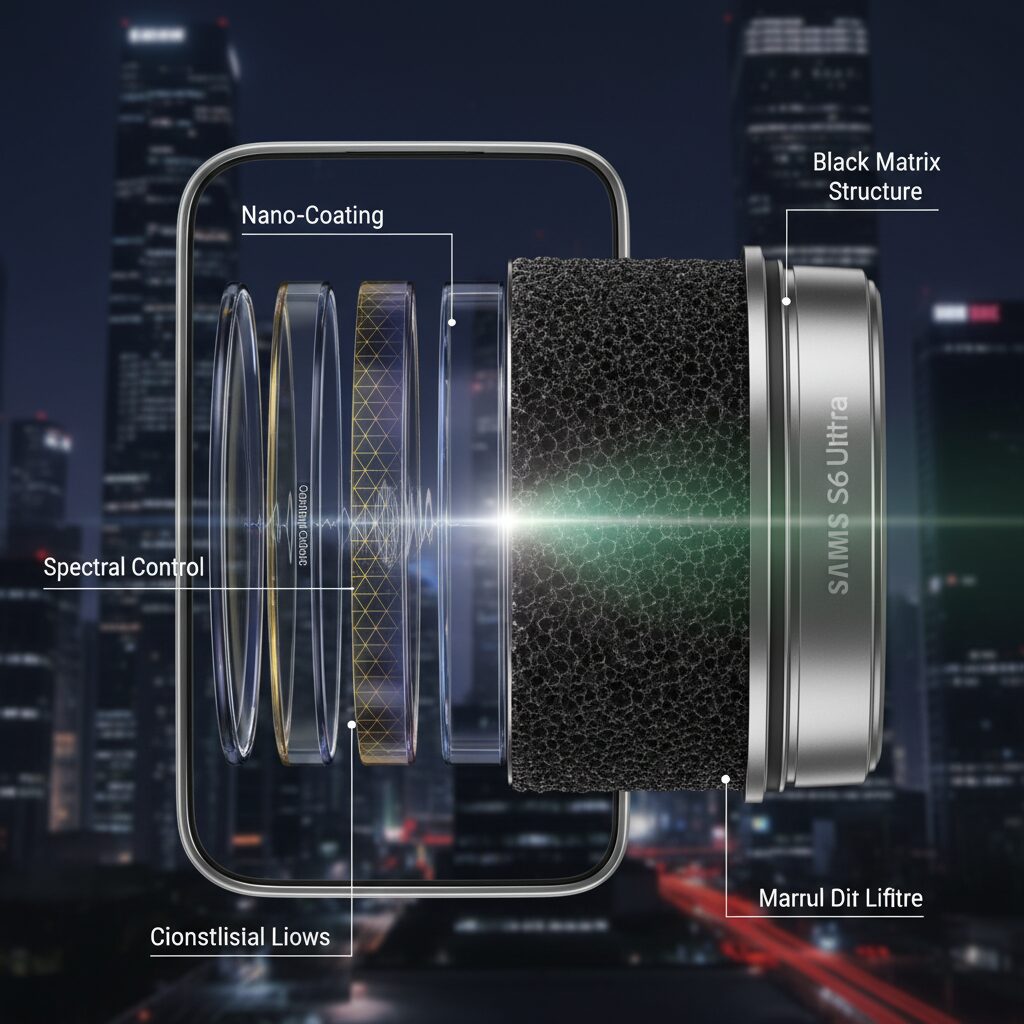

To counter this, Samsung integrates nano‑coating, spectral transmission tuning, and a newly refined Black Matrix structure as a single optical system rather than isolated fixes.

| Element | Technical Role | Expected Benefit |
|---|---|---|
| Improved Nano-Coating | Applied to all lens elements | Reduced flare and ghosting |
| Spectral Transmission Control | Selective wavelength adjustment | More accurate skin tones |
| Black Matrix Structure | Enhanced internal light shielding | Suppressed edge reflections |

The improved nano‑particle coating goes beyond conventional anti‑reflection layers. According to Android Authority, Samsung specifically targeted the long‑standing criticism of yellowish skin tones in previous Galaxy devices.

This suggests that the coating is engineered not only to reduce surface reflection, but also to fine‑tune spectral transmission before light even reaches the sensor.

By physically shaping which wavelengths pass through most efficiently, Samsung reduces the burden on computational color correction.

From an optical physics standpoint, flare is amplified when high‑intensity light reflects between lens elements and barrel surfaces.

The Black Matrix structure addresses this at the mechanical level by refining edge darkening and internal shielding, minimizing stray reflections along the lens periphery.

This is particularly important in night scenes, where point light sources such as street lamps easily trigger ghost artifacts.

What makes this integration notable is its systems‑level design philosophy. Rather than relying solely on post‑processing, Samsung suppresses flare at three physical stages: surface reflection, spectral imbalance, and internal scattering.

Industry commentary referenced by Telegrafi indicates that the new lens stack was redesigned specifically to combat glare in bright backlit conditions.

The result is not just fewer artifacts, but improved contrast stability when shooting directly into strong light.

For enthusiasts who care about micro‑contrast and tonal realism, this matters. When flare is reduced physically, shadow detail remains intact and highlight bloom is less destructive.

In practical terms, this means cleaner night skylines, more natural portraits under mixed lighting, and stronger color consistency in RAW workflows.

The Galaxy S26 Ultra demonstrates that nano‑scale materials engineering and precise internal light control are becoming as critical as sensor size in defining flagship image quality.

iPhone 17 Pro: Atomic Layer Coating, 200mm Zoom, and the Physics of Multi-Lens Reflection

The iPhone 17 Pro represents Apple’s most ambitious attempt yet to reconcile advanced optics with the harsh realities of physics. As the camera system expands to a 48MP triple-lens array and up to a 200mm-equivalent telephoto on the Pro Max, each additional lens element introduces new reflective interfaces. According to discussions in Apple Support Communities, users still observe green dot ghosts under strong point light sources, highlighting how multi-lens complexity inherently raises flare risk.

At the core of Apple’s countermeasure is the evolution of ALC, or Atomic Layer Coating. Unlike conventional vapor deposition, atomic layer processes build a film one atomic layer at a time, which gives sub-nanometer control over thickness and uniform coverage on curved surfaces. This precision matters because reflections arise when light encounters abrupt refractive index changes. By stacking ultra-thin layers with carefully tuned optical thickness, Apple reduces surface reflectance across visible wavelengths while maintaining transmission efficiency for computational photography pipelines.
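To make the idea of "tuned optical thickness" concrete, here is a minimal sketch of the textbook single-layer quarter-wave coating at normal incidence. It is not Apple's actual multilayer stack: the refractive indices (magnesium fluoride at 1.38, glass at 1.52) and the 550 nm design wavelength are illustrative assumptions, and the function names are hypothetical.

```python
def quarter_wave_thickness_nm(wavelength_nm: float, n_film: float) -> float:
    """Physical film thickness whose optical thickness is a quarter wavelength."""
    return wavelength_nm / (4.0 * n_film)


def bare_reflectance(n_ambient: float, n_substrate: float) -> float:
    """Fresnel reflectance of an uncoated surface at normal incidence."""
    return ((n_ambient - n_substrate) / (n_ambient + n_substrate)) ** 2


def quarter_wave_reflectance(n_ambient: float, n_film: float, n_substrate: float) -> float:
    """Reflectance at the design wavelength with one quarter-wave layer."""
    numerator = n_ambient * n_substrate - n_film ** 2
    denominator = n_ambient * n_substrate + n_film ** 2
    return (numerator / denominator) ** 2


# Assumed, illustrative values (not taken from any vendor specification).
N_AIR, N_FILM, N_GLASS = 1.0, 1.38, 1.52

thickness = quarter_wave_thickness_nm(550.0, N_FILM)          # about 99.6 nm
r_bare = bare_reflectance(N_AIR, N_GLASS)                     # about 4.3%
r_coated = quarter_wave_reflectance(N_AIR, N_FILM, N_GLASS)   # about 1.3%
```

A single layer only cancels reflection well near one wavelength, which is why real coatings stack several layers; the roughly 100 nm film thickness also shows why nanometer-level thickness errors matter.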

| Component | Technical Approach | Optical Objective |
|---|---|---|
| Outer lens glass | Advanced ALC multilayer coating | Minimize surface reflection and ghosting |
| Telephoto module (200mm eq.) | Increased lens groups with dual-sided coating | Maintain contrast at long focal lengths |
| Front protection | Ceramic Shield 2 integration | Balance durability and optical clarity |

The move to a 200mm-equivalent optical system is particularly significant. Longer focal lengths require more complex lens group arrangements to correct chromatic aberration and maintain sharpness across the frame. Each air–glass boundary reflects a small percentage of incident light—often around 4% without coating, as widely cited in optical engineering literature. Even with multilayer coatings, cumulative reflections can form secondary images, perceived as ghost artifacts.

More zoom does not simply mean more reach; it means more internal surfaces where light can misbehave. Apple’s strategy is to suppress these reflections before they cascade. By coating both sides of individual elements and optimizing spectral transmission, the system aims to keep contrast intact even in high dynamic range night scenes.

MacRumors reports that Apple has also reduced external reflections on the display by up to 75% using a matte anti-reflective treatment. While this primarily affects viewing rather than capture, it signals a holistic approach to light management—controlling both incoming photons and perceived output.

Still, physics imposes limits. As optical experts often note, curved lenses inevitably refract and partially reflect light. In a stacked telephoto design, internal reflections may align along the optical axis, producing symmetrical ghosts relative to bright sources. This explains why certain flare patterns persist even with advanced coatings.

What makes the iPhone 17 Pro noteworthy is not the elimination of flare, but the engineering philosophy behind its mitigation. By combining nanometer-scale material control with increasingly complex lens geometry, Apple pushes multi-lens smartphone optics closer to their physical ceiling—while accepting that perfection in a device only millimeters thick remains an asymptotic goal rather than an absolute state.

Sony Xperia 1 VII: Zeiss T* Coating, Larger Ultra-Wide Sensors, and Optical Simulation

Sony continues its long-standing collaboration with Zeiss in the Xperia 1 VII, placing optical purity at the center of its camera philosophy. At the heart of this approach is the Zeiss T* coating, applied not only to the internal lens elements but also to the outer cover glass, minimizing reflections before they can degrade contrast.

According to coverage by FoneArena and PhoneArena, the Xperia 1 VII expands its hardware ambition further by integrating a larger ultra-wide sensor, a move that directly impacts flare behavior. A wider field of view inevitably invites more stray light from oblique angles, making coating precision and optical simulation more critical than ever.

| Element | Xperia 1 VII Implementation | Impact on Flare Control |
|---|---|---|
| Lens Coating | Zeiss T* on all optics | Reduces internal reflections and ghosting |
| Ultra-wide Sensor | 48MP, approx. 2.1× larger area than predecessor | Improves light capture while demanding stricter reflection control |
| Optical Simulation | Zeiss-assisted curvature and thickness optimization | Maintains edge contrast under strong backlight |

The Zeiss T* coating is not merely a marketing label. In optical engineering, multilayer coatings are tuned to specific wavelength ranges to suppress reflectance at each air–glass boundary. By reducing residual reflectance at these interfaces, the Xperia 1 VII achieves cleaner blacks and higher micro-contrast, particularly visible in night scenes with point light sources.

The larger 48MP ultra-wide sensor, reported to offer roughly 2.1 times the light-receiving area of the previous model, changes the equation further. A bigger sensor gathers more photons, which improves dynamic range and signal-to-noise ratio. However, it also magnifies imperfections caused by stray light. Without rigorous flare suppression, the benefits of a larger sensor would be partially offset by veiling glare and contrast washout.
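As a rough back-of-the-envelope check on what "2.1 times the area" buys, the sketch below converts the area ratio into photographic stops and a shot-noise-limited SNR gain. It assumes the same f-number, exposure time, and scene framing on both sensors, and it ignores read noise and pixel-level differences; the function names are hypothetical.

```python
import math


def light_gain_stops(area_ratio: float) -> float:
    """Extra total light collected, expressed in stops (doublings)."""
    return math.log2(area_ratio)


def shot_noise_snr_gain(area_ratio: float) -> float:
    """SNR improvement when photon shot noise dominates (SNR scales with sqrt of photons)."""
    return math.sqrt(area_ratio)


AREA_RATIO = 2.1  # figure reported for the Xperia 1 VII ultra-wide sensor

stops = light_gain_stops(AREA_RATIO)        # about 1.07 stops
snr_gain = shot_noise_snr_gain(AREA_RATIO)  # about 1.45x
```

The same arithmetic applies to stray light: a sensor that collects about twice the scene light also collects about twice the veiling glare, so the flare-to-signal ratio does not improve unless the optics reduce it.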

To address this, Sony leverages Zeiss optical simulation tools to optimize lens curvature and coating thickness specifically for high-incidence light. Ultra-wide lenses are particularly vulnerable to diagonal rays entering from outside the frame. Through simulation-based refinement, peripheral contrast drop is reduced before the image even reaches the sensor.

In addition, the Xperia 1 VII integrates environmental light awareness into its imaging pipeline. Reports indicate the presence of an auxiliary illuminance sensor that feeds real-time data to the image processor. When strong backlight or complex lighting is detected, exposure and dynamic range parameters are adjusted proactively, complementing the physical suppression achieved by the T* coating.

What distinguishes Sony’s approach is its refusal to rely solely on computational fixes. While many competitors prioritize post-processing, the Xperia 1 VII treats flare mitigation as an optical design challenge first. For enthusiasts who value natural rendering and predictable highlight behavior, this hardware-centric philosophy offers a distinct advantage.

In practical terms, this means cleaner streetlights in night photography, reduced green or purple ghost artifacts, and more stable contrast in ultra-wide landscape shots. By combining Zeiss T* heritage with modern optical simulation and a substantially larger ultra-wide sensor, the Xperia 1 VII pushes mobile imaging closer to dedicated camera discipline—where light is shaped physically before it is ever interpreted digitally.

The Physics of Reflection: Why More Aperture and More Elements Increase Flare Risk

Lens flare is not a manufacturing defect but a direct consequence of optical physics. Whenever light passes from air into glass, a portion of it is inevitably reflected. According to fundamental optics principles described in standard physics literature, this reflection occurs at every boundary where refractive index changes. In a modern smartphone lens stack, that boundary can appear dozens of times before light even reaches the sensor.

The wider the aperture and the more elements in the stack, the more opportunities light has to misbehave. This is the structural reason flagship cameras in 2026 face increasing flare risk despite advanced coatings.

Two design trends amplify this challenge: larger apertures for low‑light performance and more lens elements for complex zoom systems. Both improve image quality in one dimension while complicating internal light paths in another.

| Design Factor | Optical Benefit | Flare Risk Mechanism |
|---|---|---|
| Large aperture (low f-number) | More light, better night performance | Wider incident angles, increased internal reflections |
| More lens elements | Improved correction, longer zoom reach | More air-glass interfaces, cumulative reflection |

A wide aperture allows rays entering from extreme angles to pass through the optical system. These oblique rays are harder to control and more likely to bounce between curved surfaces, forming ghost artifacts. As smartphone sensors grow larger and lenses become brighter, this geometric complexity increases.

Adding elements multiplies the number of reflective interfaces. Even if each surface reflects only a small percentage of light, those reflections compound. User reports in Apple Support Communities and Reddit discussions about the iPhone 17 Pro’s green ghosting illustrate how difficult it is to fully suppress such cumulative effects in multi-element zoom systems.
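The compounding effect can be sketched with a few lines of arithmetic. This is a simplified model, assuming every surface is an air-glass interface with the same reflectance, normal incidence, and no absorption; the surface counts and the function names are illustrative rather than taken from any specific phone.

```python
from math import comb


def ghost_pair_count(n_surfaces: int) -> int:
    """Number of distinct surface pairs that can produce a two-bounce ghost."""
    return comb(n_surfaces, 2)


def two_bounce_ghost_fraction(reflectance: float) -> float:
    """Fraction of incident light in one two-bounce ghost (transmission losses ignored)."""
    return reflectance ** 2


def stack_transmission(reflectance: float, n_surfaces: int) -> float:
    """Light surviving a straight pass through every surface."""
    return (1.0 - reflectance) ** n_surfaces


# Doubling the element count more than quadruples the possible ghost paths.
pairs_5_elements = ghost_pair_count(10)  # 5 elements, 10 surfaces -> 45 pairs
pairs_7_elements = ghost_pair_count(14)  # 7 elements, 14 surfaces -> 91 pairs

uncoated_ghost = two_bounce_ghost_fraction(0.04)    # 0.16% of the source intensity
uncoated_throughput = stack_transmission(0.04, 14)  # roughly 56% of light gets through
```

A ghost at 0.16% of the source sounds negligible, but a street lamp can be thousands of times brighter than the night scene around it, which is why even well-coated stacks show visible spots.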

Physics imposes a stubborn constraint here. As optical engineers often note, as long as curved refractive lenses are used, some degree of reflection is unavoidable. Each additional corrective element introduced to reduce distortion or extend focal length introduces another potential reflection path.

From a ray-tracing perspective, the number of stray paths grows rapidly with each added surface. A single incoming ray can spawn multiple secondary reflections before reaching the sensor. Most of these remain too faint to see, while others come to a focus near the sensor plane and form visible flare patterns or symmetrical ghost spots around bright sources.

This is why flare tends to intensify in scenes with small, high-intensity light sources such as street lamps at night. The concentrated energy increases the visibility of even minor internal reflections. Larger apertures capture more of that energy, which enhances exposure but simultaneously magnifies internal scattering artifacts.

Manufacturers counteract this with multilayer coatings, blackened lens edges, and optimized barrel geometry. Yet these are mitigation strategies, not eliminations. The underlying physics remains constant: every added surface and every widened light cone raises the probability of stray reflections.

For gadget enthusiasts evaluating 2026 flagship cameras, understanding this trade-off is essential. The push for larger apertures and more complex optical stacks delivers undeniable gains in brightness and versatility, but it also means engineers are fighting an increasingly intricate battle against the fundamental behavior of light itself.

Moth-Eye Nanostructures: Reducing Surface Reflection from 4.4% to 0.23%

Moth-eye nanostructures represent one of the most radical breakthroughs in anti-reflection engineering for smartphone cameras in 2026. Inspired by the natural surface of a moth’s eye, this technology replaces conventional thin-film coatings with a nanoscale surface texture that fundamentally changes how light interacts with glass.

Instead of relying solely on deposited layers, the lens surface itself is patterned with subwavelength protrusions smaller than visible light. According to Sharp’s technical documentation, this biomimetic structure enables an almost seamless transition of refractive index from air to glass, dramatically suppressing Fresnel reflections at the boundary.

The result is a reduction in surface reflection from approximately 4.4% to as low as 0.23%, a difference that is not incremental but transformative for high-contrast mobile imaging.

| Surface Type | Typical Reflectance | Optical Principle |
|---|---|---|
| Standard glass (uncoated) | ~4.4% | Sudden refractive index change |
| Moth-eye nanostructure | ~0.23% | Gradual refractive index gradient |

From a physics standpoint, reflections occur because light encounters an abrupt refractive index mismatch between air and lens material. The moth-eye architecture creates a graded-index layer, meaning light does not “see” a sharp boundary. As reported by TechRadar and corroborated by optoelectronics specialists at Electronic Specifier, this gradient effect enables near-zero reflectance across a broad visible spectrum rather than at a single tuned wavelength.

For smartphone cameras with increasingly bright apertures, this reduction is critical. Wide apertures gather more light but also amplify internal scattering and ghosting from each optical interface. By lowering the initial surface reflection to 0.23%, engineers effectively reduce the cascade of secondary reflections inside multi-element lens stacks.
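The size of that cascade reduction follows from the two reflectance figures quoted above. The sketch below assumes a ghost formed by two bounces off identical surfaces at normal incidence, which is a simplification of a real lens stack; the variable and function names are hypothetical.

```python
import math

R_UNCOATED = 0.044   # about 4.4% per surface, as quoted above
R_MOTH_EYE = 0.0023  # about 0.23% per surface, as quoted above


def implied_refractive_index(reflectance: float) -> float:
    """Index of a material in air whose normal-incidence Fresnel reflectance is given."""
    amplitude = math.sqrt(reflectance)
    return (1.0 + amplitude) / (1.0 - amplitude)


# A two-bounce ghost scales with reflectance squared.
single_surface_ratio = R_UNCOATED / R_MOTH_EYE  # about 19x less light per reflection
ghost_ratio = single_surface_ratio ** 2         # about 366x dimmer two-bounce ghost
ghost_stops = math.log2(ghost_ratio)            # about 8.5 stops dimmer

n_glass = implied_refractive_index(R_UNCOATED)  # about 1.53, a typical optical material
```

Because the benefit is squared for every two-bounce path, a 19-fold drop in surface reflection translates into ghosts that are hundreds of times fainter, which is often the difference between a visible green dot and none.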

In practical terms, this translates into noticeably improved microcontrast in night photography. Streetlights produce fewer halo artifacts, and fine textures remain intact even under backlit conditions. The improvement is not merely aesthetic; it preserves dynamic range by preventing stray light from veiling shadow detail.

Sharp has already commercialized moth-eye films for optical filters, expanding from industrial face shields to camera applications. These implementations combine low reflectivity with hydrophobic and anti-fog properties, creating multifunctional optical layers suitable for mass-market devices.

Beyond consumer electronics, TelAztec has demonstrated moth-eye texturing on CVD diamond surfaces for extreme environments, highlighting the structural durability of the approach. This cross-industry use suggests that moth-eye texturing is a manufacturable technique rather than a laboratory curiosity.

By structurally engineering the lens surface rather than merely coating it, moth-eye nanostructures redefine the baseline of optical purity in mobile cameras. For gadget enthusiasts who scrutinize flare performance and contrast retention, this shift from chemical coating to structural optics marks a decisive evolution in lens design.

Metalens Technology and Samsung’s Polar ID: Flattening Optics to Minimize Internal Reflections

One of the most radical approaches to minimizing internal reflections in 2026 is the shift from curved glass stacks to flat, nanostructured optics. Metalens technology, commercialized by Metalenz in partnership with Samsung, represents a structural rethink of how light is shaped inside ultra-thin devices.

Instead of relying on multiple curved elements, a metalens uses billions of nanoscale pillars—often called meta-atoms—etched onto a flat substrate. Each pillar manipulates the phase of incoming light, allowing the lens to focus and direct beams without the need for traditional curvature.
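What "manipulating the phase" means can be sketched with the textbook hyperbolic phase profile for an ideal flat focusing lens. This is a generic illustration, not Metalenz's design: the 940 nm near-infrared wavelength and 1 mm focal length are assumed values, and the step that maps each target phase to a pillar size is omitted.

```python
import math


def target_phase(radius_um: float, focal_um: float, wavelength_um: float) -> float:
    """Phase delay (radians, wrapped to [0, 2*pi)) that a pillar at this radius must impose
    so that light from every radius arrives in phase at the focal point."""
    path_difference = math.sqrt(radius_um ** 2 + focal_um ** 2) - focal_um
    phase = -(2.0 * math.pi / wavelength_um) * path_difference
    return phase % (2.0 * math.pi)


# Assumed, illustrative parameters.
FOCAL_UM = 1000.0
WAVELENGTH_UM = 0.94

# Sample the required phase from the lens center outward.
profile = [
    (radius, target_phase(radius, FOCAL_UM, WAVELENGTH_UM))
    for radius in (0.0, 10.0, 50.0, 100.0)
]
```

Because the phase wraps every 2π, the whole profile fits in a layer thinner than a wavelength, which is what lets one flat surface stand in for the thickness variation of a curved element.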

According to Photonics Spectra, Samsung’s collaboration with Metalenz has already reached mass production through the Polar ID system, combining Metalenz optics with the ISOCELL Vizion 931 sensor. This system captures not only intensity but also polarization information, something conventional smartphone lenses cannot do with a single flat element.

The difference between traditional lens stacks and metalens-based systems becomes clearer when we compare their structural characteristics.

| Aspect | Conventional Lens Stack | Metalens (Metasurface) |
|---|---|---|
| Geometry | Multiple curved elements | Single flat nanostructured layer |
| Reflection Interfaces | Many air–glass boundaries | Significantly reduced interfaces |
| Module Thickness | Relatively thicker stack | Up to 50% smaller module size |
| Polarization Capture | Requires complex optics | Integrated into metasurface design |

Every air–glass interface in a conventional lens introduces a Fresnel reflection component. As lens counts increase—especially in compact zoom or authentication modules—these micro-reflections accumulate, forming visible ghosts around strong light sources. Because a metalens is fundamentally flat and can consolidate optical functions, it inherently reduces the number of reflective boundaries inside the module.

Polar ID leverages this advantage in a biometric context. By analyzing polarization signatures of reflected infrared light, it enhances spoof resistance while maintaining a thinner optical path. Fewer internal bounces mean less stray IR scatter, which improves signal purity before any digital correction is applied.

Industry coverage and supply chain reports cited by Metalenz indicate that this flat-optics approach is being positioned as a stepping stone toward broader camera integration in future flagship models. While current deployment focuses on facial authentication, the same physics—phase control without curvature—directly addresses flare generation at its structural origin.

In essence, metalens technology does not merely coat reflections away; it redesigns how light propagates through the module. By flattening optics and engineering phase at the nanoscale, Samsung’s Polar ID demonstrates that minimizing internal reflections can begin not at the coating layer, but at the geometric foundation of the lens itself.

Diffusion Models for Single Image Flare Removal: LightsOut and Difflare Explained

Diffusion models have rapidly become the most promising approach for Single Image Flare Removal (SIFR). Unlike traditional CNN-based pipelines that simply suppress bright artifacts, diffusion-based methods attempt to model how a clean image would have been formed in the absence of unwanted reflections. According to recent arXiv research such as LightsOut (arXiv:2510.15868), this shift from pixel correction to generative reconstruction significantly improves structural fidelity.

The key advantage of diffusion models is their ability to leverage a learned prior of “natural images.” Instead of treating flare as random noise, they treat it as a physically induced corruption layered on top of a plausible scene. This conceptual difference is what allows LightsOut and Difflare to outperform earlier flare removal networks that often blurred fine details or distorted geometry.

LightsOut: Outpainting Beyond the Frame

LightsOut introduces a diffusion-based outpainting strategy specifically designed for flare scenarios where the light source is outside the visible frame. Conventional models struggle in these cases because the causal source of the flare is missing. LightsOut reconstructs a plausible extension of the scene beyond the image boundary and infers the position and intensity of the hidden light source.

By estimating off-frame illumination, the model can subtract flare patterns in a way that remains physically consistent with optical behavior. The authors describe it as a plug-and-play preprocessing module, meaning it can enhance existing flare removal pipelines without retraining them from scratch.

| Feature | LightsOut | Conventional CNN |
|---|---|---|
| Handles off-frame light | Yes (via diffusion outpainting) | Limited |
| Physical consistency | Explicitly modeled | Implicit or ignored |
| Detail preservation | High | Often blurred |

This approach is particularly effective in night photography, where strong point sources such as streetlights generate complex ghost patterns. By reconstructing the broader illumination context, LightsOut reduces overcorrection artifacts that previously plagued flare removal tools.

Difflare: Structural Guidance in Latent Diffusion

Difflare, presented at BMVC, leverages pretrained latent diffusion models and introduces a Structural Guidance Injection Module (SGIM). Rather than relying solely on paired flare/no-flare datasets, it taps into the powerful image priors already embedded in large-scale diffusion training.

The innovation lies in guiding the diffusion process with structural constraints. Straight architectural lines, repetitive textures, and fine edges are explicitly protected during the denoising trajectory. This prevents the common failure mode where removing flare also erases subtle background information.

Experimental results reported in the BMVA archive show improved perceptual quality and structural similarity compared to prior flare removal baselines. Importantly, Difflare demonstrates that diffusion models can be controlled—not just sampled—to achieve task-specific restoration without sacrificing realism.

Together, LightsOut and Difflare signal a broader transition in computational photography. Flare removal is no longer a matter of masking bright streaks; it is a reconstruction problem grounded in optics and generative priors. For gadget enthusiasts who demand artifact-free night shots, these diffusion-driven methods represent the cutting edge of AI-powered image restoration.

Night Photography Breakthroughs: Optical Center Symmetry, MFDNet, and the FlareX Dataset

Night photography has long exposed the structural weaknesses of smartphone optics, especially when strong point light sources create complex ghosts and veiling flare. In 2026, however, research-grade AI models are beginning to treat flare not as random noise, but as a predictable optical phenomenon governed by geometry and frequency.

A key breakthrough is the adoption of the Optical Center Symmetry Prior. According to research on self-supervised nighttime flare removal, ghost artifacts tend to appear symmetrically around the optical center of the lens system. By modeling this symmetry, algorithms can localize flare candidates with sub-pixel precision instead of blindly suppressing bright regions.

This physics-aware assumption dramatically reduces overcorrection. Rather than erasing legitimate highlights, the model distinguishes between true scene luminance and internally reflected artifacts based on geometric consistency.
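The geometric core of the symmetry prior is a point reflection through the optical center. The sketch below is a hand-written simplification, not the cited method, which uses the prior inside a learned model; it assumes the optical center is known (here taken as the image center), and the function names and the pixel tolerance are hypothetical.

```python
import math


def mirrored_ghost_position(light_xy, center_xy):
    """Point-reflect the light source through the optical center."""
    light_x, light_y = light_xy
    center_x, center_y = center_xy
    return (2 * center_x - light_x, 2 * center_y - light_y)


def is_ghost_candidate(blob_xy, light_xy, center_xy, tolerance_px=12.0):
    """True if a bright blob sits where the symmetry prior predicts a ghost."""
    ghost_x, ghost_y = mirrored_ghost_position(light_xy, center_xy)
    distance = math.hypot(blob_xy[0] - ghost_x, blob_xy[1] - ghost_y)
    return distance <= tolerance_px


# Assumed example: a 4000x3000 frame with a street lamp in the upper right.
center = (2000, 1500)
lamp = (2600, 400)

predicted = mirrored_ghost_position(lamp, center)              # (1400, 2600)
green_dot_is_ghost = is_ghost_candidate((1405, 2596), lamp, center)
lamp_is_ghost = is_ghost_candidate(lamp, lamp, center)
```

In practice the optical center can shift with cropping or optical stabilization, so a tolerance or a learned offset is needed rather than an exact match.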

Another milestone is MFDNet, the Multi-Frequency Deflare Network designed specifically for nighttime scenes. As detailed in ResearchGate publications, MFDNet decomposes an image into low-frequency components, which capture illumination spread and glow, and high-frequency components, which contain edges and textures.

| Component | Represents | Processing Strategy |
|---|---|---|
| Low Frequency | Light bloom, flare haze | Selective suppression and smoothing |
| High Frequency | Edges, textures, fine detail | Detail preservation and reconstruction |

By attenuating flare in the low-frequency domain and then recombining the result with the intact high-frequency data, MFDNet avoids the soft, plastic look common in earlier denoising pipelines. The result is a night cityscape where neon signs remain crisp and building contours stay sharp.
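The split-and-recombine idea can be illustrated on a single scanline of pixel values. This is a toy sketch only: MFDNet is a learned network, whereas the code below uses a plain box blur as the low-pass filter and a fixed suppression strength, both of which are assumptions chosen for readability.

```python
def box_blur(signal, radius):
    """Moving average; the window shrinks at the edges instead of padding."""
    blurred = []
    for index in range(len(signal)):
        window = signal[max(0, index - radius): index + radius + 1]
        blurred.append(sum(window) / len(window))
    return blurred


def split_bands(signal, radius=2):
    """Low band = blurred signal, high band = residual, so low + high == signal."""
    low = box_blur(signal, radius)
    high = [value - smooth for value, smooth in zip(signal, low)]
    return low, high


def suppress_haze(signal, strength=0.5, radius=2):
    """Pull the low band toward its darkest level, then restore the untouched detail."""
    low, high = split_bands(signal, radius)
    floor = min(low)
    low_clean = [floor + (smooth - floor) * (1.0 - strength) for smooth in low]
    return [smooth + detail for smooth, detail in zip(low_clean, high)]


# A dark scanline with a glowing region in the middle.
scanline = [10, 12, 11, 60, 90, 62, 13, 11, 10]
deflared = suppress_haze(scanline, strength=0.5)
```

Because the high band is added back unchanged, pixel-to-pixel differences that define edges survive while the broad glow is reduced; a real model learns where that glow is flare and where it is legitimate scene light.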

Equally important is the FlareX dataset, introduced via OpenReview as a physics-informed benchmark. FlareX combines over 3,000 pairs of 3D-rendered flare simulations and real-world flare images, covering diverse lens coatings, aperture designs, and incident light angles.

This hybrid construction matters. Purely synthetic datasets lack real sensor noise characteristics, while purely real datasets lack ground truth. FlareX bridges that gap, allowing diffusion-based models to learn both physical plausibility and perceptual realism.

Together, Optical Center Symmetry, MFDNet, and FlareX redefine nighttime imaging from reactive correction to predictive modeling. Instead of merely cleaning artifacts, modern smartphones increasingly reconstruct scenes in alignment with optical physics. For users obsessed with low-light performance, this shift means fewer green ghosts, cleaner headlights, and night shots that finally reflect what the eye actually sees.

Third-Party Optical Filters and Ultra-Low Reflection Coatings in the Japanese Market

In Japan, where smartphone photography is deeply embedded in daily life and social media culture, third-party optical filters and ultra-low reflection coatings have evolved from niche accessories into performance-critical tools.

According to ranking data published in January 2026 by MyBest, circular polarizers, lens protectors, and dedicated lens hoods consistently dominate the camera accessory category, reflecting a user base that actively compensates for residual flare and ghosting at the hardware level.

Rather than relying solely on in-device AI correction, many Japanese users prioritize physically suppressing stray light before it even reaches the sensor.

| Category | Representative Products | Key Technology |
|---|---|---|
| C-PL Filters | KANI Premium CPL, Marumi PRIME PLASMA SPUTTERING | Ultra-low reflectance 0.18%–0.3% |
| Lens Protectors | Kenko ZX II, Marumi PRIME LENS PROTECT | ULC multi-layer coating |
| Lens Hoods | JJC reversible models | Stray light suppression |

Marumi’s PRIME PLASMA SPUTTERING C-P.L is particularly noteworthy. By using plasma sputtering rather than conventional vapor deposition, it achieves extremely low reflectance across the visible spectrum, with figures as low as 0.18% reported in retail specifications. This directly reduces secondary reflections between filter and lens surface, a known cause of night-time ghosting.
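A rough back-of-the-envelope sketch shows why the filter's reflectance matters here. A filter-to-lens ghost bounces once off the front of the lens and once off the rear of the filter, so its intensity relative to the light source is approximately the product of the two reflectances. The 0.18% value is the retail figure quoted above; the 4% uncoated figure and the 0.5% lens front-surface reflectance are illustrative assumptions, and the function names are hypothetical.

```python
import math


def filter_ghost_ratio(r_filter: float, r_lens: float) -> float:
    """Approximate ghost intensity relative to the source for a two-bounce
    path: lens front surface -> filter rear surface -> back into the lens.
    Transmission losses and higher-order bounces are ignored."""
    if not (0.0 <= r_filter <= 1.0 and 0.0 <= r_lens <= 1.0):
        raise ValueError("reflectances must be fractions between 0 and 1")
    return r_filter * r_lens


def stops_below_source(ratio: float) -> float:
    """Express an intensity ratio as photographic stops below the source."""
    return -math.log2(ratio)


R_LENS_ASSUMED = 0.005   # assumption: coated lens front surface, 0.5%
R_UNCOATED = 0.04        # assumption: typical uncoated glass surface, 4%
R_SPUTTERED = 0.0018     # retail figure quoted in the text, 0.18%

uncoated = filter_ghost_ratio(R_UNCOATED, R_LENS_ASSUMED)
sputtered = filter_ghost_ratio(R_SPUTTERED, R_LENS_ASSUMED)
improvement = uncoated / sputtered

print(f"Uncoated filter ghost:  {stops_below_source(uncoated):.1f} stops below source")
print(f"Sputtered filter ghost: {stops_below_source(sputtered):.1f} stops below source")
print(f"Ghost is roughly {improvement:.0f}x dimmer with the low-reflectance filter")
```

Under these assumptions the improvement depends only on the ratio of the two filter reflectances, so the assumed lens value cancels out of the comparison.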

Kenko’s ZX II protector series integrates ULC coatings engineered to minimize surface reflections while preserving high transmittance. For smartphone users adapting interchangeable-lens accessories via mounts, these coatings help maintain contrast even under strong point light sources.

Ultra-low reflection coatings in Japan are increasingly defined by two metrics: sub-0.3% surface reflectance and over 95% light transmittance.

The demand is also cultural. Surveys by Hakuhodo and JTB indicate record-high smartphone engagement times and growing use of generative AI in content creation. As users edit, grade, and publish directly from their devices, base image purity becomes critical. Flare artifacts that survive capture can complicate AI-based enhancement workflows.

Additionally, titanium-alloy framed lens protection films with AR coatings, now marketed for devices like the iPhone 17 series, demonstrate how durability and optical integrity are being co-optimized. These products aim to protect protruding camera modules without introducing measurable flare, addressing a long-standing trade-off between safety and image quality.

In the Japanese market, third-party optical solutions are no longer mere add-ons. They function as an extension of the optical design itself, enabling enthusiasts to fine-tune reflection control beyond factory specifications while preserving the tonal fidelity demanded by high-end mobile imaging.

Expert Insights: The Physical Limits of Curved Lenses and the Risks of Over-Aggressive AI

Even in 2026, no matter how advanced coatings or AI engines become, curved lenses remain bound by the fundamental laws of optics. As several experts have pointed out in user communities and technical discussions, reflection and refraction are not “bugs” but inevitable consequences of light crossing media with different refractive indices.

As long as a lens has curvature and multiple elements, some portion of incoming light will reflect internally. This is not a manufacturing flaw but a physical certainty described by the Fresnel equations. When modern smartphones adopt more complex zoom stacks, they add air-glass interfaces, each a potential source of ghosting, and brighter apertures admit more of the oblique light that feeds those reflections.
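As a minimal sketch of that physics, the normal-incidence form of the Fresnel equations gives the reflectance of a single uncoated interface, and a simple pair count shows how quickly two-bounce ghost paths multiply as surfaces are added. The refractive index of 1.5 and the seven-element stack are illustrative assumptions, not specifications of any particular phone, and the function names are hypothetical.

```python
def fresnel_reflectance_normal(n1: float, n2: float) -> float:
    """Reflectance of an uncoated interface at normal incidence:
    R = ((n1 - n2) / (n1 + n2)) ** 2"""
    if n1 <= 0 or n2 <= 0:
        raise ValueError("refractive indices must be positive")
    return ((n1 - n2) / (n1 + n2)) ** 2


def two_bounce_ghost_paths(num_surfaces: int) -> int:
    """Number of distinct surface pairs that can form a two-reflection
    ghost: N * (N - 1) / 2."""
    if num_surfaces < 0:
        raise ValueError("num_surfaces must be non-negative")
    return num_surfaces * (num_surfaces - 1) // 2


N_AIR = 1.0
N_LENS_ASSUMED = 1.5     # assumption: generic glass or optical plastic
ELEMENTS_ASSUMED = 7     # assumption: illustrative lens stack
surfaces = 2 * ELEMENTS_ASSUMED  # every element treated as air-spaced

r = fresnel_reflectance_normal(N_AIR, N_LENS_ASSUMED)
print(f"Per-surface reflectance, uncoated: {r:.1%}")
print(f"Surfaces: {surfaces}, two-bounce ghost paths: {two_bounce_ghost_paths(surfaces)}")
```

The pair count grows quadratically with the number of surfaces, which is why each added element costs more in ghosting risk than the one before it.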

From a purely physical perspective, the challenge can be summarized as follows.

| Factor | Benefit | Optical Risk |
|---|---|---|
| Larger aperture | Improved low-light capture | Higher flare susceptibility |
| More lens elements | Advanced zoom & correction | More reflective interfaces |
| Thinner modules | Compact device design | Stricter angle tolerances |

Discussions in Apple Support Communities and Reddit threads about the iPhone 17 Pro illustrate this tension clearly. Users report persistent green dot ghosts under strong point light sources, even with multilayer coatings applied. These cases suggest that coating technology can reduce reflections dramatically, but it cannot eliminate them entirely.

This is where AI steps in—but here, too, limits exist. Recent diffusion-based approaches such as LightsOut and Difflare, introduced in peer-reviewed research, can reconstruct flare-free imagery with impressive fidelity. By estimating off-frame light sources or injecting structural guidance, these models preserve lines and textures that older algorithms would blur.

However, aggressive AI correction introduces a different kind of risk. When an algorithm removes flare, it must decide whether a bright streak is optical noise or intentional artistic expression. Overcorrection may flatten contrast, erase atmospheric glow, or subtly alter color balance.

The danger is not visible artifacts but invisible reinterpretation. When AI “hallucinates” clean pixels, it replaces physical light behavior with statistical probability.

According to flare-removal studies published on arXiv and in BMVA proceedings, diffusion models rely heavily on learned priors of what a “natural” image should look like. In edge cases such as concert lighting, cinematic backlight, or neon reflections, the model may converge toward plausibility rather than truth.

For gadget enthusiasts and creators, this means understanding a critical boundary: optics defines what light actually did, while AI defines what the image should resemble. The more complex the lens system becomes, the harder it is to separate correction from reinterpretation.

Ultimately, curved lenses impose immutable constraints, and AI offers probabilistic solutions—not physical reversals. Recognizing this distinction allows users to evaluate whether a camera system prioritizes optical integrity first, or computational cleanup after the fact.

True innovation lies not in denying physical limits, but in designing systems that respect them while using AI with restraint.

How to Choose a 2026 Flagship for Cleaner Night Photos and Professional Video

Choosing a 2026 flagship for cleaner night photos and professional video means looking far beyond megapixels. You should evaluate how each device controls light at three levels: optics, structure, and computational processing. Night scenes and high-contrast video push every weakness of a lens system to the surface, especially flare and ghosting.

The key question is not how bright the lens is, but how well the camera manages unwanted reflections inside that brightness. According to discussions in Apple Support Communities and Reddit threads, even premium devices can struggle with point light sources at night, producing green ghost artifacts when optical control is insufficient.

What to Compare in 2026 Flagships

| Layer | What to Check | Why It Matters at Night |
|---|---|---|
| Optical Coating | ALC, Zeiss T*, nano-coatings | Reduces internal reflections and color shift |
| Lens Structure | Edge blackening, internal shielding | Suppresses veiling flare from bright sources |
| AI Processing | Diffusion-based flare removal | Restores detail without smearing highlights |

For night photography, pay close attention to anti-reflective technologies. Sharp’s moth-eye research shows that nanostructures can reduce surface reflectance from around 4% to near 0.2%, dramatically improving contrast in backlit scenes. While not every flagship fully adopts moth-eye surfaces, advances in nano-coating thickness control—such as Apple’s Atomic Layer Coating—are directly aimed at minimizing multi-surface reflections in complex zoom systems.
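To put those reflectance figures in perspective, the following sketch estimates how much light survives a multi-surface lens stack at roughly 4% versus roughly 0.2% reflectance per surface. It assumes 14 air-spaced surfaces (an illustrative count, not any specific phone's design), ignores absorption, and uses hypothetical function names.

```python
def stack_transmittance(r_surface: float, num_surfaces: int) -> float:
    """Fraction of light transmitted through a stack of identical
    surfaces, ignoring absorption and re-entering reflections:
    T = (1 - R) ** N"""
    if not 0.0 <= r_surface <= 1.0:
        raise ValueError("r_surface must be a fraction between 0 and 1")
    if num_surfaces < 0:
        raise ValueError("num_surfaces must be non-negative")
    return (1.0 - r_surface) ** num_surfaces


SURFACES_ASSUMED = 14    # assumption: illustrative count of air-spaced surfaces
R_UNTREATED = 0.04       # "around 4%" from the text
R_MOTH_EYE = 0.002       # "near 0.2%" from the text

t_untreated = stack_transmittance(R_UNTREATED, SURFACES_ASSUMED)
t_moth_eye = stack_transmittance(R_MOTH_EYE, SURFACES_ASSUMED)

for label, t in (("Untreated", t_untreated), ("Moth-eye", t_moth_eye)):
    # Reflected light does not vanish; part of it can return as flare or haze.
    print(f"{label}: {t:.1%} transmitted, {1 - t:.1%} reflected")
```

The reflected share is the pool from which veiling flare and ghosts are drawn, so shrinking it at every surface improves contrast as well as brightness.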

If you shoot professional video, internal lens design becomes even more critical. Video exaggerates flare because moving light sources create dynamic ghost trails. Devices that refine internal black matrix structures or optimize lens curvature through simulation—similar to Sony’s collaboration with Zeiss—tend to preserve contrast across wide angles and during panning shots.

AI is the third pillar. Recent academic work such as LightsOut and Difflare demonstrates that diffusion models can reconstruct flare-free images while preserving structural lines. However, over-aggressive correction may remove intentional cinematic flare. You should test how each phone balances realism and artistic character, especially in night street scenes.

Finally, consider real-world habits. Fingerprints dramatically amplify flare by scattering light. Even the best coating fails if the lens surface is dirty. If you rely on external protection, choose high-transmittance AR-coated glass; Japanese rankings highlight plasma sputtering filters with reflectance below 0.3%, which maintain contrast while protecting the lens.

In 2026, the best flagship for night and video is the one that treats light as a precision resource. Evaluate coating technology, internal optical discipline, and AI philosophy together, and you will select a device that delivers both clinical clarity and professional-grade dynamic range.

References

- Android Authority: iPhone could never: The Galaxy S26 Ultra could fix lens flare, yellow skin tones

- MacRumors: iPhone 17 Pro: Everything We Know

- FoneArena: Sony Xperia 1 VII with 6.5″ FHD+ OLED 120Hz display, Snapdragon 8 Elite, improved ultra-wide camera announced

- SHARP Blog: Sharp’s Moth-eye technology is a unique and advanced technology that is more than just low reflection

- Photonics Spectra: Metalenz Partners with Samsung on Facial Recognition Tech

- arXiv: LightsOut: Diffusion-based Outpainting for Enhanced Lens Flare Removal

- OpenReview: FlareX: A Physics-Informed Dataset for Lens Flare Removal via 2D Synthesis and 3D Rendering