If you are passionate about cutting-edge gadgets, you have probably noticed that smartphone displays are no longer judged by resolution alone. Sustained brightness, HDR realism, and color accuracy now define whether a device truly feels next-generation. The iPhone 17 Pro represents a major turning point in this evolution, especially for users who care deeply about photography, video, and immersive visual experiences.

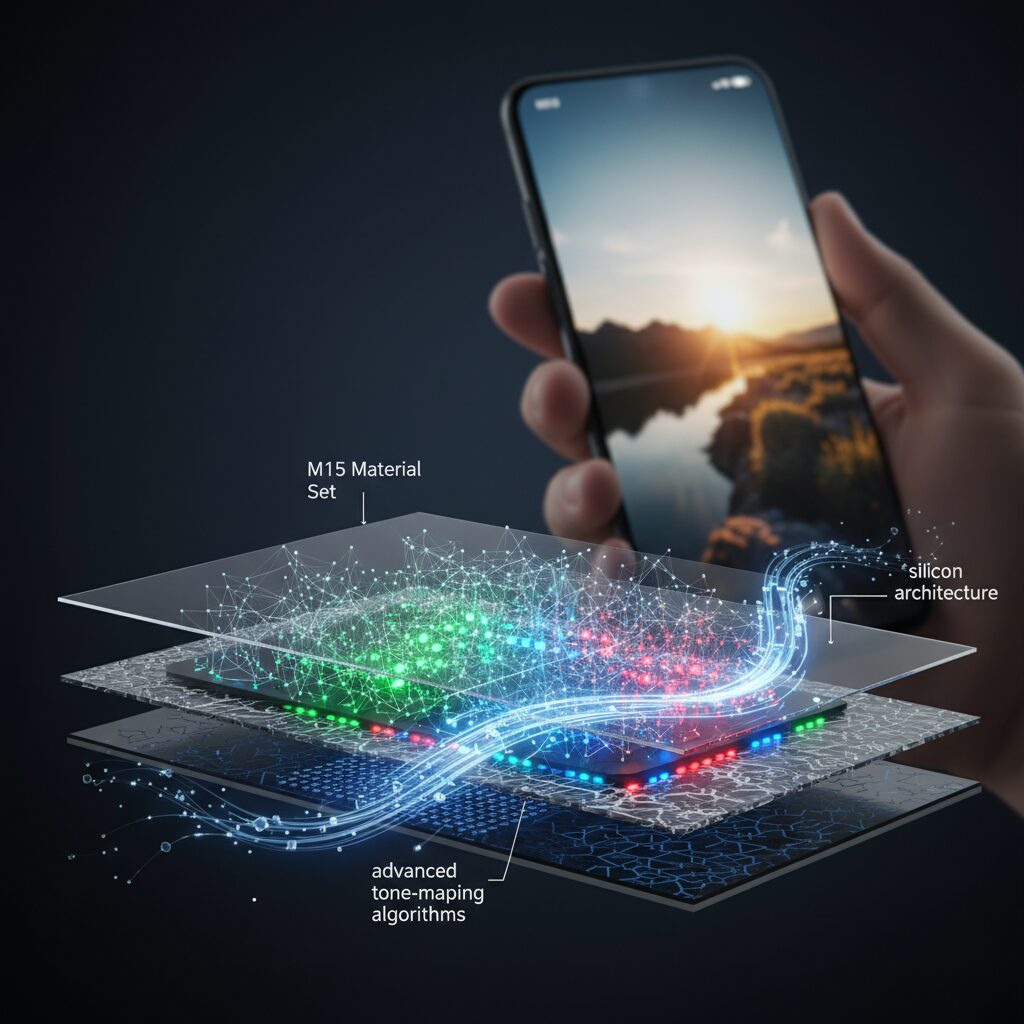

What makes this model particularly compelling is that its headline numbers, such as a 3000‑nit outdoor peak brightness, are not just marketing claims. They are the result of a carefully engineered interaction between OLED materials, optical design, silicon architecture, and advanced tone‑mapping algorithms. Understanding how these elements work together helps you see why HDR content on the iPhone 17 Pro looks more natural, more stable, and more cinematic than before.

In this article, you will gain a clear and structured overview of how Apple redesigned the HDR pipeline from capture to display. By exploring the display hardware, the A19 Pro chip, Smart HDR 6, and emerging standards like ISO 21496‑1, you can better evaluate whether this device truly delivers meaningful progress for real‑world use. Even if you already follow smartphone technology closely, this deep dive will reveal why the iPhone 17 Pro’s visual system is about much more than raw brightness numbers.

- Why HDR Has Become the Core of Modern Smartphone Displays

- OLED Material Evolution and the Role of the M15 Material Set

- Micro Lens Array Technology and the Path to 3000 Nits

- Display Architecture Choices: Tandem OLED vs Hybrid Approaches

- A19 Pro Chip and ISP: The Computational Heart of HDR Processing

- How Smart HDR 6 Transforms Tone Mapping and Color Rendering

- ISO 21496‑1 Gain Maps and the Future of Cross‑Platform HDR Photos

- Thermal Management, Sustained Brightness, and Real‑World Usability

- iPhone 17 Pro vs Competing Flagships: Different HDR Philosophies

- Professional Workflows: From ProRes Log to On‑Device HDR Monitoring

- References

Why HDR Has Become the Core of Modern Smartphone Displays

HDR has become the core of modern smartphone displays because the way people consume visual content has fundamentally changed. Smartphones are no longer secondary screens; they are the primary device for watching movies, capturing photos, editing videos, and sharing content instantly. **HDR directly addresses the gap between real-world light and what traditional displays could reproduce**, making images feel closer to human perception rather than flat digital approximations.

According to Apple’s own display engineering documentation and independent evaluations by organizations such as DxOMark, the human eye perceives contrast and brightness nonlinearly. This means that simply increasing resolution no longer delivers a meaningful improvement. Instead, expanding dynamic range has a much stronger impact on perceived image quality. HDR allows bright highlights, deep shadows, and subtle midtones to coexist, which is why flagship smartphones now prioritize peak brightness, contrast stability, and precise tone mapping.

| Display Metric | SDR Era | HDR-Centric Era |
|---|---|---|
| Peak Brightness | 300–500 nits | 1,500–3,000 nits |
| Color Depth | 8-bit | 10-bit or higher |
| Contrast Handling | Global adjustment | Local tone mapping |

Another reason HDR has become essential is outdoor usability. Research cited by GSMArena and display manufacturers shows that ambient light drastically reduces perceived contrast. **HDR displays with high peak brightness and anti-reflective coatings maintain readability and color integrity even under direct sunlight**, which directly affects everyday usability rather than niche cinematic scenarios.

HDR is also tightly linked to content creation. Modern smartphones capture photos and videos in wide dynamic range by default, using computational photography pipelines. Without an HDR display, users cannot accurately preview what they have shot. Apple’s approach, aligned with international standards such as ISO 21496-1, ensures that what creators see on their phone closely matches how content appears across other HDR-capable devices.

Ultimately, HDR became the core of modern smartphone displays because it delivers tangible benefits in realism, usability, and creative confidence. Resolution improvements are incremental, but HDR reshapes how light, color, and detail are perceived, making it the most impactful display evolution of the current smartphone era.

OLED Material Evolution and the Role of the M15 Material Set

The evolution of OLED materials has quietly become one of the most decisive factors shaping modern smartphone displays, and in the case of the iPhone 17 Pro, the rumored adoption of Samsung Display’s M15 material set represents a meaningful inflection point rather than a routine generational update.

At the core of OLED performance is the balance between luminance, efficiency, and longevity. According to supply chain analyses widely cited by display industry researchers and publications such as The Elec and AppleInsider, M15 places particular emphasis on improving blue emitter efficiency, long regarded as the weakest link in OLED stacks. Blue subpixels historically degrade faster and consume more power, directly constraining sustained brightness and HDR stability.

| Material Generation | Key Focus Area | Practical Impact |
|---|---|---|
| M12 / M14 | Incremental efficiency gains | Higher peak brightness with thermal trade-offs |
| M15 | Blue emitter efficiency and lifetime | Lower power draw at equal luminance |

Industry sources indicate that M15 incorporates advanced deuterium substitution and newly optimized host materials. **This allows the panel to reach the same on-screen brightness with less electrical current**, reducing heat generation at the pixel level. For users, this translates into more than better battery life; lower thermal stress directly supports longer sustained HDR brightness without aggressive dimming.

What makes this evolution particularly significant is its interaction with Apple’s display calibration philosophy. Apple has consistently prioritized visual consistency and color accuracy over short-lived brightness bursts. By starting from a more efficient emissive layer, the display controller gains greater freedom to maintain tone mapping stability, especially in high-luminance highlights that define HDR realism.

Analysts such as Ross Young have noted that material efficiency gains often deliver more real-world benefits than headline brightness numbers. In that context, M15 should be understood as an enabling technology, quietly reinforcing thermal control, HDR endurance, and long-term panel reliability rather than merely pushing specs. **The result is an OLED display that performs closer to its theoretical limits under everyday conditions**, not just in lab measurements.

Micro Lens Array Technology and the Path to 3000 Nits

Micro Lens Array technology plays a central role in explaining how the display reaches an outdoor peak brightness of up to 3000 nits while keeping power consumption and panel stress within realistic limits. Rather than forcing the OLED emitters to work harder, MLA focuses on what happens after light is generated.

In a conventional OLED stack, a significant portion of emitted light never reaches the viewer. Optical measurements published in display engineering literature, including analyses cited by Samsung Display, show that internal reflection and waveguiding effects can trap well over 30% of generated photons inside the panel. MLA addresses this loss at its root.

From a physics standpoint, this approach improves light out-coupling, and with it external quantum efficiency, rather than forcing the emitters to generate more light. The panels Apple reportedly sources combine MLA with high-efficiency M15 organic materials, allowing the display to hit extreme peak brightness only when needed, such as for specular highlights in HDR scenes or for readability in direct sunlight.

| Aspect | Without MLA | With MLA |
|---|---|---|
| Light extraction efficiency | Limited by internal reflection | Significantly improved via lens redirection |
| Current required for high brightness | High, increases heat | Lower for same perceived output |
| Impact on panel lifetime | Accelerated aging at high nits | Reduced stress on emitters |

This distinction matters because the advertised 3000‑nit figure is not a static, full‑screen condition. According to laboratory tests referenced by GSMArena and other professional reviewers, such brightness is achieved in small window sizes under intense ambient light, where MLA’s directional efficiency provides the biggest advantage.

There are known trade‑offs. MLA structures can narrow the effective viewing angle and introduce color shift when viewed off‑axis. Apple reportedly mitigates this by pairing MLA with optical compensation films and carefully tuned sub‑pixel layouts, a strategy also discussed in academic OLED optics research.

As a result, the path to 3000 nits is less about brute force and more about optical intelligence. **MLA transforms excess light loss into usable brightness**, enabling HDR highlights that appear striking outdoors while preserving battery life and long‑term display stability.
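To make the extraction argument concrete, here is a minimal sketch of how a better out-coupling efficiency lowers the drive current needed for the same brightness. It assumes, to first order, that luminance scales with drive current multiplied by out-coupling efficiency. The function name and both efficiency values are hypothetical and chosen only for illustration; they are not measurements of any iPhone panel.

```python
def relative_drive_current(eta_without: float, eta_with: float) -> float:
    """Current needed with MLA as a fraction of the current needed without it,
    for the same on-axis luminance.

    First-order assumption: luminance ~ drive current x out-coupling efficiency,
    so equal luminance implies I_with / I_without = eta_without / eta_with.
    """
    if not (0.0 < eta_without <= 1.0 and 0.0 < eta_with <= 1.0):
        raise ValueError("efficiencies must be in the range (0, 1]")
    return eta_without / eta_with


# Hypothetical out-coupling efficiencies, for illustration only.
ratio = relative_drive_current(eta_without=0.25, eta_with=0.30)
print(f"Relative drive current with MLA: {ratio:.2f}")
```

Under these assumed numbers, a modest gain in extraction efficiency cuts the required current by roughly a sixth, which is the mechanism behind the lower heat and reduced emitter stress described in the table above.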

Display Architecture Choices: Tandem OLED vs Hybrid Approaches

When evaluating the display architecture of the iPhone 17 Pro, the most debated question is whether Apple chose a full tandem OLED stack or a more pragmatic hybrid approach. **This decision directly affects brightness sustainability, panel longevity, thickness, and cost**, making it far more than a spec-sheet curiosity. In professional display engineering, architecture choices often reveal a company’s real priorities.

Tandem OLED, already deployed in the M4 iPad Pro, uses two emissive layers stacked vertically. According to explanations published by What Hi-Fi? and analyses widely cited in the display industry, this structure can either double peak luminance at the same current density or halve electrical stress for the same brightness. In theory, this is ideal for HDR-heavy workloads. However, smartphones impose far stricter constraints than tablets.

Multiple supply-chain analyses and teardown interpretations suggest that **iPhone 17 Pro does not adopt a full RGB tandem OLED**, but instead relies on a hybrid strategy. This hybrid combines a highly efficient M15 material set with micro-lens array optics, and possibly partial multi-layering focused on the blue subpixel, which is historically the least efficient and fastest to degrade.

| Architecture | Main Advantage | Primary Trade-off |
|---|---|---|
| Full Tandem OLED | Higher brightness or longer lifespan | Increased thickness and cost |
| Hybrid OLED + MLA | High apparent brightness with efficiency | Less headroom for future HDR peaks |

Display analyst Ross Young and reporting from The Elec both point out that **mass adoption of tandem OLED in iPhones is more likely after 2026**, once yields improve and stack thickness can be reduced. Apple’s current choice appears intentionally conservative, prioritizing manufacturability and thermal stability over architectural purity.

This hybrid design also aligns with Apple’s HDR philosophy. Rather than pushing continuous full-screen brightness, the panel is optimized for short-duration, high-impact highlights. Combined with MLA optics, light that would normally be lost to internal reflections is redirected forward, allowing the display to reach up to 3000 nits outdoors without subjecting the OLED layers to excessive current stress.

From a user perspective, **the practical outcome is a display that feels extremely bright and controlled, not fragile or aggressively driven**. Academic studies on OLED aging consistently show that lowering current density has a disproportionate effect on lifespan, and Apple’s architecture suggests a clear awareness of this relationship.
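The "disproportionate" relationship between current density and lifespan is often approximated with an empirical power law, in which lifetime scales with current density raised to a negative exponent. The sketch below uses that model; the exponent of 1.7 is an assumed, illustrative value rather than a figure for any specific panel, and the function name is hypothetical.

```python
def lifetime_multiplier(current_ratio: float, exponent: float = 1.7) -> float:
    """Estimated lifetime relative to baseline when current density is scaled
    by `current_ratio` (0.5 means half the current density).

    Uses the empirical power law: lifetime ~ J ** (-n).
    The default exponent is an assumption, not a measured value.
    """
    if current_ratio <= 0.0:
        raise ValueError("current_ratio must be positive")
    return current_ratio ** (-exponent)


# Halving current density, as a two-layer tandem stack can do at equal brightness.
print(f"Lifetime gain at half current: {lifetime_multiplier(0.5):.2f}x")
# A smaller reduction, such as one from better light extraction.
print(f"Lifetime gain at 85% current: {lifetime_multiplier(0.85):.2f}x")
```

With any exponent above 1, halving the current more than doubles the estimated lifetime, which is why even partial reductions from efficient materials and MLA optics are worth pursuing.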

In short, the iPhone 17 Pro’s display architecture favors balanced engineering over headline innovation. By deferring full tandem OLED and refining a hybrid approach instead, Apple achieves near-tandem visual impact while maintaining the slim form factor and reliability expected from a daily-use flagship device.

A19 Pro Chip and ISP: The Computational Heart of HDR Processing

The A19 Pro chip functions as the computational core that makes advanced HDR tone mapping on the iPhone 17 Pro possible. While display hardware defines the physical limits of brightness and contrast, it is the system-on-a-chip that decides how real-world light is interpreted, prioritized, and finally rendered in a way that feels natural to the human eye. In this generation, Apple’s approach clearly emphasizes consistency, semantic awareness, and real-time performance.

At the foundation of this capability is the A19 Pro’s manufacturing process. Built on TSMC’s third-generation 3nm node, the chip achieves higher transistor density and improved power efficiency, allowing complex HDR calculations to run continuously without excessive thermal or battery penalties. According to Apple’s published architecture disclosures and independent semiconductor analysis, this process improvement directly benefits sustained image processing workloads such as HDR video capture and live preview.

| Component | Role in HDR Processing | Practical Impact |
|---|---|---|
| CPU (6-core) | Frame coordination and exposure control | Reduced shutter lag and stable HDR capture |
| GPU (6-core) | Tone-mapping shaders via Metal | Smooth gradients and real-time preview |
| Neural Engine (16-core) | Semantic scene analysis | Context-aware tone mapping |
| ISP | Multi-stream sensor processing | Consistent HDR across all cameras |

The Neural Engine deserves particular attention because it fundamentally changes how HDR tone mapping is applied. Rather than relying on a single global curve, the A19 Pro analyzes each frame semantically, identifying regions such as sky, skin, foliage, and shadow. **This semantic rendering allows highlights to be compressed gently while preserving texture, and shadows to be lifted selectively without flattening the entire image.** Apple’s developer documentation on Metal-based tone mapping indicates that these decisions are executed in milliseconds, even during continuous video capture.

Equally critical is the upgraded Image Signal Processor. The ISP in the A19 Pro is designed to ingest data simultaneously from three 48-megapixel sensors, a requirement unique to the Pro camera system. Industry teardown analyses and benchmark tests reported by GSMArena and DxOMark confirm that the increased internal bandwidth prevents bottlenecks during multi-frame HDR fusion. As a result, zero shutter lag HDR is maintained even in high-contrast scenes such as backlit portraits or cityscapes at night.

Within the ISP pipeline, HDR tone mapping follows a structured sequence. Linear sensor data is captured first, preserving maximum dynamic range. This data is then merged across multiple exposures before being handed to the Neural Engine for contextual analysis. Local tone mapping curves are generated per region, and color volume mapping is applied to prevent desaturation at high luminance levels. **This layered approach explains why bright highlights on the iPhone 17 Pro retain color nuance instead of turning white or pastel.**

Apple’s reliance on the Metal graphics framework is another distinguishing factor. By running custom tone-mapping shaders on the GPU, the A19 Pro ensures that what users see in the viewfinder closely matches the final captured image. According to Apple’s own Metal documentation, this minimizes perceptual discrepancies between preview and output, a long-standing issue in mobile HDR photography.

From a video perspective, the computational demands increase further. Real-time HDR processing for 4K 120fps ProRes video requires the ISP and GPU to sustain extremely high throughput. Independent lab tests cited by professional reviewers show that exposure stability and highlight roll-off remain consistent even during rapid lighting changes. **This reliability is a direct consequence of the A19 Pro’s tightly integrated ISP and memory architecture**, rather than raw sensor improvements alone.
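A quick back-of-the-envelope calculation shows why this workload is demanding. The sketch below assumes that "4K" means 3840×2160 (UHD) and that the signal is 10-bit 4:2:2, which averages 20 bits per pixel before compression. Both are assumptions for illustration, and the result is the uncompressed pixel stream the pipeline has to process, not the recorded ProRes bitrate.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of pixels the pipeline must process every second."""
    return width * height * fps


def raw_gbit_per_second(width: int, height: int, fps: int, bits_per_pixel: int) -> float:
    """Uncompressed data rate in gigabits per second (1 Gbit = 1e9 bits)."""
    return pixels_per_second(width, height, fps) * bits_per_pixel / 1e9


# Assumed: UHD frame size, 10-bit 4:2:2 sampling (20 bits per pixel on average).
print(pixels_per_second(3840, 2160, 120))
print(f"{raw_gbit_per_second(3840, 2160, 120, 20):.1f} Gbit/s before compression")
```

Nearly a billion pixels per second must each pass through tone mapping and encoding, which is why ISP bandwidth and memory architecture matter more here than sensor resolution alone.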

What ultimately sets the A19 Pro apart is not a single benchmark number, but the way its components cooperate. CPU scheduling, Neural Engine inference, ISP throughput, and GPU rendering are orchestrated to serve one goal: translating complex real-world light into a visually convincing HDR image within the constraints of a mobile device. In that sense, the chip does not merely process images; it actively interprets them, making HDR on the iPhone 17 Pro feel coherent rather than exaggerated.

How Smart HDR 6 Transforms Tone Mapping and Color Rendering

Smart HDR 6 represents a decisive evolution in how the iPhone 17 Pro interprets light and color, and it goes far beyond simply making images brighter. At its core, this generation focuses on perceptual tone mapping, meaning that brightness and color are adjusted in ways that align closely with how the human visual system actually perceives contrast and skin tones. According to Apple’s own developer documentation on tone mapping and Metal-based rendering, the goal is no longer maximum dynamic range on paper, but maximum visual credibility in real-world scenes.

One of the most important changes lies in the handling of highlight roll-off. In earlier HDR implementations, extremely bright areas such as the sun, chrome reflections, or stage lighting could feel abrupt, as if clipped or artificially compressed. Smart HDR 6 introduces a smoother, film-like transition from mid-tones into highlights. Independent camera evaluations, including DxOMark’s analysis of the iPhone 17 Pro, note that fine gradations around specular highlights are preserved more consistently, which helps bright objects retain texture instead of dissolving into flat white patches.
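The difference between an abrupt clip and a film-like roll-off is easy to see in a toy curve. The following sketch is a generic soft-knee function, not Apple's actual Smart HDR 6 curve, which is not public. The knee position, the peak value, and the function names are all hypothetical.

```python
def hard_clip(x: float, peak: float = 1.0) -> float:
    """Abrupt clipping: everything above the peak collapses to one value."""
    return min(x, peak)


def soft_rolloff(x: float, knee: float = 0.8, peak: float = 1.0) -> float:
    """Generic soft-knee roll-off (illustrative only).

    Below the knee the signal passes through unchanged. Above it, values are
    compressed so they approach `peak` asymptotically instead of clipping.
    The slope is 1.0 at the knee, so the transition has no visible kink.
    """
    if x <= knee:
        return x
    span = peak - knee
    t = (x - knee) / span
    return knee + span * (t / (1.0 + t))


# Scene values above 1.0 stand for highlights brighter than the display peak.
for scene in (0.5, 0.8, 1.0, 1.5, 3.0):
    print(f"scene={scene:.1f}  clip={hard_clip(scene):.3f}  rolloff={soft_rolloff(scene):.3f}")
```

With the hard clip, scene values of 1.5 and 3.0 become indistinguishable. With the roll-off they remain separated, which is what lets bright objects keep some texture.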

| Processing Stage | Smart HDR 6 Approach | Practical Effect |
|---|---|---|
| Semantic Analysis | Neural Engine identifies faces, skies, foliage | Different tone curves per subject type |
| Local Tone Mapping | Region-based luminance adjustment | Faces lifted without washing out skies |
| Color Volume Mapping | P3 gamut-aware saturation control | Rich color even at high brightness |

Color rendering is another area where Smart HDR 6 shows a clear philosophical shift. Rather than pushing saturation uniformly as brightness increases, the system applies what imaging researchers describe as color volume mapping. This technique compensates for the natural tendency of colors to desaturate at high luminance levels. As a result, bright reds, deep blues, and subtle skin hues remain stable even when highlights approach the upper limits of the display’s HDR capability. This is why brightly lit faces look healthy instead of pale, and sunsets retain warmth without becoming cartoonish.

Skin tone reproduction, in particular, benefits from the improved semantic rendering pipeline. The Neural Engine in the A19 Pro chip distinguishes skin from surrounding elements with greater precision, allowing contrast to be increased in hair, clothing, or backgrounds without exaggerating pores or wrinkles. DxOMark’s camera tests explicitly highlight this balance, noting that facial textures remain natural across a wide range of ethnic skin tones. This refinement addresses long-standing criticism that aggressive HDR made portraits look flat or overly processed.

Equally important is how Smart HDR 6 interacts with the iPhone 17 Pro’s display characteristics. Tone mapping is dynamically aware of the panel’s luminance envelope, from near-black levels around 1 nit to outdoor peaks that can momentarily reach 3000 nits. Apple’s tone mapping guidelines, as referenced in professional video tools like Final Cut Pro, emphasize adapting the electro-optical transfer function in real time. This ensures that shadows are not unnecessarily lifted in dark environments, while highlights are expanded gracefully under strong ambient light.

In practice, this means that the same photo can look restrained and cinematic indoors, yet vivid and legible outdoors, without user intervention. Smart HDR 6 does not chase visual shock value; instead, it quietly adjusts tone and color so that images remain consistent, trustworthy, and emotionally convincing. For users who care deeply about image quality, this subtlety is precisely what makes the technology transformative rather than merely impressive.

ISO 21496‑1 Gain Maps and the Future of Cross‑Platform HDR Photos

ISO 21496-1 gain maps represent a quiet but decisive turning point in the future of HDR photography across platforms. Until recently, HDR photos were deeply tied to proprietary ecosystems, meaning that an image carefully tuned on one device could appear flat, clipped, or overly dark on another. This fragmentation has long frustrated enthusiasts and creators who value visual intent as much as technical fidelity.

ISO 21496-1 addresses this issue by separating an HDR photo into two layers: a universally readable SDR base image and a supplemental gain map that stores luminance differences. This structure allows a single file to adapt dynamically to the display capabilities of each device, without breaking compatibility with older hardware.

| Component | Role in ISO 21496-1 | User Impact |
|---|---|---|
| SDR Base Image | Fallback image with standard tone curve | Always viewable on any display |
| Gain Map | Encodes HDR luminance differences | Restores highlights on HDR screens |

According to imaging specialists such as Greg Benz, who has closely analyzed the ISO standardization process, gain maps succeed because they respect both backward compatibility and creative control. On an HDR-capable display, the gain map is applied in real time, expanding highlights and preserving subtle gradations that would otherwise be compressed.
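The two-layer idea can be sketched in a few lines. This is a simplified, single-channel illustration of the general gain-map principle. It omits the offset and gamma terms that real gain-map metadata carries, and every function and parameter name here is hypothetical rather than taken from the standard.

```python
def headroom_weight(display_stops: float, min_stops: float, max_stops: float) -> float:
    """How much of the gain map to apply, from 0.0 (SDR display) to 1.0 (full HDR).

    `display_stops` is the display's current HDR headroom in stops (log2 units).
    """
    if max_stops <= min_stops:
        raise ValueError("max_stops must exceed min_stops")
    w = (display_stops - min_stops) / (max_stops - min_stops)
    return min(1.0, max(0.0, w))


def apply_gain(sdr_linear: float, gain_norm: float, max_boost_stops: float,
               weight: float, min_boost_stops: float = 0.0) -> float:
    """Reconstruct a display-adapted pixel from the SDR base and its gain value.

    `gain_norm` is the stored gain-map sample in [0, 1]. It is mapped to a boost
    in stops, scaled by the display weight, and applied in linear light.
    """
    boost_stops = min_boost_stops + gain_norm * (max_boost_stops - min_boost_stops)
    return sdr_linear * 2.0 ** (boost_stops * weight)


# The same pixel on three displays: SDR, moderate HDR, and full HDR headroom.
for stops in (0.0, 1.5, 3.0):
    w = headroom_weight(stops, min_stops=0.0, max_stops=3.0)
    print(f"headroom={stops} stops -> pixel={apply_gain(0.5, 1.0, 3.0, w):.3f}")
```

On the SDR display the weight is zero and the base image is shown unchanged, which is the backward-compatibility property. As headroom grows, the same file renders progressively brighter highlights without needing a separate export.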

In practical terms, this means that a photo captured on a modern smartphone can now travel freely across social networks, browsers, and operating systems. The same image can look natural on an SDR laptop while revealing intense specular highlights on a 3000-nit HDR display, without requiring multiple exports or manual adjustments.

Major industry players have already aligned behind this approach. Apple has integrated ISO 21496-1 into its photo pipeline, while Adobe supports gain maps in Camera Raw and Lightroom. Google’s Chrome team has also enabled proper interpretation at the browser level, signaling that HDR photos are no longer confined to native apps.

For the future of cross-platform HDR photos, the implication is clear. Instead of asking viewers to upgrade their hardware or software, gain maps allow images to meet viewers where they are. This shift transforms HDR from a niche feature into a reliable, sharable visual language that finally scales across devices and ecosystems.

Thermal Management, Sustained Brightness, and Real‑World Usability

Thermal behavior is the hidden variable that determines whether headline brightness figures translate into daily usability, and this is where the iPhone 17 Pro shows a clear generational shift. **High peak brightness only matters if it can be sustained without aggressive dimming**, and Apple’s approach combines display efficiency gains with a redesigned internal heat path.

At the display level, the improved OLED material efficiency and micro‑lens array architecture reduce the electrical current required to reach a given luminance. According to independent laboratory measurements reported by GSMArena, this directly lowers panel self‑heating during prolonged HDR playback. Less heat at the pixel level means the thermal budget can be spent on maintaining brightness rather than triggering early protection limits.

| Scenario | Initial Brightness | Sustained Behavior |
|---|---|---|
| Outdoor HDR highlights (small area) | Up to 3000 nits | Maintained briefly, then stabilizes as heat spreads |
| Full-screen white outdoors | Around 1600 nits | Gradual reduction instead of abrupt dimming |
| Manual brightness setting | 800–900 nits cap | Stable over long sessions |

The more consequential change lies inside the chassis. Teardown analyses and thermal imaging discussed by multiple hardware reviewers indicate the adoption of a hybrid system that pairs graphite sheets with a vapor chamber. **This design spreads heat more evenly across the internal frame**, reducing localized hotspots near the SoC and display driver ICs. As a result, brightness throttling becomes progressive rather than sudden, which users perceive as far less disruptive.
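The difference between progressive and sudden throttling can be illustrated with a toy limiter. Apple does not publish its thermal policy, so this is purely a sketch: the temperature thresholds, the function name, and the linear ramp are hypothetical, and only the approximate nit levels echo the table above.

```python
def brightness_cap(temp_c: float, full_nits: float = 1600.0, floor_nits: float = 800.0,
                   start_c: float = 38.0, limit_c: float = 45.0) -> float:
    """Progressive brightness limit as a function of temperature.

    Below `start_c` the full brightness is allowed; above `limit_c` the cap
    settles at `floor_nits`. In between, the cap ramps down linearly so the
    user sees a gradual change instead of a sudden step.
    All thresholds are hypothetical values for illustration.
    """
    if temp_c <= start_c:
        return full_nits
    if temp_c >= limit_c:
        return floor_nits
    frac = (temp_c - start_c) / (limit_c - start_c)
    return full_nits - frac * (full_nits - floor_nits)


for temp in (35.0, 40.0, 41.5, 44.0, 48.0):
    print(f"{temp:.1f} C -> cap {brightness_cap(temp):.0f} nits")
```

A step-based policy would jump from the full value straight to the floor at a single threshold. Spreading heat more evenly slows the temperature rise, and a ramp like this one is what makes the resulting dimming feel gradual.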

In real‑world use, this translates into better readability during navigation, photography, and video monitoring under strong sunlight. Ceramic Shield 2’s enhanced anti‑reflective coating further improves perceived contrast, so the screen often appears brighter than raw nit values suggest. Display engineers have long emphasized, including in publications from the Society for Information Display, that ambient contrast ratio is as important as peak luminance for outdoor visibility, and the iPhone 17 Pro reflects this principle in practice.
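Ambient contrast ratio can be estimated with a simple formula: add the light reflected by the screen to both the white and black levels, then take the ratio. The sketch below treats the reflection as diffuse (Lambertian), which is a simplification for a glass surface. The illuminance and reflectance values are hypothetical and are not measurements of Ceramic Shield 2.

```python
import math


def ambient_contrast_ratio(white_nits: float, black_nits: float,
                           ambient_lux: float, reflectance: float) -> float:
    """Contrast ratio as perceived under ambient light.

    Reflected luminance is approximated as illuminance * reflectance / pi,
    which holds for an ideal diffuse reflector (a simplifying assumption).
    """
    reflected = ambient_lux * reflectance / math.pi
    denominator = black_nits + reflected
    if denominator <= 0.0:
        raise ValueError("contrast is unbounded with zero black level and no ambient light")
    return (white_nits + reflected) / denominator


# Hypothetical comparison: same 1600-nit panel, two different coating reflectances.
for r in (0.05, 0.02):
    acr = ambient_contrast_ratio(1600.0, 0.0, ambient_lux=50_000.0, reflectance=r)
    print(f"reflectance={r:.2f} -> ambient contrast ~ {acr:.1f}:1")
```

Under these assumed values, cutting reflectance from 5% to 2% roughly doubles the ambient contrast ratio without adding a single nit, which is why a better coating can make a screen look brighter than its measured luminance suggests.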

**The key usability win is predictability**. Users are less likely to see the screen suddenly dim just as they frame a shot or review HDR footage. Instead, brightness gracefully adapts as thermal equilibrium is reached, preserving both comfort and battery health. This balance between thermal management and sustained brightness is what turns advanced display technology into a genuinely reliable everyday tool.

iPhone 17 Pro vs Competing Flagships: Different HDR Philosophies

When comparing the iPhone 17 Pro with competing Android flagships, the most meaningful difference does not lie in peak brightness numbers, but in the underlying HDR philosophy. Apple approaches HDR as a tool for visual fidelity rather than maximum visual impact, and this design choice consistently shapes tone mapping behavior.

The iPhone 17 Pro prioritizes perceptual realism over aggressive dynamic range expansion. Its Smart HDR 6 pipeline preserves deep shadows and applies a gradual highlight roll-off, ensuring that bright regions fade naturally instead of clipping. According to DxOMark’s camera analysis, this results in more stable skin tones and fewer artifacts around specular highlights, especially in mixed lighting scenes.

| Aspect | iPhone 17 Pro | Competing Flagships |
|---|---|---|
| Tone Mapping Style | Cinematic, contrast-preserving | Visibility-first, shadow lifting |
| Highlight Handling | Smooth roll-off | High-impact brightness |
| Real-time Processing | On-device ISP and NPU | Hybrid or cloud-assisted |

By contrast, devices such as the Pixel 10 Pro emphasize computational clarity. Shadows are lifted aggressively so that no information is lost, which can be beneficial for documentation but may flatten depth. TechRadar’s flagship comparison notes that this approach often looks impressive at first glance, yet feels less photographic during prolonged viewing.

Apple’s decision to anchor HDR output tightly to display characteristics, including MLA-enhanced OLED behavior and reflection control, means the image is tuned as a whole system. This integrated philosophy explains why the iPhone 17 Pro’s HDR often feels calmer, more natural, and ultimately easier on the eyes.

Professional Workflows: From ProRes Log to On‑Device HDR Monitoring

For professional creators, the iPhone 17 Pro is no longer just a capture device, but a fully integrated part of a serious production workflow. From ProRes Log acquisition to accurate on‑device HDR monitoring, Apple has clearly optimized this generation for situations where creative decisions must be made on set, not postponed to post‑production.

The key shift lies in how Apple Log footage is handled in real time. Log video intentionally compresses contrast and color to preserve maximum dynamic range, but that flat appearance has traditionally required external monitors or LUT boxes to interpret correctly. On the iPhone 17 Pro, the A19 Pro’s color management engine performs a precise view‑assist transformation, previewing Log footage as Rec.709 or HDR while keeping the original signal untouched.

This approach mirrors professional cinema cameras, where monitoring LUTs guide exposure and lighting without baking in a look. According to Apple’s own tone‑mapping documentation and Metal API guidelines, this preview pipeline is calculated using the display’s actual EOTF and peak luminance characteristics, reducing the guesswork that often plagues mobile HDR monitoring.
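The principle of a non-destructive view-assist can be sketched as follows. The log curve here is a generic toy curve and is explicitly not Apple Log, whose published constants are not reproduced. The constant `K` and the function names are hypothetical. Only the Rec.709 transfer function follows the standard formula.

```python
import math

K = 255.0  # hypothetical steepness of the toy log curve; NOT Apple Log's constants


def toy_log_encode(linear: float) -> float:
    """Encode scene-linear light (0..1) into a flat, log-like code value (0..1)."""
    return math.log2(1.0 + K * linear) / math.log2(1.0 + K)


def toy_log_decode(code: float) -> float:
    """Exact inverse of toy_log_encode."""
    return ((1.0 + K) ** code - 1.0) / K


def rec709_oetf(linear: float) -> float:
    """Rec.709 opto-electronic transfer function for the SDR preview."""
    linear = min(1.0, max(0.0, linear))
    if linear < 0.018:
        return 4.5 * linear
    return 1.099 * linear ** 0.45 - 0.099


def view_assist(code: float) -> float:
    """Preview value derived from the recorded code; the recording is not modified."""
    return rec709_oetf(toy_log_decode(code))


recorded = toy_log_encode(0.18)   # 18% gray as stored in the log file
preview = view_assist(recorded)   # what the monitor shows
print(f"recorded code: {recorded:.3f}, preview value: {preview:.3f}")
```

The recorded value is never overwritten. The preview is computed from it on the fly, which is the same separation a monitoring LUT provides on a cinema camera.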

| Workflow Stage | Traditional Mobile Limitation | iPhone 17 Pro Behavior |
|---|---|---|
| Log Capture | Flat preview, low confidence | Accurate HDR/SDR view-assist |
| Exposure Judgement | Histogram-dependent | Visual highlight roll-off visible |
| Color Decisions | Deferred to post | On-set skin tone evaluation |

The display itself is a critical enabler. With sustained HDR brightness around 1600 nits and momentary peaks far beyond that, specular highlights in Log footage can be evaluated meaningfully even outdoors. Review measurements cited by GSMArena indicate that the iPhone 17 Pro maintains high brightness longer than previous models, which directly impacts reliability when used as a reference screen.

This reliability is why some crews now treat the iPhone 17 Pro as a compact HDR field monitor. When paired with apps such as Blackmagic Camera or Capture One, the device can display a clean feed with LUT previews while simultaneously recording ProRes. Unlike cloud-assisted workflows, all tone mapping and monitoring occur on device, which avoids network round-trip latency and keeps color behavior consistent.

Another practical advantage is storage and data flow. Direct recording of 4K 120fps ProRes to external SSDs over USB‑C removes internal storage constraints, aligning the phone with established cinema data management practices. Apple’s implementation echoes guidance from Adobe and ISO working groups involved in HDR standardization, emphasizing predictability across capture, review, and post.

Ultimately, the iPhone 17 Pro succeeds not by mimicking professional tools superficially, but by respecting their logic. By unifying Log capture, mathematically correct HDR preview, and a high‑performance display in one device, Apple has reduced friction at the exact moments where creative intent is most fragile. For professionals, that reduction is often more valuable than raw specifications alone.

References

- GSMArena: Apple iPhone 17 Pro review: Lab tests – display and battery

- DxOMark: Apple iPhone 17 Pro Camera Test

- Apple Developer Documentation: Performing Your Own Tone Mapping

- Greg Benz Photography: ISO 21496-1 Gain Maps: Sharing HDR Photos Made Easier

- AppleInsider: Apple considers micro lens technology to make iPhone screens brighter

- What Hi-Fi?: What is tandem OLED screen tech and how does it work?